Zhaotao Wu

Slice Imputation: Intermediate Slice Interpolation for Anisotropic 3D Medical Image Segmentation

Mar 21, 2022

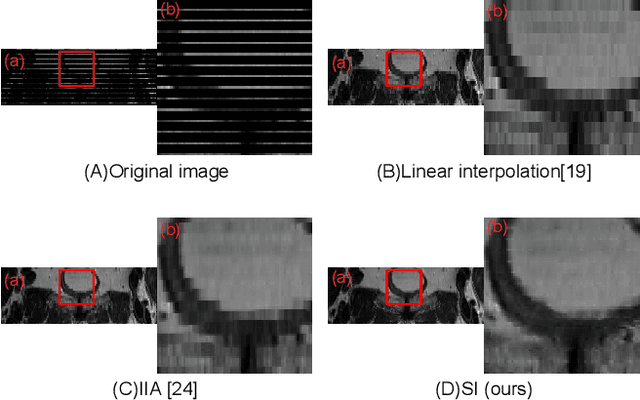

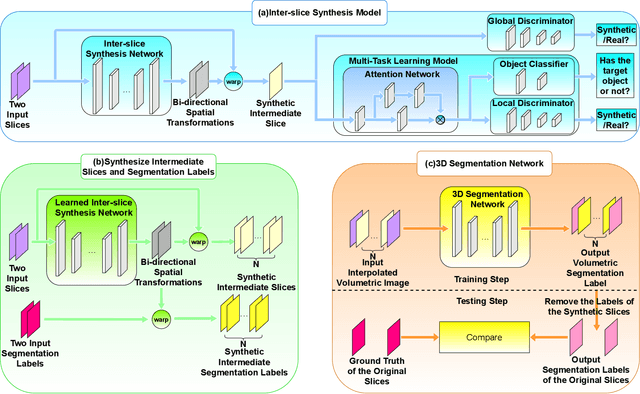

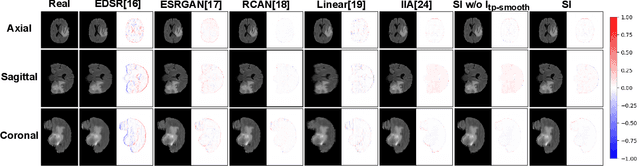

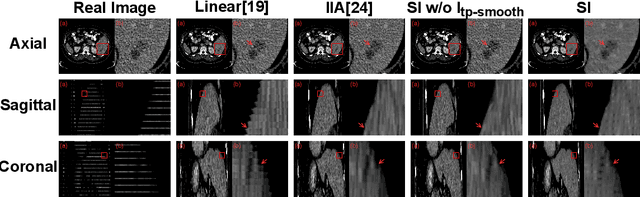

Abstract: We introduce a novel frame-interpolation-based method for slice imputation to improve segmentation accuracy for anisotropic 3D medical images, in which the number of slices and their corresponding segmentation labels can be increased between two consecutive slices in anisotropic 3D medical volumes. Unlike previous inter-slice imputation methods, which only focus on the smoothness in the axial direction, this study aims to improve the smoothness of the interpolated 3D medical volumes in all three directions: axial, sagittal, and coronal. The proposed multitask inter-slice imputation method, in particular, incorporates a smoothness loss function to evaluate the smoothness of the interpolated 3D medical volumes in the through-plane direction (sagittal and coronal). It not only improves the resolution of the interpolated 3D medical volumes in the through-plane direction but also transforms them into isotropic representations, which leads to better segmentation performance. Experiments on whole tumor segmentation in the brain, liver tumor segmentation, and prostate segmentation indicate that our method outperforms the competing slice imputation methods on both computed tomography and magnetic resonance image volumes in most cases.
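To make the phrase "isotropic representations" concrete, the arithmetic behind it can be sketched as follows. This is only an illustration, not the paper's procedure: the function name `isotropic_plan`, its parameters, and the rounding rule are assumptions introduced here. It computes how many slices a volume would have if enough intermediate slices were imputed between each consecutive pair to bring the slice spacing close to the in-plane pixel spacing.

```python
def isotropic_plan(num_slices, slice_spacing_mm, in_plane_spacing_mm):
    """Hypothetical helper: plan slice imputation toward isotropic voxels.

    Returns (factor, new_num_slices, new_slice_spacing_mm), where `factor`
    is the number of intervals each original inter-slice gap is split into.
    """
    if num_slices < 2:
        raise ValueError("need at least two slices to impute between them")
    # Assumption: round the spacing ratio to the nearest integer factor.
    factor = max(1, round(slice_spacing_mm / in_plane_spacing_mm))
    # Each of the (num_slices - 1) gaps gains (factor - 1) new slices.
    new_num_slices = (num_slices - 1) * factor + 1
    new_slice_spacing_mm = slice_spacing_mm / factor
    return factor, new_num_slices, new_slice_spacing_mm


# Example values are made up for illustration: 20 slices, 5 mm apart,
# with 1 mm in-plane pixel spacing.
plan = isotropic_plan(20, 5.0, 1.0)
```

With these made-up inputs, each gap is split into five intervals, so four slices are imputed between every consecutive pair. How those slices are actually synthesized is the job of the interpolation method described in the abstract.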

Inter-slice image augmentation based on frame interpolation for boosting medical image segmentation accuracy

Jan 31, 2020

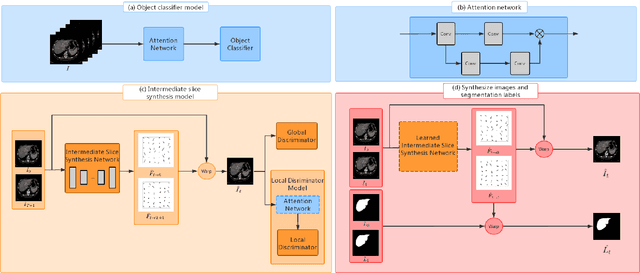

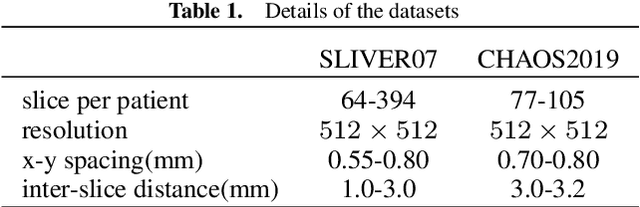

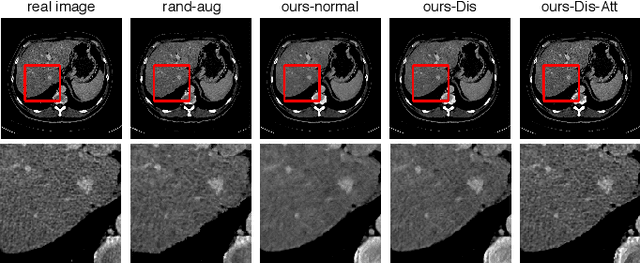

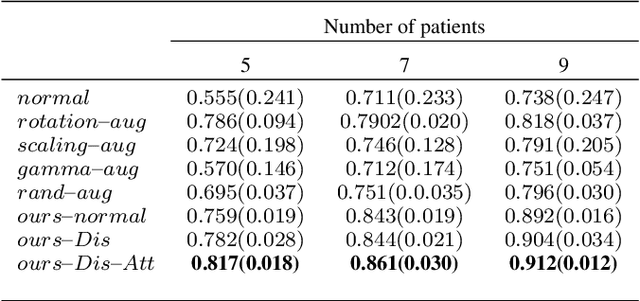

Abstract: We introduce the idea of inter-slice image augmentation, whereby the number of medical images and corresponding segmentation labels is increased between two consecutive images in order to boost medical image segmentation accuracy. Unlike conventional data augmentation methods in medical imaging, which only increase the number of training samples directly by adding new virtual samples using simple parameterized transformations such as rotation, flipping, and scaling, we aim to augment data based on the relationship between two consecutive images, which increases not only the number but also the information of training samples. For this purpose, we propose a frame-interpolation-based data augmentation method to generate intermediate medical images and the corresponding segmentation labels between two consecutive images. We train and test a supervised U-Net liver segmentation network on SLIVER07 and CHAOS2019, respectively, with the augmented training samples, and obtain segmentation scores exhibiting significant improvement compared to the conventional augmentation methods.
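The idea of producing an intermediate image together with its label can be sketched with a deliberately naive stand-in. The code below uses plain linear blending, not the learned frame-interpolation network the abstract proposes, and the function name `blend_intermediate`, the parameter `t`, and the 0.5 label threshold are all assumptions made for illustration.

```python
import numpy as np


def blend_intermediate(img_a, img_b, lab_a, lab_b, t=0.5):
    """Naive stand-in for inter-slice augmentation (NOT frame interpolation).

    img_a, img_b: 2D float arrays for two consecutive slices.
    lab_a, lab_b: 2D binary masks (0/1) for the same slices.
    t: position between the slices, 0 = slice a, 1 = slice b.
    Returns the blended image and a binary label for position t.
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    # Intermediate intensities: a weighted average of the two slices.
    img_t = (1.0 - t) * img_a + t * img_b
    # Intermediate label: blend the masks, then threshold at 0.5.
    # Ties (exactly 0.5) are counted as foreground by assumption.
    soft = (1.0 - t) * lab_a.astype(float) + t * lab_b.astype(float)
    lab_t = (soft >= 0.5).astype(np.uint8)
    return img_t, lab_t


# Toy 2x2 slices, made up for illustration.
img_a = np.zeros((2, 2))
img_b = np.full((2, 2), 2.0)
lab_a = np.array([[1, 0], [1, 0]], dtype=np.uint8)
lab_b = np.array([[1, 1], [0, 0]], dtype=np.uint8)
img_t, lab_t = blend_intermediate(img_a, img_b, lab_a, lab_b, t=0.25)
```

Linear blending tends to blur anatomy that shifts between slices, which is the kind of limitation a motion-aware interpolation model is meant to address. The sketch only shows the shape of the augmented data: one new image and label pair per chosen position `t`.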
