Zhanhong Huang

Parallel Processing of Point Cloud Ground Segmentation for Mechanical and Solid-State LiDARs

Aug 19, 2024

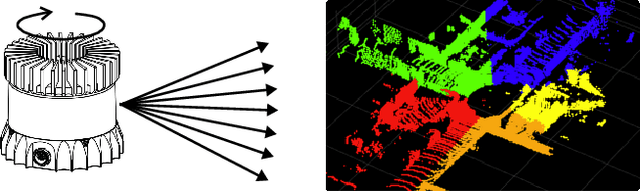

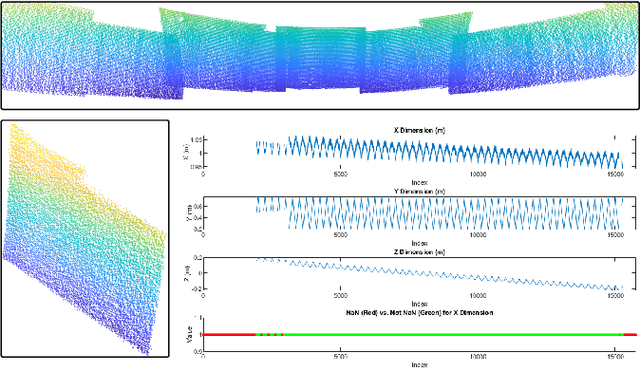

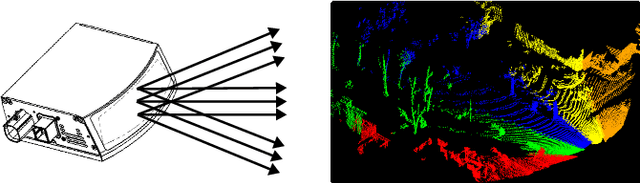

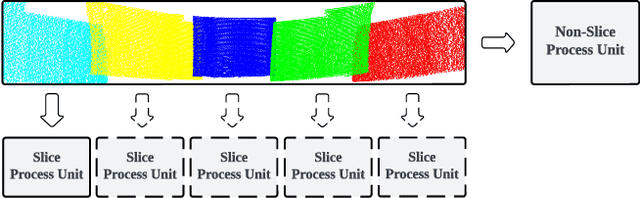

Abstract: In this study, we introduce a novel parallel processing framework for real-time point cloud ground segmentation on FPGA platforms, aimed at adapting LiDAR algorithms to the evolving landscape from mechanical to solid-state LiDAR (SSL) technologies. Focusing on the ground segmentation task, we explore parallel processing techniques for existing approaches and adapt them to real-world SSL data handling. We validated frame-segmentation-based parallel processing methods using point-based, voxel-based, and range-image-based ground segmentation approaches on the SemanticKITTI dataset, which was captured with a mechanical LiDAR. The results revealed the superior performance and robustness of the range-image method, especially its resilience to slicing. Further, using a custom dataset from our self-built Camera-SSLSS equipment, we examined regular SSL data frames and validated the effectiveness of our parallel approach for SSL sensors. Additionally, our pioneering implementation of range-image ground segmentation on FPGA for SSL sensors demonstrated significant processing speed improvements and resource efficiency, achieving processing rates up to 50.3 times faster than conventional CPU setups. These findings underscore the potential of parallel processing strategies to significantly enhance LiDAR technologies for advanced perception tasks in autonomous systems. Post-publication, both the data and the code will be made available on GitHub.
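The frame-slicing idea can be illustrated with a small sketch. It is not the paper's FPGA design: it assumes the frame is cut into equal azimuth sectors, and it uses a hypothetical per-slice classifier (`segment_slice`, a lowest-point height threshold) as a stand-in for the point-, voxel-, or range-image-based methods. The names `slice_by_azimuth`, `segment_frame`, and the `tolerance` parameter are illustrative assumptions.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def slice_by_azimuth(points, num_slices):
    """Group point indices into equal angular sectors around the sensor."""
    slices = [[] for _ in range(num_slices)]
    width = 2.0 * math.pi / num_slices
    for idx, (x, y, _z) in enumerate(points):
        azimuth = math.atan2(y, x) % (2.0 * math.pi)
        # min() guards against rounding that lands exactly on 2*pi.
        sector = min(int(azimuth / width), num_slices - 1)
        slices[sector].append(idx)
    return slices


def segment_slice(points, indices, tolerance=0.2):
    """Hypothetical stand-in classifier: ground = within `tolerance`
    metres of the lowest point in this slice."""
    if not indices:
        return []
    lowest = min(points[i][2] for i in indices)
    return [i for i in indices if points[i][2] - lowest <= tolerance]


def segment_frame(points, num_slices=4, tolerance=0.2):
    """Segment each slice independently and merge the ground indices."""
    slices = slice_by_azimuth(points, num_slices)
    with ThreadPoolExecutor(max_workers=num_slices) as pool:
        parts = list(pool.map(
            lambda s: segment_slice(points, s, tolerance), slices))
    return sorted(i for part in parts for i in part)


frame = [
    (1.0, 1.0, -1.7), (2.0, 2.0, 0.5),     # sector 0: ground, obstacle
    (-1.0, 1.0, -1.6), (-2.0, 2.0, -1.0),  # sector 1: ground, obstacle
    (-1.0, -1.0, -1.7),                    # sector 2: ground
    (1.0, -1.0, -1.75),                    # sector 3: ground
]
ground_indices = segment_frame(frame)
```

Because each slice sees only its own points, a method that relies on wide spatial context can degrade at slice borders, which is the kind of sensitivity to slicing the abstract compares across methods.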

Stream-Based Ground Segmentation for Real-Time LiDAR Point Cloud Processing on FPGA

Aug 19, 2024

Abstract: This paper presents a novel and fast approach for ground plane segmentation in a LiDAR point cloud, specifically optimized for processing speed and hardware efficiency on FPGA hardware platforms. Our approach leverages a channel-based segmentation method with an advanced angular data repair technique and a cross-eight-way flood-fill algorithm. This innovative approach significantly reduces the number of iterations while ensuring the high accuracy of the segmented ground plane, which makes the stream-based hardware implementation possible. To validate the proposed approach, we conducted extensive experiments on the SemanticKITTI dataset. We introduced a bird's-eye view (BEV) evaluation metric tailored for the area representation of LiDAR segmentation tasks. Our method demonstrated superior performance in terms of BEV areas when compared to the existing approaches. Moreover, we presented an optimized hardware architecture targeting a Zynq-7000 FPGA, compatible with LiDARs of various channel densities, i.e., 32, 64, and 128 channels. Our FPGA implementation, operating at 160 MHz, significantly outperforms traditional computing platforms: it is 12 to 25 times faster than the CPU-based solutions and up to 6 times faster than the GPU-based solution, with the added benefit of low power consumption.
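For readers unfamiliar with flood-fill segmentation, the sketch below shows a generic eight-neighbour flood fill over a grid of per-cell heights (rows as LiDAR channels, columns as azimuth bins). It is an assumption-laden illustration, not the paper's cross-eight-way variant, and it omits the angular data repair step. The function name `flood_fill_ground`, the `max_step` threshold, and the use of `None` for missing returns are all hypothetical.

```python
from collections import deque


def flood_fill_ground(heights, seeds, max_step=0.15):
    """Grow a ground mask from seed cells to any of the 8 neighbours
    whose height differs by at most `max_step` metres.
    Cells holding None (no LiDAR return) are never labelled."""
    rows, cols = len(heights), len(heights[0])
    ground = [[False] * cols for _ in range(rows)]
    queue = deque()
    for r, c in seeds:
        if heights[r][c] is not None and not ground[r][c]:
            ground[r][c] = True
            queue.append((r, c))
    neighbours = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1)
                  if (dr, dc) != (0, 0)]
    while queue:
        r, c = queue.popleft()
        for dr, dc in neighbours:
            nr, nc = r + dr, c + dc
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue  # no azimuth wrap-around in this sketch
            if ground[nr][nc] or heights[nr][nc] is None:
                continue
            if abs(heights[nr][nc] - heights[r][c]) <= max_step:
                ground[nr][nc] = True
                queue.append((nr, nc))
    return ground


grid = [
    [-1.70, -1.70, 0.40],
    [-1.65, None, 0.50],
    [-1.60, -1.62, -1.60],
]
mask = flood_fill_ground(grid, seeds=[(0, 0)])
```

A queue-based fill like this visits cells in a data-dependent order. The abstract says the paper's approach cuts the number of iterations, and that this is what makes a stream-based hardware implementation possible.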

Real-Time Joint Simulation of LiDAR Perception and Motion Planning for Automated Driving

May 11, 2023

Abstract: Real-time perception and motion planning are two crucial tasks for autonomous driving. While many research works focus on improving the performance of perception and motion planning individually, it is still not clear how a perception error may adversely impact the motion planning results. In this work, we propose a joint simulation framework with LiDAR-based perception and motion planning for real-time automated driving. Taking the sensor input from the CARLA simulator with additive noise, a LiDAR perception system is designed to detect and track all surrounding vehicles and to provide precise orientation and velocity information. Next, we introduce a new collision bound representation that reduces the communication cost between the perception module and the motion planner. A novel collision checking algorithm is implemented using line intersection checking, which is more efficient at long range than the traditional occupancy-grid method. We evaluate the joint simulation framework in CARLA for urban driving scenarios. Experiments show that our proposed automated driving system can execute at 25 Hz, which meets the real-time requirement. The LiDAR perception system has high accuracy within 20 meters when evaluated against the ground truth. The motion planner keeps a consistently safe distance when tested in CARLA urban driving scenarios.
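Collision checking by line intersection can be sketched with the standard orientation test for 2D segments. This is a minimal illustration under assumptions, not the paper's algorithm or its collision bound representation: the planned path is taken to be a polyline of waypoints, and each obstacle a convex polygon given by its corners. The names `segments_intersect` and `path_hits_polygon` are hypothetical.

```python
def orientation(p, q, r):
    """Cross product (q - p) x (r - p): > 0 if r is left of pq,
    < 0 if right, 0 if the three points are collinear."""
    return (q[0] - p[0]) * (r[1] - p[1]) - (q[1] - p[1]) * (r[0] - p[0])


def on_segment(p, q, r):
    """For r collinear with pq: True if r lies between p and q."""
    return (min(p[0], q[0]) <= r[0] <= max(p[0], q[0])
            and min(p[1], q[1]) <= r[1] <= max(p[1], q[1]))


def segments_intersect(a, b, c, d):
    """True if segment ab and segment cd share at least one point."""
    o1, o2 = orientation(a, b, c), orientation(a, b, d)
    o3, o4 = orientation(c, d, a), orientation(c, d, b)
    if o1 * o2 < 0 and o3 * o4 < 0:
        return True  # proper crossing
    # Collinear or touching cases.
    return ((o1 == 0 and on_segment(a, b, c))
            or (o2 == 0 and on_segment(a, b, d))
            or (o3 == 0 and on_segment(c, d, a))
            or (o4 == 0 and on_segment(c, d, b)))


def path_hits_polygon(path, corners):
    """Check every path segment against every polygon edge.
    Note: a path lying entirely inside the polygon is not detected."""
    edges = list(zip(corners, corners[1:] + corners[:1]))
    return any(segments_intersect(a, b, c, d)
               for a, b in zip(path, path[1:])
               for c, d in edges)


box = [(4.0, -1.0), (6.0, -1.0), (6.0, 1.0), (4.0, 1.0)]
blocked = path_hits_polygon([(0.0, 0.0), (10.0, 0.0)], box)
clear = path_hits_polygon([(0.0, 3.0), (10.0, 3.0)], box)
```

The cost here depends on the number of path segments and obstacle edges rather than on the area covered, whereas an occupancy grid must cover the whole region of interest. That is one plausible reading of the long-range efficiency claim.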
