Zengxi Huang

Study on Sparse Representation based Classification for Biometric Verification

Feb 27, 2015

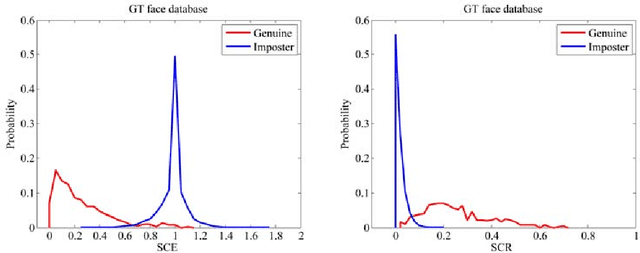

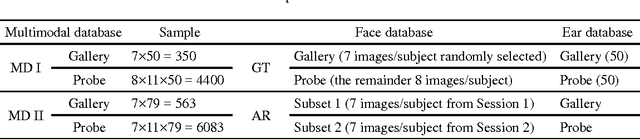

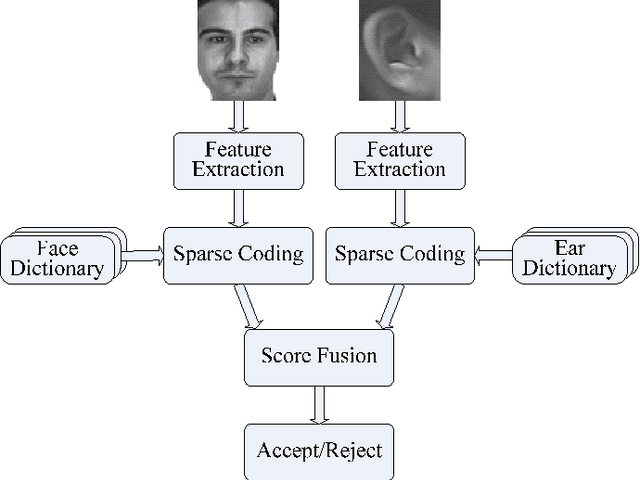

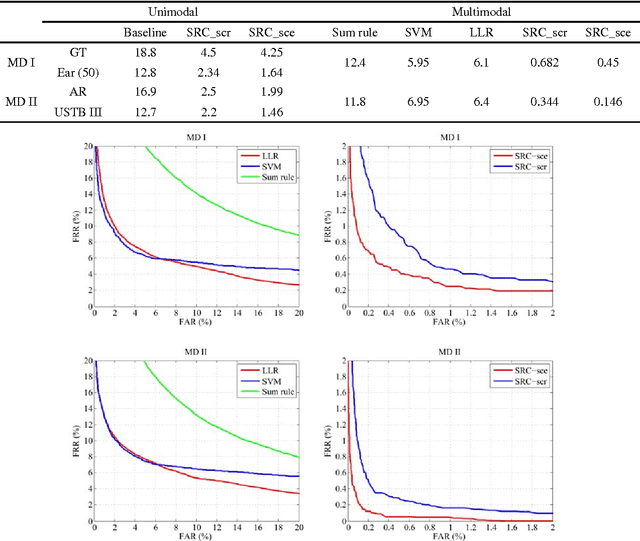

Abstract: In this paper, we propose a multimodal verification system that integrates face and ear biometrics using sparse representation based classification (SRC). The face and ear query samples are first encoded separately to derive sparsity-based match scores, which are then combined by sum-rule fusion for verification. Beyond validating the encouraging performance of SRC-based multimodal verification, this paper also aims to provide a clear understanding of the characteristics of SRC-based biometric verification. To this end, two sparsity-based metrics, sparse coding error (SCE) and sparse contribution rate (SCR), are examined, together with face and ear unimodal SRC-based verification. SRC-based biometric verification may suffer from a heavy computational burden and degraded verification accuracy as the number of enrolled subjects increases. We argue that this issue can be resolved by computing the sparsity-based scores over a small random dictionary, which consists of training samples from a limited number of randomly selected subjects. Experimental results demonstrate that SRC-based multimodal verification outperforms state-of-the-art multimodal methods such as likelihood ratio (LLR), support vector machine (SVM), and sum-rule fusion using cosine similarity, and that the small random dictionary is both effective and efficient.
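The pipeline described in the abstract can be illustrated with a minimal sketch. It is not the paper's implementation, and several parts are assumptions. The abstract does not define the two metrics, so SCE is taken here as the relative reconstruction error using only the claimed subject's atoms, and SCR as the claimed subject's share of the l1 norm of the sparse code. The sparse solver is a plain orthogonal matching pursuit chosen for brevity, the small random dictionary is assumed to always contain the claimed subject, and all function names and the toy data are hypothetical.

```python
import numpy as np

def omp(D, y, n_nonzero):
    """Greedy orthogonal matching pursuit: code y with at most n_nonzero atoms of D."""
    residual, support, coef = y.copy(), [], np.zeros(D.shape[1])
    for _ in range(n_nonzero):
        idx = int(np.argmax(np.abs(D.T @ residual)))
        if idx in support:  # nothing new to add
            break
        support.append(idx)
        sol, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ sol
    if support:
        coef[support] = sol
    return coef

def sparsity_scores(D, labels, y, claimed, n_nonzero=5):
    """Return (SCE, SCR) for the claimed identity; both definitions are assumptions."""
    D = D / np.linalg.norm(D, axis=0, keepdims=True)  # unit-norm atoms
    x = omp(D, y, n_nonzero)
    mask = labels == claimed
    sce = np.linalg.norm(y - D[:, mask] @ x[mask]) / np.linalg.norm(y)  # lower is better
    total = np.abs(x).sum()
    scr = np.abs(x[mask]).sum() / total if total > 0 else 0.0  # higher is better
    return sce, scr

def small_random_dictionary(D, labels, claimed, n_subjects, rng):
    """Keep the claimed subject plus n_subjects randomly chosen other subjects."""
    others = np.setdiff1d(np.unique(labels), [claimed])
    chosen = rng.choice(others, size=min(n_subjects, len(others)), replace=False)
    keep = np.isin(labels, np.append(chosen, claimed))
    return D[:, keep], labels[keep]

# Toy data (synthetic, for illustration only): 10 subjects, 4 samples each.
rng = np.random.default_rng(0)
labels = np.repeat(np.arange(10), 4)

def toy_modality(dim):
    """Gallery of noisy per-subject templates and a query from subject 3."""
    means = rng.normal(size=(dim, 10))
    gallery = means[:, labels] + 0.05 * rng.normal(size=(dim, labels.size))
    query = means[:, 3] + 0.05 * rng.normal(size=dim)
    return gallery, query

face_D, face_q = toy_modality(40)
ear_D, ear_q = toy_modality(30)

def verify(claimed, n_subjects=3):
    """Sum-rule fusion of the face and ear SCR scores over small random dictionaries."""
    fused = 0.0
    for D, q in ((face_D, face_q), (ear_D, ear_q)):
        D_small, labels_small = small_random_dictionary(D, labels, claimed, n_subjects, rng)
        fused += sparsity_scores(D_small, labels_small, q, claimed)[1]
    return fused

genuine = verify(claimed=3)   # query really belongs to subject 3
impostor = verify(claimed=7)  # same query, false identity claim
```

In this sketch a claim would be accepted when the fused score exceeds a threshold tuned on held-out data. SCE could be fused in the same way after negating it, since a lower error indicates a better match.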
