Zelin Ji

Parameter Estimation based Automatic Modulation Recognition for Radio Frequency Signal

Dec 11, 2024

Abstract: Automatic modulation recognition (AMR) contributes critically to spectrum sensing, dynamic spectrum access, and intelligent communications in cognitive radio systems. The introduction of deep learning has greatly improved the accuracy of AMR. However, current automatic identification methods require key parameters such as the carrier frequency as input, because these are needed to convert the radio frequency (RF) signal to a base-band signal before it can be identified. In addition, the high complexity of deep learning models leads to heavy computational effort and long recognition times, which makes existing methods difficult to deploy in demodulation systems. To address these issues, in this paper we first use power spectrum analysis to estimate the carrier frequency and signal bandwidth, which enables effective conversion of RF signals to base-band signals. We then choose the long short-term memory (LSTM) network as the recognition model, since it has low implementation complexity while maintaining high accuracy. Finally, by training the LSTM on actual sampled data combined with parameter estimation (PE), the proposed method achieves more than 90% format recognition accuracy.
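The abstract does not spell out the power-spectrum estimator, so the following is only a minimal sketch under our own assumptions:

- The spectrum is a one-sided periodogram, computed with a direct DFT to stay dependency-free.
- The occupied band is every bin within `threshold_db` of the spectral peak.
- The carrier estimate is the power-weighted centroid of that band, and the bandwidth estimate is its span.
- Mixing by the estimated carrier gives a complex base-band signal.

All function names and the 3 dB default are hypothetical, not taken from the paper.

```python
import cmath
import math

def periodogram(x, fs):
    """One-sided periodogram via a direct DFT (O(N^2); fine for a short illustration)."""
    n = len(x)
    freqs, power = [], []
    for k in range(n // 2 + 1):
        acc = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        freqs.append(k * fs / n)
        power.append(abs(acc) ** 2 / n)
    return freqs, power

def estimate_carrier_and_bandwidth(x, fs, threshold_db=3.0):
    """Hypothetical estimator: keep bins within threshold_db of the peak, return
    their power-weighted centroid (carrier) and occupied span (bandwidth)."""
    freqs, power = periodogram(x, fs)
    floor = max(power) * 10 ** (-threshold_db / 10)
    occupied = [i for i, p in enumerate(power) if p >= floor]
    total = sum(power[i] for i in occupied)
    carrier = sum(freqs[i] * power[i] for i in occupied) / total
    bandwidth = freqs[occupied[-1]] - freqs[occupied[0]]
    return carrier, bandwidth

def downconvert(x, fs, fc):
    """Mix real RF samples to complex base band (the low-pass filter is omitted)."""
    return [x[t] * cmath.exp(-2j * math.pi * fc * t / fs) for t in range(len(x))]
```

For example, take three equal-amplitude tones at 90, 100 and 110 Hz, sampled at 1 kHz for 200 samples. The sketch gives a carrier estimate of about 100 Hz and a bandwidth of 20 Hz. A practical version would use an FFT, spectral averaging (for instance Welch's method) and a low-pass filter after mixing.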

Transfer Learning Guided Noise Reduction for Automatic Modulation Classification

Nov 13, 2024

Abstract: Automatic modulation classification (AMC) has emerged as a key technique in cognitive radio networks for sixth-generation (6G) communications. AMC enables effective data transmission without requiring prior knowledge of modulation schemes. However, low classification accuracy at low signal-to-noise ratio (SNR) limits the use of AMC techniques over the rapidly changing physical channels of 6G and beyond. This paper investigates AMC for signals with dynamic, varying SNRs and proposes a deep learning based noise reduction network to reduce the noise introduced by the wireless channel and the receiving equipment. In particular, a transfer learning guided framework (TNR-AMC) is proposed to exploit scarce annotated modulation signals and improve the classification accuracy for low-SNR signals. Numerical results show that the proposed noise reduction network improves accuracy by over 20% in low-SNR scenarios, and that the TNR-AMC framework improves classification accuracy under unstable SNRs.
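Studying noise reduction for signals with varying SNRs presupposes noisy samples at controlled SNR levels. The abstract does not say how such data were produced. As an illustration only, the sketch below adds complex additive white Gaussian noise (AWGN) at a target SNR to base-band symbols and checks the realised SNR. `add_awgn` and `measured_snr_db` are hypothetical helper names.

```python
import math
import random

def add_awgn(signal, snr_db, rng):
    """Add complex white Gaussian noise so that signal power / noise power = snr_db."""
    p_sig = sum(abs(s) ** 2 for s in signal) / len(signal)
    p_noise = p_sig / (10 ** (snr_db / 10))
    sigma = math.sqrt(p_noise / 2)  # noise power split equally between I and Q
    return [s + complex(rng.gauss(0, sigma), rng.gauss(0, sigma)) for s in signal]

def measured_snr_db(clean, noisy):
    """Realised SNR in dB, computed from the clean signal and the added noise."""
    p_sig = sum(abs(s) ** 2 for s in clean)
    p_noise = sum(abs(n - s) ** 2 for s, n in zip(clean, noisy))
    return 10 * math.log10(p_sig / p_noise)
```

Drawing a fresh SNR per batch (for example from -10 dB to 10 dB) is one simple way to emulate the "dynamic and varying SNRs" setting. This too is an assumption, not the paper's protocol.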

Computational Offloading in Semantic-Aware Cloud-Edge-End Collaborative Networks

Feb 28, 2024

Abstract: The trend toward massive connectivity is driving explosive growth in the number of end devices. The emergence of various applications has created demand for pervasive connectivity and more efficient computing paradigms. However, the limited computational capacity of end devices restricts the implementation of intelligent applications and becomes a bottleneck for the multiple access needed to support massive connectivity. Mobile cloud computing (MCC) and mobile edge computing (MEC) techniques enable end devices to offload local computation-intensive tasks to servers over the network. In this paper, we consider cloud-edge-end collaborative networks to utilize distributed computing resources. Furthermore, we apply task-oriented semantic communications to cope with the fast-varying channel between the end devices and MEC servers and to reduce the communication cost. To minimize long-term energy consumption subject to queue stability and computational delay constraints, a Lyapunov-guided deep reinforcement learning hybrid (DRLH) framework is proposed to solve the resulting mixed integer non-linear programming (MINLP) problem. The long-term energy consumption minimization problem is transformed into a deterministic problem in each time frame. The DRLH framework integrates a model-free deep reinforcement learning algorithm with a model-based mathematical optimization algorithm to reduce computational complexity and exploit scenario information, thereby improving convergence performance. Numerical results demonstrate that the proposed DRLH framework achieves near-optimal energy consumption while stabilizing all queues.
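The per-frame transformation mentioned above is the standard Lyapunov drift-plus-penalty idea. The queue evolves as Q(t+1) = max(Q(t) - b(t), 0) + a(t). Each frame then minimises V·E(t) - Q(t)·b(t) over the available actions.

This is a minimal sketch, assuming a single task queue and a small hypothetical set of discrete actions with known energy cost and served bits. The exhaustive `min` stands in for the paper's hybrid DRL/optimisation solver, which is not reproduced here.

```python
from dataclasses import dataclass

@dataclass
class Action:
    name: str
    energy: float  # energy spent in this frame (hypothetical units)
    served: float  # task bits processed or offloaded in this frame (hypothetical)

def update_queue(q, arrivals, served):
    """Lyapunov queue recursion: Q(t+1) = max(Q(t) - b(t), 0) + a(t)."""
    return max(q - served, 0.0) + arrivals

def drift_plus_penalty_choice(q, actions, v):
    """Solve the deterministic per-frame problem min V*E(t) - Q(t)*b(t) by enumeration."""
    return min(actions, key=lambda a: v * a.energy - q * a.served)
```

The parameter V trades energy against backlog. A large V favours low-energy actions. A growing queue Q(t) pushes the choice toward actions that serve more bits, which is what keeps the queue stable.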

Meta Federated Reinforcement Learning for Distributed Resource Allocation

Jul 09, 2023

Abstract: In cellular networks, resource allocation is usually performed in a centralized way, which imposes high computational complexity on the base station (BS) and high transmission overhead. This paper explores a distributed resource allocation method that aims to maximize energy efficiency (EE) while ensuring the quality of service (QoS) for users. Specifically, to adapt to wireless channel conditions, we propose a robust meta federated reinforcement learning (MFRL) framework. It allows local users to optimize transmit power and channel assignment using locally trained neural network models, thereby offloading computational burden from the cloud server to the local users and reducing the transmission overhead associated with local channel state information. The BS performs a meta learning procedure to initialize a general global model, enabling rapid adaptation to different environments with improved EE performance. The federated learning technique, based on decentralized reinforcement learning, promotes collaboration and mutual benefit among users. Analysis and numerical results demonstrate that the proposed MFRL framework accelerates the reinforcement learning process, decreases transmission overhead, and offloads computation, while outperforming the conventional decentralized reinforcement learning algorithm in convergence speed and EE performance across various scenarios.
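The abstract leaves the meta learning procedure unspecified. As a hedged illustration, the sketch uses a Reptile-style federated update, a swapped-in technique that is not necessarily the authors' method:

1. Each user adapts the global initialization to its own environment with a few gradient steps.
2. The BS moves the initialization toward the average of the adapted parameters.

A toy quadratic loss ||θ - c||² stands in for each user's reinforcement learning objective. All names and step sizes are hypothetical.

```python
def local_adapt(theta, target, lr=0.1, steps=5):
    """Hypothetical user-side training: gradient steps on the toy loss
    ||theta - target||^2, standing in for the local RL objective."""
    phi = list(theta)
    for _ in range(steps):
        phi = [p - lr * 2.0 * (p - c) for p, c in zip(phi, target)]
    return phi

def reptile_round(theta, user_targets, meta_lr=0.5):
    """BS-side Reptile-style meta update: move the global initialization toward
    the average of the users' adapted parameters."""
    adapted = [local_adapt(theta, t) for t in user_targets]
    n = len(adapted)
    return [th + meta_lr * sum(phi[j] - th for phi in adapted) / n
            for j, th in enumerate(theta)]
```

With two users whose toy optima are (1, -1) and (3, 1), repeated rounds drive the shared initialization to (2, 0). From there, a few local steps reach either user's optimum, which is the "rapid adaptation" property the meta initialization is meant to provide.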

Energy-Efficient Task Offloading for Semantic-Aware Networks

Jan 20, 2023

Abstract: The limited computation capacity of user equipment restricts the local execution of computation-intensive applications. Edge computing, and especially edge intelligence systems, enables local users to offload computation tasks to edge servers, reducing the computational energy consumption of user equipment and speeding up task execution. However, the limited bandwidth of uplink channels may increase task transmission latency and degrade computation offloading performance. To overcome the limited wireless communication resources, we adopt a semantic-aware task offloading system, in which the semantic information of tasks is extracted and offloaded to the edge servers. Furthermore, a multi-agent reinforcement learning algorithm based on proximal policy optimization (MAPPO) is proposed to coordinate wireless communication and computation resources. Resource management can therefore be performed in a distributed manner, and the computational complexity of the online algorithm is reduced.
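MAPPO applies the clipped surrogate objective of proximal policy optimization to each agent's policy, usually with advantages from a centralized critic. The sketch below shows only that objective, computed from log-probabilities and precomputed advantages. The critic, the paper's state and action design, and the `eps=0.2` default are assumptions outside the abstract.

```python
import math

def ppo_clip_objective(logp_new, logp_old, advantages, eps=0.2):
    """PPO clipped surrogate objective (to be maximised), averaged over samples."""
    terms = []
    for ln, lo, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(ln - lo)                      # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)
        terms.append(min(ratio * adv, clipped * adv))  # pessimistic bound
    return sum(terms) / len(terms)

def mappo_objectives(per_agent_batches, eps=0.2):
    """One clipped objective per agent; each batch is (logp_new, logp_old, advantages),
    with advantages assumed to come from a shared centralised critic."""
    return [ppo_clip_objective(*batch, eps=eps) for batch in per_agent_batches]
```

Clipping limits how far one update can move each agent's policy. This matters when several agents learn at once and each one sees the others as part of a changing environment.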

Federated Learning for Distributed Energy-Efficient Resource Allocation

Apr 20, 2022

Abstract: In cellular networks, resource allocation is performed in a centralized way, which imposes high computational complexity on the base station (BS) and high transmission overhead. This paper investigates a distributed resource allocation scheme for cellular networks that maximizes the energy efficiency (EE) of the system in uplink transmission while guaranteeing the quality of service (QoS) for cellular users. In particular, to cope with the fast-varying channels of the wireless communication environment, we propose a robust federated reinforcement learning (FRL_suc) framework. It enables local users to perform distributed resource allocation in terms of transmit power and channel assignment, guided by a local neural network trained at each user. Analysis and numerical results show that the proposed FRL_suc framework lowers the transmission overhead and offloads computation from the central server to the local users. It also outperforms the conventional multi-agent reinforcement learning algorithm in terms of EE and is more robust to channel variations.
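The abstract does not state how the users' local networks are combined. Federated averaging (FedAvg) is the most common aggregation rule and is assumed here purely for illustration: the server's global parameters are the sample-weighted mean of the users' local parameter vectors. `fedavg` and its arguments are hypothetical names.

```python
def fedavg(local_weights, sample_counts):
    """Federated averaging: weight each user's parameter vector by its number of
    local samples and return the weighted mean as the global model."""
    total = sum(sample_counts)
    dim = len(local_weights[0])
    return [sum(w[j] * n for w, n in zip(local_weights, sample_counts)) / total
            for j in range(dim)]
```

Only parameter vectors travel to the server, not raw channel state information. This is consistent with the lower transmission overhead the abstract reports.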

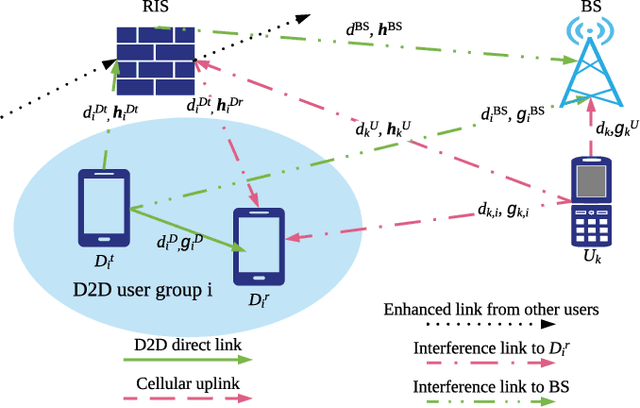

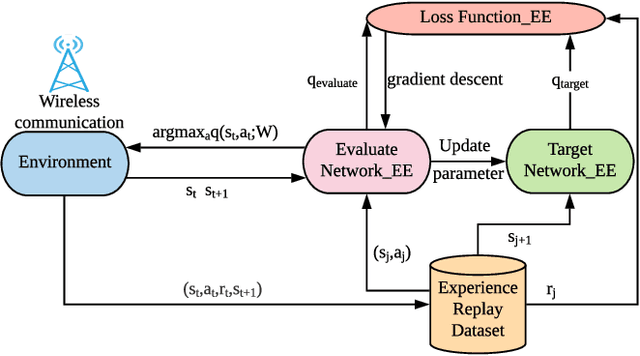

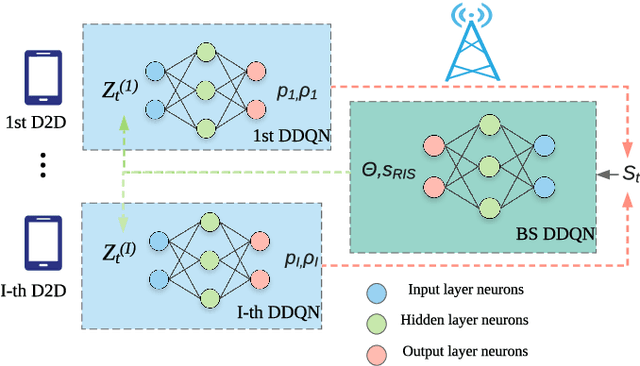

Reconfigurable Intelligent Surface Assisted Device-to-Device Communications

Jul 05, 2021

Abstract: Reconfigurable intelligent surface (RIS) technology is a promising method to enhance wireless communication services and to realize the smart radio environment. In this paper, we investigate the application of RIS in device-to-device (D2D) communications and maximize the sum transmission rate of D2D underlaying networks from a new perspective. Instead of solving similarly formulated resource allocation problems for D2D communications, this paper treats the wireless environment as a variable by adjusting the position and phase shift of the RIS. To solve this non-convex problem, we propose a novel double deep Q-network (DDQN) based structure that achieves near-optimal performance with lower complexity and enhanced robustness. Simulation results illustrate that the proposed DDQN based structure achieves a higher uplink rate than the benchmarks while meeting the quality of service (QoS) requirements at the base station (BS) and D2D receivers.
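The defining step of a double deep Q-network is its learning target. The online network selects the next action, and the target network evaluates it, which curbs the overestimation of plain DQN. A minimal sketch of that target follows. Treating RIS positions and phase shifts as a discrete action set is an assumption, and the Q-value lists are hypothetical stand-ins for the two networks' outputs.

```python
def ddqn_target(reward, q_online_next, q_target_next, gamma=0.9, done=False):
    """Double DQN target y = r + gamma * Q_target(s', argmax_a Q_online(s', a))."""
    if done:
        return reward
    best = max(range(len(q_online_next)), key=lambda a: q_online_next[a])
    return reward + gamma * q_target_next[best]
```

Consider online values [1, 5, 2], target values [4, 3, 10] and a reward of 1. The DDQN target is 1 + 0.9·3 = 3.7, whereas plain DQN would bootstrap from the target network's maximum and give 1 + 0.9·10 = 10.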