Yuze Chi

SPA-GCN: Efficient and Flexible GCN Accelerator with an Application for Graph Similarity Computation

Nov 10, 2021

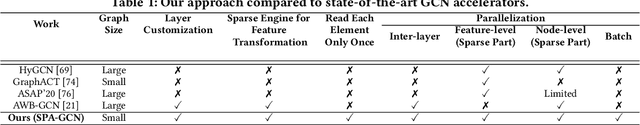

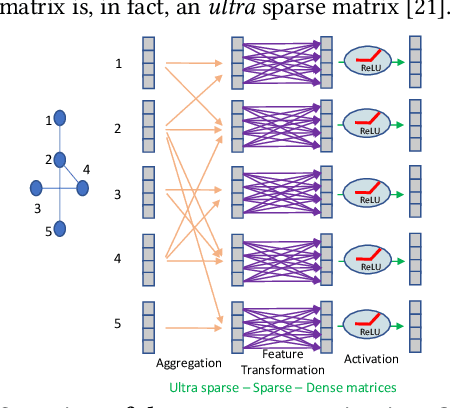

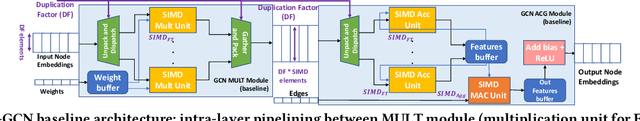

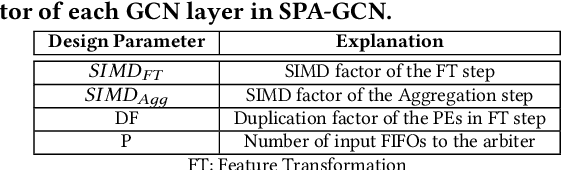

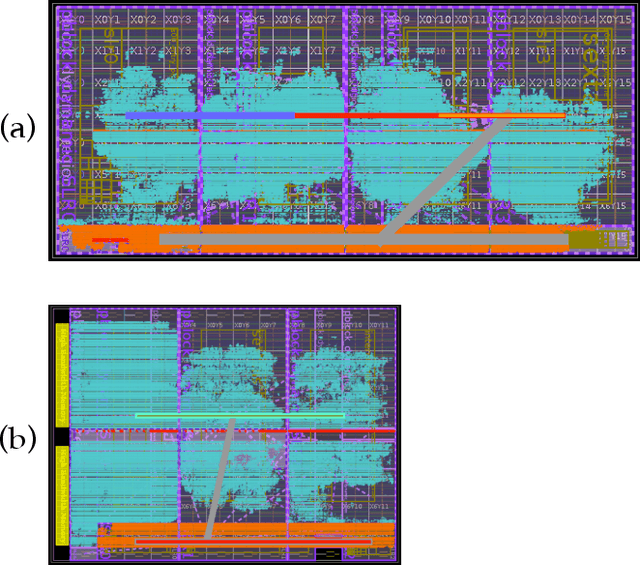

Abstract: While there have been many studies on hardware acceleration for deep learning on images, there has been a rather limited focus on accelerating deep learning applications involving graphs. The unique characteristics of graphs, such as the irregular memory access and dynamic parallelism, impose several challenges when the algorithm is mapped to a CPU or GPU. To address these challenges while exploiting all the available sparsity, we propose a flexible architecture called SPA-GCN for accelerating Graph Convolutional Networks (GCN), the core computation unit in deep learning algorithms on graphs. The architecture is specialized for dealing with many small graphs since the graph size has a significant impact on design considerations. In this context, we use SimGNN, a neural-network-based graph matching algorithm, as a case study to demonstrate the effectiveness of our architecture. The experimental results demonstrate that SPA-GCN can deliver a high speedup compared to a multi-core CPU implementation and a GPU implementation, showing the efficiency of our design.

Pyxis: An Open-Source Performance Dataset of Sparse Accelerators

Oct 08, 2021

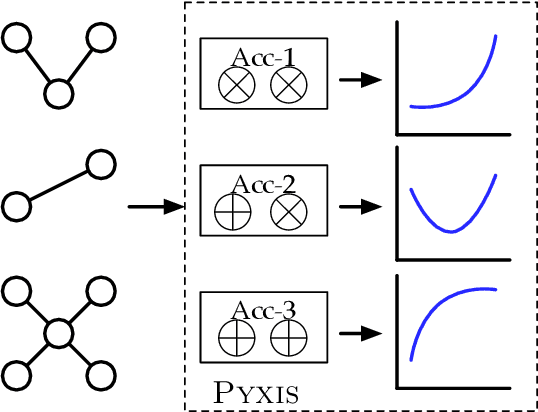

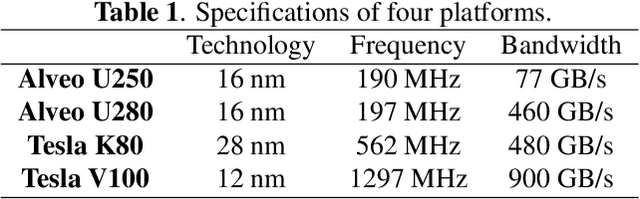

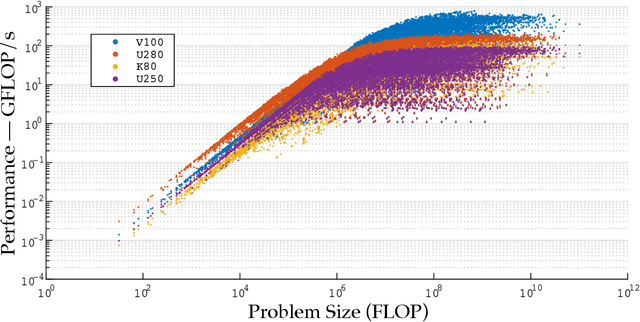

Abstract: Specialized accelerators provide performance and efficiency gains in specific application domains. Sparse data structures and/or representations exist in a wide range of applications. However, it is challenging to design accelerators for sparse applications because no analytic architecture or performance-level models are able to fully capture the spectrum of the sparse data. Accelerator researchers rely on real execution to get precise feedback for their designs. In this work, we present PYXIS, a performance dataset for specialized accelerators on sparse data. PYXIS collects accelerator designs and real execution performance statistics. Currently, there are 73.8 K instances in PYXIS. PYXIS is open-source, and we are constantly growing PYXIS with new accelerator designs and performance statistics. PYXIS can benefit researchers in the fields of accelerator, architecture, performance, algorithm, and many related topics.
