Yunqin Li

Multi-Modal Feature Fusion for Spatial Morphology Analysis of Traditional Villages via Hierarchical Graph Neural Networks

Oct 31, 2025

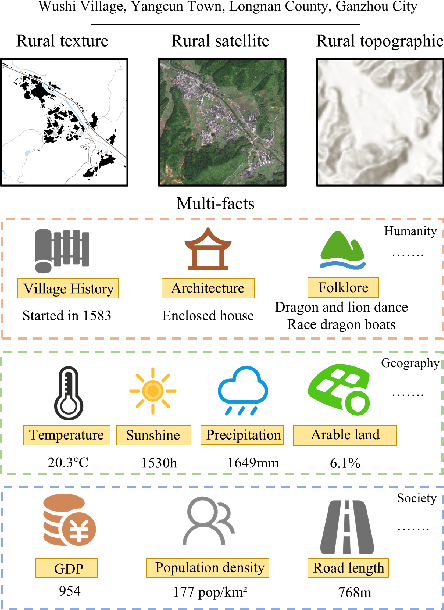

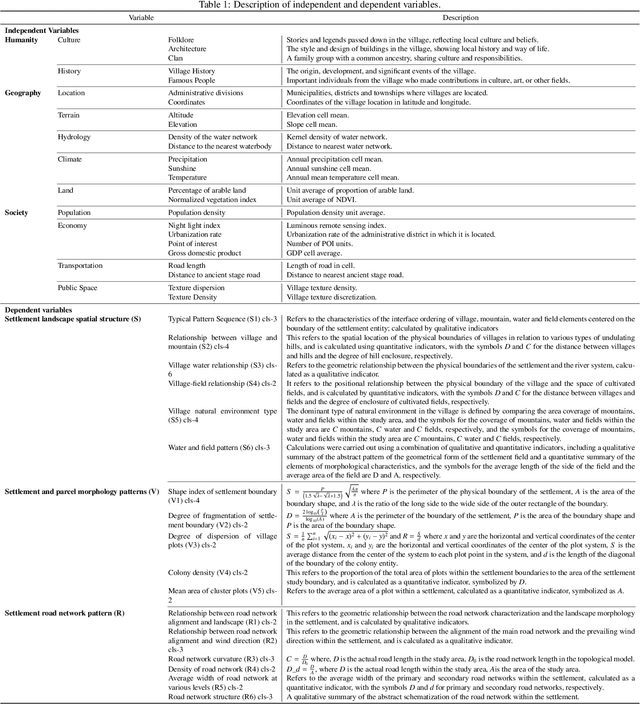

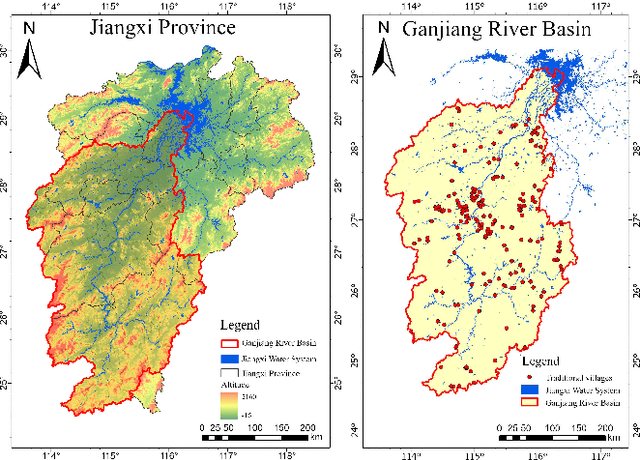

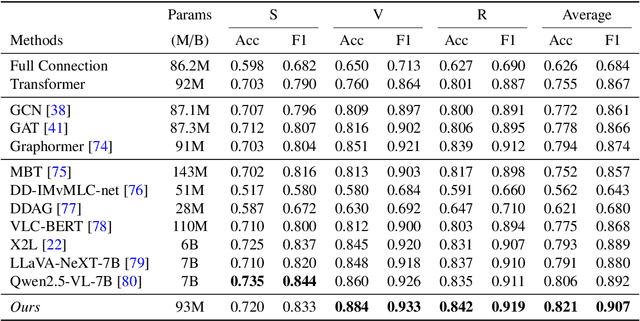

Abstract: Village areas hold significant importance in the study of human-land relationships. However, as urbanization advances, the gradual disappearance of spatial characteristics and the homogenization of landscapes have emerged as prominent issues. Existing studies primarily adopt a single-disciplinary perspective to analyze village spatial morphology and its influencing factors, relying heavily on qualitative analysis methods. These efforts are often constrained by the lack of digital infrastructure and insufficient data. To address these limitations, this paper proposes a Hierarchical Graph Neural Network (HGNN) model that integrates multi-source data to conduct an in-depth analysis of village spatial morphology. The framework includes two types of nodes (input nodes and communication nodes) and two types of edges (static input edges and dynamic communication edges). By combining Graph Convolutional Networks (GCN) and Graph Attention Networks (GAT), the proposed model efficiently integrates multimodal features under a two-stage feature update mechanism. Additionally, based on existing principles for classifying village spatial morphology, the paper introduces a relational pooling mechanism and implements a joint training strategy across 17 subtypes. Experimental results demonstrate that this method achieves significant performance improvements over existing approaches in multimodal fusion and classification tasks. Moreover, the proposed joint optimization of all subtypes lifts mean accuracy/F1 from 0.71/0.83 (independent models) to 0.82/0.90, driven by a 6% gain for parcel tasks. Our method provides scientific evidence for exploring village spatial patterns and generative logic.
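The abstract does not give the model's equations, so the following is only a rough sketch of what a two-stage update could look like. Stage one uses plain mean aggregation over static edges as a simplified stand-in for a GCN layer (no learned weights). Stage two uses dot-product softmax attention as a stand-in for GAT-style attention, letting a single communication node pool the input nodes. The function names `mean_aggregate` and `attention_pool`, the toy features, and the query vector are all hypothetical and do not come from the paper.

```python
import math


def mean_aggregate(features, edges):
    """Stage 1 (assumed): average each node with its neighbors over static,
    undirected edges. A self-loop is included, as in GCN-style layers."""
    neighbors = {i: [i] for i in range(len(features))}
    for u, v in edges:
        neighbors[u].append(v)
        neighbors[v].append(u)
    dim = len(features[0])
    return [
        [sum(features[j][d] for j in neighbors[i]) / len(neighbors[i])
         for d in range(dim)]
        for i in range(len(features))
    ]


def attention_pool(query, features):
    """Stage 2 (assumed): a communication node with state `query` attends
    over input nodes using softmax-normalized dot-product scores."""
    scores = [sum(q * f for q, f in zip(query, feat)) for feat in features]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    pooled = [
        sum(w * feat[d] for w, feat in zip(weights, features))
        for d in range(len(query))
    ]
    return pooled, weights


# Toy example: three input nodes, one static edge between nodes 0 and 1.
node_features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
static_edges = [(0, 1)]
updated = mean_aggregate(node_features, static_edges)
pooled, weights = attention_pool([1.0, 0.0], updated)
```

Because the attention weights are recomputed from the current node states on every pass, they behave like dynamic edges, while the edge list in stage one stays fixed.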

Advancing Urban Renewal: An Automated Approach to Generating Historical Arcade Facades with Stable Diffusion Models

Nov 20, 2023

Abstract: Urban renewal and transformation processes necessitate the preservation of the historical urban fabric, particularly in districts known for their architectural and historical significance. These regions, with their diverse architectural styles, have traditionally required extensive preliminary research, often leading to subjective results. However, the advent of machine learning models has opened up new avenues for generating building facade images. Despite this, creating high-quality images for historical district renovations remains challenging, due to the complexity and diversity inherent in such districts. In response to these challenges, our study introduces a new methodology for automatically generating images of historical arcade facades, utilizing Stable Diffusion models conditioned on textual descriptions. By classifying and tagging a variety of arcade styles, we have constructed several realistic arcade facade image datasets. We trained multiple low-rank adaptation (LoRA) models to control the stylistic aspects of the generated images, supplemented by ControlNet models for improved precision and authenticity. Our approach has demonstrated high levels of precision, authenticity, and diversity in the generated images, showing promising potential for real-world urban renewal projects. This new methodology offers a more efficient and accurate alternative to conventional design processes in urban renewal, bypassing issues of unconvincing image details, lack of precision, and limited stylistic variety. Future research could focus on integrating this two-dimensional image generation with three-dimensional modeling techniques, providing a more comprehensive solution for renovating architectural facades in historical districts.
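The abstract does not state the LoRA rank, scaling, or which layers were adapted, so the sketch below shows only the general low-rank update that LoRA relies on: a frozen weight matrix `W` is adjusted by a scaled product of two small matrices, `W + (alpha / r) * B A`. The helper names `matmul` and `lora_weight` and the toy values are hypothetical and are not taken from the paper.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def lora_weight(w, a, b, alpha):
    """Return W + (alpha / r) * (B @ A), where r is the number of rows of A.

    w: frozen base weight, shape (d_out, d_in)
    a: trainable down-projection, shape (r, d_in)
    b: trainable up-projection, shape (d_out, r)
    """
    rank = len(a)
    scale = alpha / rank
    delta = matmul(b, a)
    return [
        [w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
        for i in range(len(w))
    ]


# Toy example with d_out = d_in = 2 and rank r = 1 (illustrative values only).
base = [[1.0, 0.0], [0.0, 1.0]]
down = [[1.0, 2.0]]
up_zero = [[0.0], [0.0]]      # B starts at zero, so the base weight is unchanged
up_trained = [[1.0], [0.0]]   # a made-up "trained" value for illustration

unchanged = lora_weight(base, down, up_zero, alpha=2.0)
adapted = lora_weight(base, down, up_trained, alpha=2.0)
```

Only `A` and `B` are trained, which is why one small adapter per arcade style is cheap to store and swap compared with fine-tuning the whole model.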

Improving Facade Parsing with Vision Transformers and Line Integration

Oct 07, 2023

Abstract: Facade parsing stands as a pivotal computer vision task with far-reaching applications in areas like architecture, urban planning, and energy efficiency. Despite the recent success of deep learning-based methods in yielding impressive results on certain open-source datasets, their viability for real-world applications remains uncertain. Real-world scenarios are considerably more intricate and demand greater computational efficiency. Existing datasets often fall short in representing these settings, and previous methods frequently rely on extra models to enhance accuracy, which incurs substantial computational cost. In this paper, we introduce Comprehensive Facade Parsing (CFP), a dataset meticulously designed to encompass the intricacies of real-world facade parsing tasks. Comprising a total of 602 high-resolution street-view images, this dataset captures a diverse array of challenging scenarios, including sloping angles and densely clustered buildings, with painstakingly curated annotations for each image. We introduce a new pipeline known as Revision-based Transformer Facade Parsing (RTFP). This marks the pioneering utilization of Vision Transformers (ViT) in facade parsing, and our experimental results substantiate its merit. We also design Line Acquisition, Filtering, and Revision (LAFR), an efficient yet accurate revision algorithm that can improve the segmentation result solely from simple line detection using prior knowledge of the facade. Experiments on ECP 2011, RueMonge 2014, and our CFP dataset demonstrate the superiority of our method.
