Yunhai Xiao

Iterative Reweighted Framework Based Algorithms for Sparse Linear Regression with Generalized Elastic Net Penalty

Nov 22, 2024

Abstract: The elastic net penalty is frequently employed in high-dimensional statistics for parameter regression and variable selection. It is particularly beneficial compared to the lasso when the number of predictors greatly exceeds the number of observations. However, empirical evidence has shown that the $\ell_q$-norm penalty (where $0 < q < 1$) often yields better regression results than the $\ell_1$-norm penalty and is more robust in various scenarios. In this paper, we explore a generalized elastic net model that employs an $\ell_r$-norm (where $r \geq 1$) in the loss function to accommodate various types of noise, and an $\ell_q$-norm (where $0 < q < 1$) in place of the $\ell_1$-norm in the elastic net penalty. Theoretically, we establish computable lower bounds for the nonzero entries of the generalized first-order stationary points of the proposed model. For implementation, we develop two efficient algorithms based on a locally Lipschitz continuous $\epsilon$-approximation to the $\ell_q$-norm. The first algorithm employs the alternating direction method of multipliers (ADMM), while the second uses a proximal majorization-minimization method (PMM) whose subproblems are solved with the semismooth Newton method (SSN). We also perform extensive numerical experiments on both simulated and real data, showing that both algorithms perform well. Notably, PMM-SSN is more efficient than ADMM, even though the latter is simpler to implement.
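To make the model in this abstract concrete, here is a minimal sketch of one way such an objective and its reweighting step could look. The abstract does not give the formulas, so the specific forms below are assumptions: the loss is taken as $\|Ax - b\|_r^r$, the smoothed penalty as $\sum_i (|x_i| + \epsilon)^q$ (a common surrogate, not necessarily the paper's approximation), and the names `lq_eps`, `reweight`, and `objective` are hypothetical.

```python
def lq_eps(x, q, eps):
    """Smoothed surrogate sum_i (|x_i| + eps)^q for the l_q penalty (assumed form)."""
    return sum((abs(xi) + eps) ** q for xi in x)


def reweight(x, q, eps):
    """Weights q * (|x_i| + eps)^(q - 1), from linearizing the surrogate at x.

    In an iteratively reweighted scheme these weights would multiply |x_i|
    in the next (weighted l_1) subproblem; small entries get large weights.
    """
    return [q * (abs(xi) + eps) ** (q - 1) for xi in x]


def objective(A, b, x, r, q, lam1, lam2, eps):
    """Assumed objective: ||Ax - b||_r^r + lam1 * lq_eps(x) + lam2 * ||x||_2^2."""
    residual = [sum(a * xj for a, xj in zip(row, x)) - bi for row, bi in zip(A, b)]
    loss = sum(abs(t) ** r for t in residual)  # l_r data-fitting term
    ridge = sum(xi * xi for xi in x)           # ridge part of the elastic net
    return loss + lam1 * lq_eps(x, q, eps) + lam2 * ridge
```

With a small positive `eps`, the weights stay finite at zero entries, which is what lets a reweighted method iterate from a sparse point; with `eps = 0` the weight at a zero entry is undefined.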

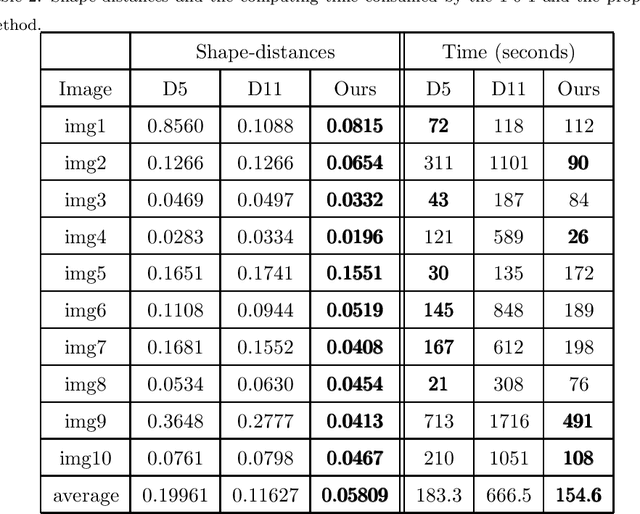

Multiple Convex Objects Image Segmentation via Proximal Alternating Direction Method of Multipliers

Mar 22, 2022

Abstract: This paper focuses on image segmentation with a convex shape prior. First, we use a binary function to represent the convex object(s). The convex shape prior turns out to be a simple quadratic inequality constraint on the binary indicator function associated with each object. We propose an image segmentation model that incorporates the convex shape prior into a probability-based method. Second, we design a new algorithm to solve the resulting optimization problem, which is challenging because of the quadratic inequality constraint. To tackle this difficulty, we relax and linearize the quadratic inequality constraint, reducing the problem to a sequence of convex minimization problems. For each convex problem, we develop an efficient proximal alternating direction method of multipliers. The convergence of the algorithm follows from existing results in the optimization literature. Moreover, an interactive procedure is introduced to gradually improve segmentation accuracy. Numerical experiments on natural and medical images demonstrate that the proposed method is superior to some existing methods in terms of segmentation accuracy and computational time.
