Yunfei Guo

ParkGaussian: Surround-view 3D Gaussian Splatting for Autonomous Parking

Jan 04, 2026

Abstract: Parking is a critical task for autonomous driving systems (ADS), with unique challenges in crowded parking slots and GPS-denied environments. However, existing works focus on 2D parking slot perception, mapping, and localization, while 3D reconstruction, which is crucial for capturing the complex spatial geometry of parking scenarios, remains underexplored. Moreover, naively improving the visual quality of reconstructed parking scenes does not directly benefit autonomous parking, because the key entry point for parking is the slot perception module. To address these limitations, we curate ParkRecon3D, the first benchmark specifically designed for parking scene reconstruction. It includes sensor data from four surround-view fisheye cameras with calibrated extrinsics, together with dense parking slot annotations. We then propose ParkGaussian, the first framework that integrates 3D Gaussian Splatting (3DGS) for parking scene reconstruction. To further improve the alignment between reconstruction and downstream parking slot detection, we introduce a slot-aware reconstruction strategy that leverages existing parking perception methods to enhance the synthesis quality of slot regions. Experiments on ParkRecon3D demonstrate that ParkGaussian achieves state-of-the-art reconstruction quality and better preserves perception consistency for downstream tasks. The code and dataset will be released at: https://github.com/wm-research/ParkGaussian
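The slot-aware strategy can be illustrated with a minimal sketch. The abstract does not specify the loss, so this assumes one plausible realization: a per-pixel L1 photometric loss that is upweighted inside parking-slot masks produced by an existing slot detector. The function name `slot_weighted_l1`, the mask input, and the `slot_weight` value are hypothetical and are not taken from ParkGaussian.

```python
import numpy as np


def slot_weighted_l1(rendered: np.ndarray, target: np.ndarray,
                     slot_mask: np.ndarray, slot_weight: float = 2.0) -> float:
    """Hypothetical slot-aware photometric loss (not ParkGaussian's actual loss).

    rendered, target: (H, W, 3) images in [0, 1].
    slot_mask: (H, W) boolean mask of pixels inside detected parking slots,
               assumed to come from an off-the-shelf slot detector.
    """
    # Per-pixel L1 error, averaged over the color channels -> (H, W).
    per_pixel = np.abs(rendered - target).mean(axis=-1)
    # Upweight pixels inside slot regions; all other pixels get weight 1.
    weights = np.where(slot_mask, slot_weight, 1.0)
    # Weighted mean, so the loss scale stays comparable across masks.
    return float((weights * per_pixel).sum() / weights.sum())
```

Setting `slot_weight=1.0` recovers a plain mean L1 loss, so the weight acts as a single knob trading overall image quality for fidelity in slot regions.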

Design and Control of a Low-cost Non-backdrivable End-effector Upper Limb Rehabilitation Device

Jun 20, 2024

Abstract: This paper presents the development of an upper-limb end-effector-based rehabilitation device for stroke patients that offers assistance or resistance along any two-dimensional trajectory during physical therapy. It employs a non-backdrivable ball-screw-driven mechanism for enhanced control accuracy. The control system features three novel algorithms. First, the Implicit Euler velocity control algorithm (IEVC) is highlighted for its state-of-the-art accuracy, stability, efficiency, and generalizability in motion restriction control. Second, an Admittance Virtual Dynamics simulation algorithm achieves smooth and natural human interaction with the non-backdrivable end-effector. Third, a generalized impedance force calculation algorithm allows efficient impedance control on any trajectory or area boundary. Experimental validation demonstrated the system's effectiveness in accurate end-effector position control across various trajectories and configurations. With its high performance and adaptability, the proposed upper-limb end-effector-based rehabilitation device holds significant promise for extensive clinical application, potentially improving rehabilitation outcomes for stroke patients.
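For readers unfamiliar with admittance control, the sketch below shows a generic virtual mass-damper, M dv/dt + B v = F, driven by the measured human force and integrated with backward (implicit) Euler. It is an assumption-laden illustration of the general technique, not the paper's IEVC or Admittance Virtual Dynamics algorithms; the class name, parameter values, and 2D force samples are hypothetical.

```python
import numpy as np
from dataclasses import dataclass


@dataclass
class AdmittanceModel:
    """Hypothetical virtual mass-damper: M * dv/dt + B * v = F_human."""
    mass: float     # virtual mass M [kg] (assumed value)
    damping: float  # virtual damping B [N*s/m] (assumed value)

    def step(self, v: np.ndarray, x: np.ndarray, force: np.ndarray, dt: float):
        # Backward (implicit) Euler: v_next = v + dt / M * (F - B * v_next).
        # Solving this linear equation for v_next gives a closed form that
        # stays stable for any dt > 0, unlike forward Euler.
        v_next = (self.mass * v + dt * force) / (self.mass + dt * self.damping)
        # Position follows from the new velocity; v_next is what a velocity
        # controller would command to the non-backdrivable actuator.
        x_next = x + dt * v_next
        return v_next, x_next


def simulate(model: AdmittanceModel, forces, dt: float):
    """Roll the virtual dynamics forward over a sequence of 2D force samples."""
    v = np.zeros(2)
    x = np.zeros(2)
    velocities = []
    for f in forces:
        v, x = model.step(v, x, np.asarray(f, dtype=float), dt)
        velocities.append(v)
    return np.array(velocities), x


# Usage: a constant 5 N push along x; velocity approaches F / B = 0.25 m/s.
model = AdmittanceModel(mass=2.0, damping=20.0)
vels, final_pos = simulate(model, [[5.0, 0.0]] * 500, dt=0.01)
print(vels[-1])
```

Solving the implicit update in closed form keeps the commanded velocity bounded and free of overshoot even for large time steps, which is one reason implicit integration is attractive when driving a non-backdrivable actuator from sensed force.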

Emotion recognition based on multi-modal electrophysiology multi-head attention Contrastive Learning

Jul 12, 2023

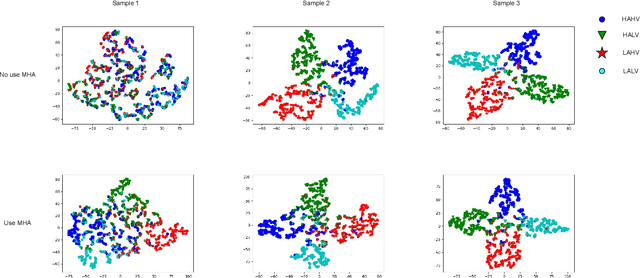

Abstract: Emotion recognition is an important research direction in artificial intelligence, helping machines understand and adapt to human emotional states. Multimodal electrophysiological (ME) signals, such as EEG, GSR, respiration (Resp), and temperature (Temp), are effective biomarkers of changes in human emotions. However, using electrophysiological signals for emotion recognition faces challenges such as data scarcity, inconsistent labeling, and difficulty in cross-individual generalization. To address these issues, we propose ME-MHACL, a self-supervised, contrastive-learning-based multimodal emotion recognition method that can learn meaningful feature representations from unlabeled electrophysiological signals and uses multi-head attention mechanisms for feature fusion to improve recognition performance. Our method has two stages: first, we use the Meiosis method to group samples and augment unlabeled electrophysiological signals, and design a self-supervised contrastive learning task; second, we apply the trained feature extractor to labeled electrophysiological signals and use multi-head attention mechanisms for feature fusion. We conducted experiments on two public datasets, DEAP and MAHNOB-HCI; our method outperformed existing benchmark methods in emotion recognition tasks and showed good cross-individual generalization ability.
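The self-supervised stage can be illustrated with a generic contrastive objective. The sketch below assumes a SimCLR-style NT-Xent loss over two augmented views of each sample; the paper's exact loss, its Meiosis grouping and augmentation, and its multi-head attention fusion are not reproduced here, and `nt_xent_loss` is a hypothetical name.

```python
import numpy as np


def nt_xent_loss(z1: np.ndarray, z2: np.ndarray, temperature: float = 0.5) -> float:
    """Generic NT-Xent loss (assumed, not necessarily ME-MHACL's objective).

    z1, z2: (N, D) embeddings of two augmented views; row i of z1 and
    row i of z2 form a positive pair, all other rows act as negatives.
    """
    n = z1.shape[0]
    z = np.concatenate([z1, z2], axis=0)              # (2N, D)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)  # L2-normalize -> cosine similarity
    sim = z @ z.T / temperature                       # (2N, 2N) scaled similarities
    np.fill_diagonal(sim, -np.inf)                    # never contrast a sample with itself
    # Index of each row's positive: i <-> i + N.
    pos = np.concatenate([np.arange(n, 2 * n), np.arange(0, n)])
    # Numerically stable row-wise log-softmax.
    row_max = sim.max(axis=1, keepdims=True)
    log_prob = sim - row_max - np.log(np.exp(sim - row_max).sum(axis=1, keepdims=True))
    # Average negative log-probability assigned to the true positive.
    return float(-log_prob[np.arange(2 * n), pos].mean())
```

Each embedding is pulled toward its paired view and pushed away from every other embedding in the batch, so the loss falls as matching views align.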
