Yuji Iwahori

Emotion-Guided Image to Music Generation

Oct 29, 2024

Abstract: Generating music from images can enhance various applications, including background music for photo slideshows, social media experiences, and video creation. This paper presents an emotion-guided image-to-music generation framework that leverages the Valence-Arousal (VA) emotional space to produce music that aligns with the emotional tone of a given image. Unlike previous models that rely on contrastive learning for emotional consistency, the proposed approach directly integrates a VA loss function to enable accurate emotional alignment. The model employs a CNN-Transformer architecture, featuring pre-trained CNN image feature extractors and three Transformer encoders to capture complex, high-level emotional features from MIDI music. Three Transformer decoders refine these features to generate musically and emotionally consistent MIDI sequences. Experimental results on a newly curated, emotionally paired image-MIDI dataset show that the proposed model outperforms the compared models on metrics such as Polyphony Rate, Pitch Entropy, and Groove Consistency, as well as in loss convergence.
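To make two of the quantities named in the abstract concrete, here is a minimal sketch. It assumes Pitch Entropy is the Shannon entropy of the MIDI pitch histogram (a common definition of that metric) and that the VA loss is a mean squared error between predicted and target (valence, arousal) pairs. The abstract does not specify the loss form, so `va_loss` is an illustrative assumption, and both function names are hypothetical.

```python
import math
from collections import Counter


def pitch_entropy(pitches):
    """Shannon entropy (in bits) of the distribution of MIDI pitch numbers."""
    if not pitches:
        return 0.0
    counts = Counter(pitches)
    total = len(pitches)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def va_loss(pred, target):
    """Assumed form of a VA loss: mean squared error over (valence, arousal).

    The paper may use a different formulation; this is only a sketch.
    """
    (pv, pa), (tv, ta) = pred, target
    return ((pv - tv) ** 2 + (pa - ta) ** 2) / 2.0
```

A sequence that repeats a single pitch has zero entropy, while one that uses four pitches equally often has an entropy of 2 bits.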

Extraction of Key-frames of Endoscopic Videos by using Depth Information

Jun 30, 2021

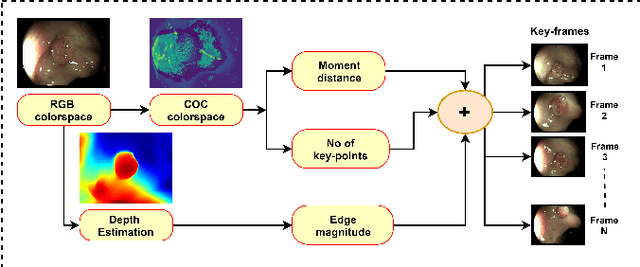

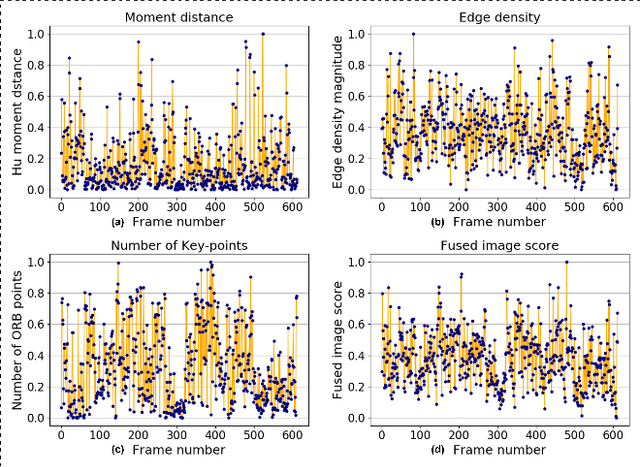

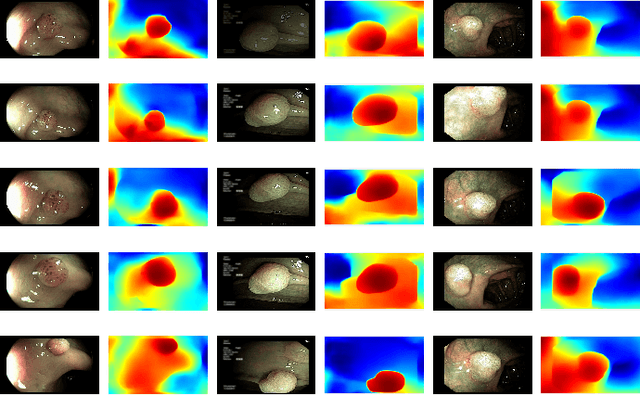

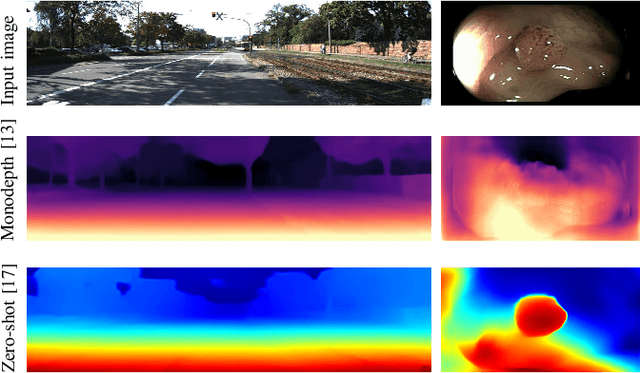

Abstract: A deep learning-based monocular depth estimation (MDE) technique is proposed for selecting the most informative frames (key frames) of an endoscopic video. In most cases, ground-truth depth maps of polyps are not readily available, which is why a transfer learning approach is adopted in our method. Endoscopic modalities generally capture thousands of frames. It is therefore important to discard low-quality and clinically irrelevant frames of an endoscopic video while retaining the most informative frames for clinical diagnosis. To this end, a key-frame selection strategy is proposed that uses the depth information of polyps. In our method, image moments, edge magnitude, and key-points are considered for adaptively selecting the key frames. One important application of the proposed method could be the 3D reconstruction of polyps from the extracted key frames. Polyps are also localized with the help of the extracted depth maps.
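The sketch below illustrates one of the cues mentioned in the abstract, edge magnitude, as a frame-scoring criterion. It is not the authors' method: the abstract does not say how the cues are combined or how the adaptive selection works. Here each frame (or depth map) is assumed to be a 2D list of grayscale values, and a frame is kept when its score is at least the mean score of the video, which is an assumed stand-in for the adaptive rule. The function names are hypothetical.

```python
import math


def edge_score(frame):
    """Mean gradient magnitude over the interior pixels of a 2D grayscale frame."""
    h, w = len(frame), len(frame[0])
    total, n = 0.0, 0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Central differences in the horizontal and vertical directions.
            gx = (frame[y][x + 1] - frame[y][x - 1]) / 2.0
            gy = (frame[y + 1][x] - frame[y - 1][x]) / 2.0
            total += math.hypot(gx, gy)
            n += 1
    return total / n if n else 0.0


def select_key_frames(frames):
    """Return indices of frames whose edge score reaches the video's mean score.

    The mean-score threshold is an assumption made for this sketch.
    """
    scores = [edge_score(f) for f in frames]
    if not scores:
        return []
    threshold = sum(scores) / len(scores)
    return [i for i, s in enumerate(scores) if s >= threshold]
```

A fuller version would presumably combine this score with image-moment and key-point cues computed on the estimated depth maps.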

Pseudo vs. True Defect Classification in Printed Circuits Boards using Wavelet Features

Oct 24, 2013

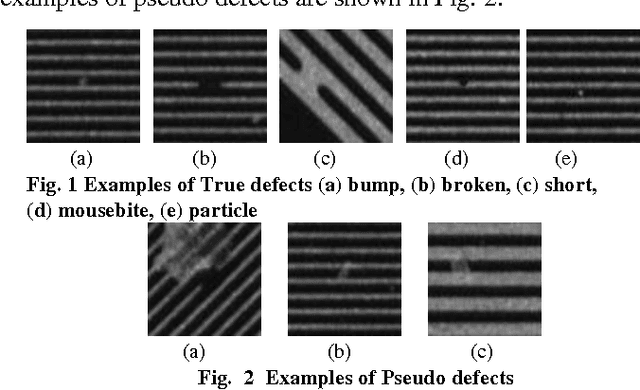

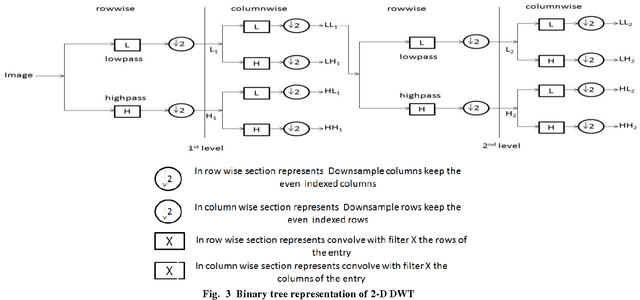

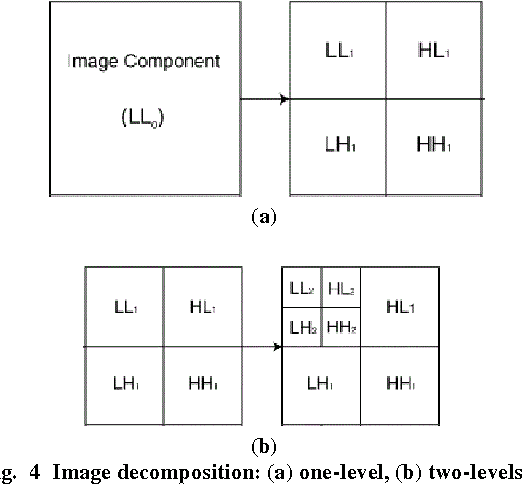

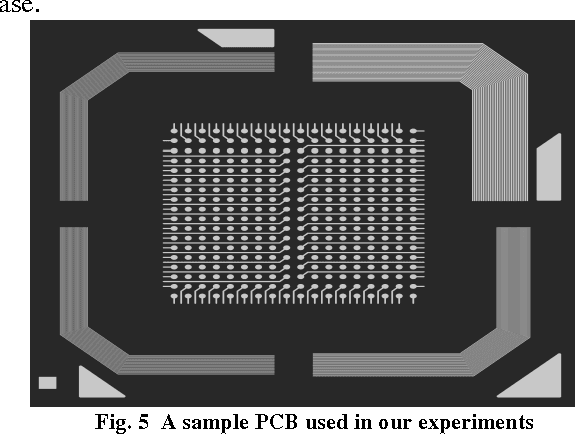

Abstract: In recent years, Printed Circuit Boards (PCBs) have become the backbone of a large number of consumer electronic devices, leading to a surge in their production. This has made it imperative to employ automatic inspection systems to identify manufacturing defects in PCBs before they are installed in their respective systems. An important task in this regard is the classification of defects as either true or pseudo defects, which decides whether the PCB is to be re-manufactured. This work proposes a novel approach to detect the most common defects in PCBs. The problem is approached by employing highly discriminative features based on a multi-scale wavelet transform, which are further boosted by using a kernelized version of the support vector machine (SVM). A real-world printed circuit board dataset has been used for quantitative analysis. Experimental results demonstrate the efficacy of the proposed method.
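As an illustration of what multi-scale wavelet features can look like, the following sketch computes sub-band energies from a 2D Haar decomposition. The choice of the Haar wavelet, the averaging normalization, the energy statistic, and the function names are all assumptions; the abstract does not state which wavelet or feature statistic the authors use.

```python
def haar_step(img):
    """One 2D Haar level on a 2D list; returns (approx, d_rows, d_cols, d_diag).

    Uses an averaging (non-orthonormal) normalization for simplicity.
    """
    approx, d_rows, d_cols, d_diag = [], [], [], []
    for y in range(0, len(img) - 1, 2):
        ra, rr, rc, rd = [], [], [], []
        for x in range(0, len(img[0]) - 1, 2):
            a, b = img[y][x], img[y][x + 1]
            c, d = img[y + 1][x], img[y + 1][x + 1]
            ra.append((a + b + c + d) / 4.0)  # local average
            rr.append((a + b - c - d) / 4.0)  # change between the two rows
            rc.append((a - b + c - d) / 4.0)  # change between the two columns
            rd.append((a - b - c + d) / 4.0)  # diagonal change
        approx.append(ra)
        d_rows.append(rr)
        d_cols.append(rc)
        d_diag.append(rd)
    return approx, d_rows, d_cols, d_diag


def energy(band):
    """Mean squared coefficient of a sub-band."""
    vals = [v for row in band for v in row]
    return sum(v * v for v in vals) / len(vals) if vals else 0.0


def wavelet_features(img, levels=2):
    """Concatenate detail sub-band energies over `levels` decomposition scales."""
    feats, current = [], img
    for _ in range(levels):
        if len(current) < 2 or len(current[0]) < 2:
            break
        current, d_rows, d_cols, d_diag = haar_step(current)
        feats.extend([energy(d_rows), energy(d_cols), energy(d_diag)])
    return feats
```

Feature vectors of this kind could then be passed to a kernel SVM, for example scikit-learn's `SVC(kernel="rbf")`, to separate true from pseudo defects.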
