Yui Noma

Markov Chain Monte Carlo for Arrangement of Hyperplanes in Locality-Sensitive Hashing

Mar 18, 2013

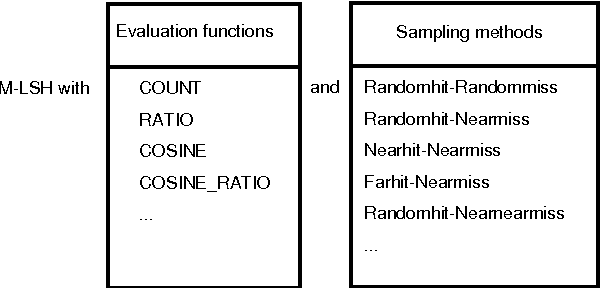

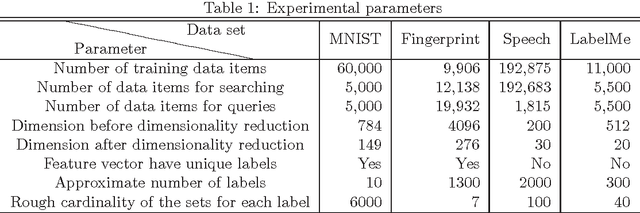

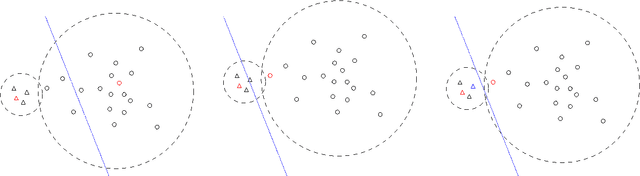

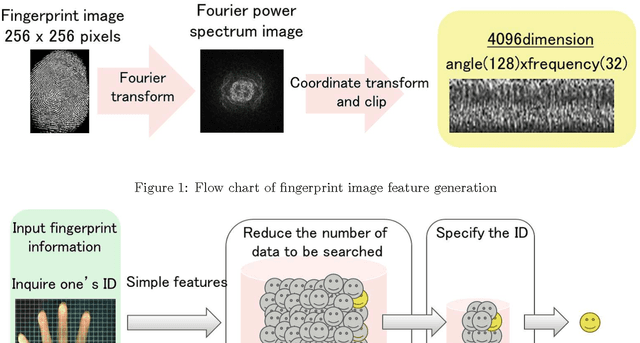

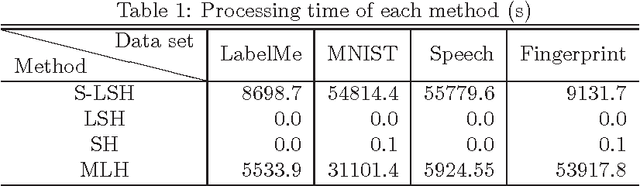

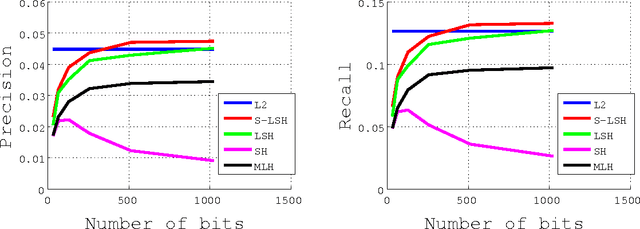

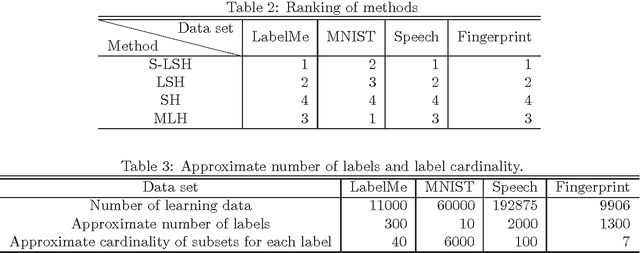

Abstract: Since Hamming distances can be calculated by bitwise computations, they can be calculated with less computational load than L2 distances. Similarity searches can therefore be performed faster in Hamming distance space. The elements of Hamming distance space are bit strings. An arrangement of hyperplanes, in turn, induces a transformation from feature vectors into feature bit strings. This transformation method is a type of locality-sensitive hashing that has been attracting attention as a way of performing approximate similarity searches at high speed. Supervised learning of hyperplane arrangements allows us to obtain a method that transforms higher-dimensional feature vectors into feature bit strings that reflect the label information attached to those vectors. In this paper, we propose a supervised learning method for hyperplane arrangements in feature space that uses a Markov chain Monte Carlo (MCMC) method. We consider the probability density functions used during learning, and evaluate their performance. We also consider the sampling method for the learning data pairs needed in learning, and we evaluate its performance. We confirm that the accuracy of this learning method, when using a suitable probability density function and sampling method, is greater than the accuracy of existing learning methods.
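To illustrate why Hamming distances are cheap, here is a minimal sketch that stores each bit string as a Python integer and computes the distance with one XOR followed by a bit count. The function names `hamming_distance` and `l2_distance_squared` are hypothetical and chosen for this example only; nothing here is taken from the paper's implementation.

```python
def hamming_distance(a: int, b: int) -> int:
    """Count the differing bits of two bit strings stored as integers."""
    # XOR leaves a 1 exactly where the two bit strings disagree.
    return bin(a ^ b).count("1")


def l2_distance_squared(x, y):
    """Squared L2 distance, shown only for comparison: it needs one
    subtraction and one multiplication per coordinate."""
    return sum((xi - yi) ** 2 for xi, yi in zip(x, y))


# Two 4-bit codes that differ in the first and last positions.
d = hamming_distance(0b1011, 0b0010)
```

The Hamming distance needs a single XOR and a bit count per pair of codes, whereas the L2 distance needs arithmetic on every coordinate of the original vectors.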

Hyperplane Arrangements and Locality-Sensitive Hashing with Lift

Dec 26, 2012

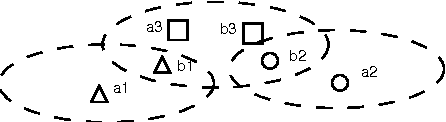

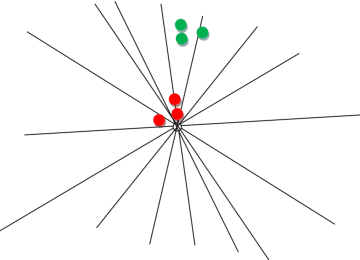

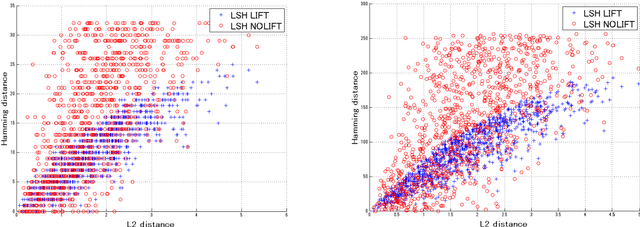

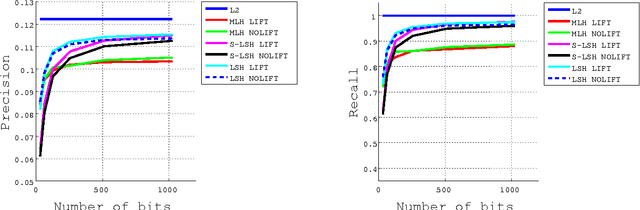

Abstract: Locality-sensitive hashing converts high-dimensional feature vectors, such as those of images and speech, into bit arrays and allows high-speed similarity calculation with the Hamming distance. There is a hashing scheme that maps feature vectors to bit arrays depending on the signs of the inner products between the feature vectors and the normal vectors of hyperplanes placed in the feature space. This hashing can be seen as a discretization of the feature space by hyperplanes. If labels for the data are given, one can determine the hyperplanes by using learning algorithms. However, many proposed learning methods do not consider the hyperplanes' offsets. Not doing so decreases the number of partitioned regions, and the correlation between Hamming distances and Euclidean distances becomes small. In this paper, we propose a lift map that converts learning algorithms that ignore the offsets into ones that take the offsets into account. With this method, learning methods without offsets give discretizations of the space as if they took the offsets into account. For the proposed method, we used several high-dimensional feature data sets as input and studied the relationship between the statistical characteristics of the data, the number of hyperplanes, and the effect of the proposed method.
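The sign-based hashing and the role of offsets can be sketched as follows. The abstract does not specify how the lift map is constructed, so this example assumes one simple possibility: appending a constant coordinate to each feature vector, so that a hyperplane through the origin in the lifted space acts like a hyperplane with an offset in the original space. The names `lift` and `sign_hash` and the constant `c` are hypothetical.

```python
def lift(x, c=1.0):
    """Assumed lift: append a constant coordinate c to the feature vector."""
    return list(x) + [c]


def sign_hash(x, normals):
    """Map x to a bit string (as an int): one bit per hyperplane,
    set to 1 when the inner product with its normal is non-negative."""
    bits = 0
    for w in normals:
        dot = sum(wi * xi for wi, xi in zip(w, x))
        bits = (bits << 1) | (1 if dot >= 0 else 0)
    return bits


# Without offsets, a hyperplane through the origin cannot separate 2 and 5.
no_offset = [[1.0]]
same_side = sign_hash([2.0], no_offset) == sign_hash([5.0], no_offset)

# After lifting, the normal (1, -3) tests x - 3 >= 0, i.e. an offset of 3.
lifted = [[1.0, -3.0]]
code_2 = sign_hash(lift([2.0]), lifted)
code_5 = sign_hash(lift([5.0]), lifted)
```

In this toy case the two points share a bit without offsets and receive different bits once lifted, which is the increase in partitioned regions that the abstract describes.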

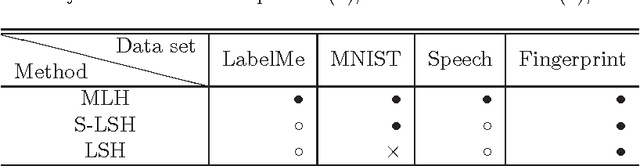

Locality-Sensitive Hashing with Margin Based Feature Selection

Oct 11, 2012

Abstract: We propose a learning method with feature selection for Locality-Sensitive Hashing. Locality-Sensitive Hashing converts feature vectors into bit arrays. These bit arrays can be used to perform similarity searches and personal authentication. The proposed method starts from bit arrays longer than those ultimately used for similarity and other searches, and selects the bits to keep by learning. We demonstrate that this method can effectively perform optimization for cases such as fingerprint images, which have a large number of labels and extremely few data sharing the same label, and we verify that it is also effective for natural images, handwritten digits, and speech features.
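The idea of generating more bits than needed and keeping only the useful ones can be sketched as below. The abstract does not give the margin criterion, so this example substitutes a deliberately simple stand-in score: for each bit, the fraction of different-label pairs it separates minus the fraction of same-label pairs it separates. The function `select_bits` and this score are assumptions for illustration, not the paper's method.

```python
from itertools import combinations


def select_bits(codes, labels, k):
    """Return the indices of the k bits with the highest stand-in score.

    codes  : list of equal-length bit lists (the long candidate codes)
    labels : one label per code
    """
    n_bits = len(codes[0])
    same = [0] * n_bits  # times a bit differs within a same-label pair
    diff = [0] * n_bits  # times a bit differs within a different-label pair
    n_same = n_diff = 0
    for i, j in combinations(range(len(codes)), 2):
        is_same = labels[i] == labels[j]
        if is_same:
            n_same += 1
        else:
            n_diff += 1
        for b in range(n_bits):
            if codes[i][b] != codes[j][b]:
                (same if is_same else diff)[b] += 1
    # High score: the bit splits different labels but rarely splits equal ones.
    scores = [diff[b] / max(n_diff, 1) - same[b] / max(n_same, 1)
              for b in range(n_bits)]
    ranked = sorted(range(n_bits), key=lambda b: scores[b], reverse=True)
    return sorted(ranked[:k])


codes = [[0, 0, 1], [0, 1, 1], [1, 0, 1], [1, 1, 1]]
labels = ["a", "a", "b", "b"]
kept = select_bits(codes, labels, k=1)
```

Here bit 0 follows the labels exactly, bit 1 varies within each label, and bit 2 is constant, so bit 0 is the one retained when `k=1`.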
