Yuhu Shi

Region-aware Spatiotemporal Modeling with Collaborative Domain Generalization for Cross-Subject EEG Emotion Recognition

Jan 22, 2026

Abstract: Cross-subject EEG-based emotion recognition (EER) remains challenging due to strong inter-subject variability, which induces substantial distribution shifts in EEG signals, as well as the high complexity of emotion-related neural representations in both spatial organization and temporal evolution. Existing approaches typically improve spatial modeling, temporal modeling, or generalization strategies in isolation, which limits their ability to align representations across subjects while capturing multi-scale dynamics and suppressing subject-specific bias within a unified framework. To address these gaps, we propose a Region-aware Spatiotemporal Modeling framework with Collaborative Domain Generalization (RSM-CoDG) for cross-subject EEG emotion recognition. RSM-CoDG incorporates neuroscience priors derived from functional brain region partitioning to construct region-level spatial representations, thereby improving cross-subject comparability. It also employs multi-scale temporal modeling to characterize the dynamic evolution of emotion-evoked neural activity. In addition, the framework employs a collaborative domain generalization strategy, incorporating multidimensional constraints to reduce subject-specific bias in a fully unseen target subject setting, which enhances the generalization to unknown individuals. Extensive experimental results on SEED series datasets demonstrate that RSM-CoDG consistently outperforms existing competing methods, providing an effective approach for improving robustness. The source code is available at https://github.com/RyanLi-X/RSM-CoDG.
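To make the idea of region-level spatial representations concrete, the following is a minimal sketch that averages per-channel feature vectors within each brain region. It is not the RSM-CoDG implementation: the `REGIONS` mapping, the channel names chosen, and the use of a plain mean are all assumptions made for illustration, since the abstract does not specify the partition or the pooling operator.

```python
from statistics import fmean

# Hypothetical channel-to-region grouping (assumption for illustration only;
# the partition actually used by RSM-CoDG is not given in the abstract).
REGIONS = {
    "frontal": ["Fp1", "Fp2", "Fz"],
    "temporal": ["T7", "T8"],
    "occipital": ["O1", "O2"],
}


def region_features(channel_features, regions=REGIONS):
    """Average per-channel feature vectors within each region.

    channel_features maps a channel name to a list of floats; all lists
    are assumed to have the same length. Mean pooling is an assumption.
    """
    pooled = {}
    for name, channels in regions.items():
        vectors = [channel_features[ch] for ch in channels]
        # zip(*vectors) walks the feature dimensions across the region's channels.
        pooled[name] = [fmean(values) for values in zip(*vectors)]
    return pooled


# Toy input: two features per channel.
example = {
    "Fp1": [1.0, 2.0], "Fp2": [3.0, 4.0], "Fz": [5.0, 6.0],
    "T7": [0.0, 2.0], "T8": [2.0, 0.0],
    "O1": [1.0, 1.0], "O2": [1.0, 3.0],
}
pooled_example = region_features(example)
```

Pooling by region yields one vector per region regardless of small per-subject differences between individual electrodes, which is one plausible reading of how region-level features could improve cross-subject comparability.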

RSNet: A Light Framework for The Detection of Multi-scale Remote Sensing Targets

Oct 30, 2024

Abstract: Recent developments in synthetic aperture radar (SAR) ship detection have seen deep learning techniques achieve remarkable progress in accuracy and speed. However, the detection of small targets against complex backgrounds remains a significant challenge. To tackle these difficulties, this letter presents RSNet, a lightweight framework aimed at enhancing ship detection capabilities in SAR imagery. RSNet features the Waveletpool-ContextGuided (WCG) backbone for enhanced accuracy with fewer parameters, and the Waveletpool-StarFusion (WSF) head for efficient parameter reduction. Additionally, a Lightweight-Shared (LS) module minimizes the detection head's parameter load. Experiments on the SAR Ship Detection Dataset (SSDD) and High-Resolution SAR Image Dataset (HRSID) demonstrate that RSNet achieves a strong balance between lightweight design and detection performance, surpassing many state-of-the-art detectors, reaching 72.5% and 67.6% in \(\mathrm{mAP}_{.50:95}\), respectively, with 1.49M parameters. Our code will be released soon.

A novel brain registration model combining structural and functional MRI information

Sep 26, 2024

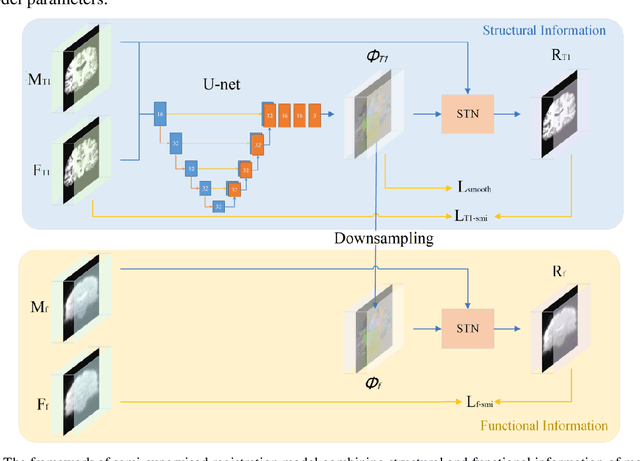

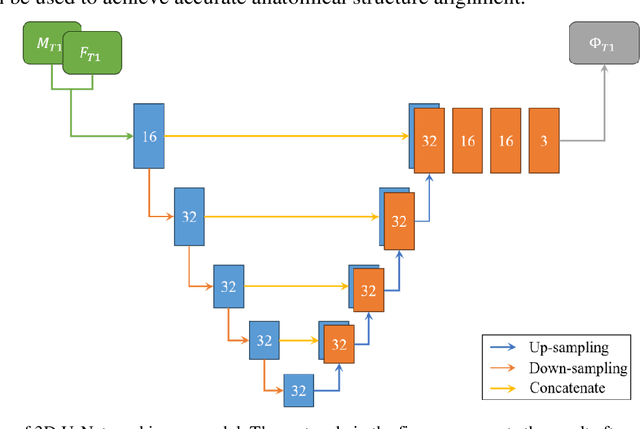

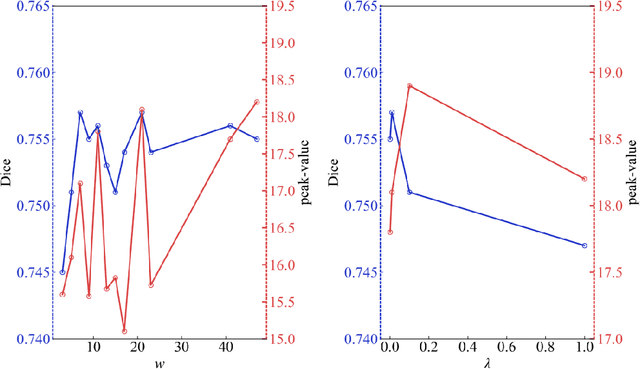

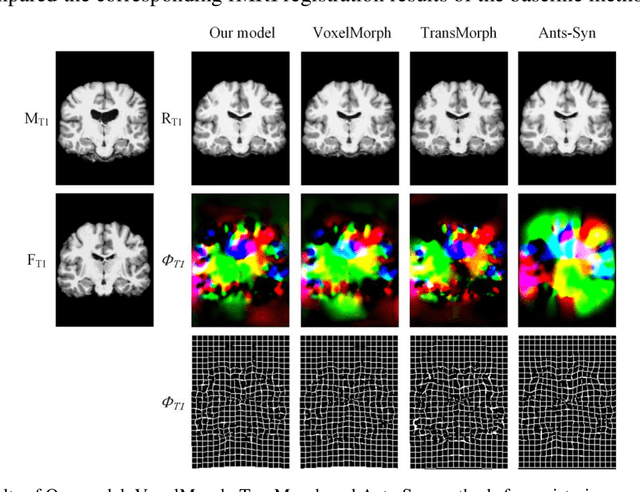

Abstract: Although deep learning-based registration algorithms for functional magnetic resonance imaging (fMRI) have achieved a certain degree of functional-area alignment, they underutilize fine structural information. In this paper, we propose a semi-supervised convolutional neural network (CNN) registration model that integrates both structural and functional MRI information. The model first learns to generate deformation fields by taking structural MRI (T1w-MRI) as input to the CNN, which captures fine structural information. We then construct a local functional connectivity pattern to describe the local fMRI information and use the Bhattacharyya coefficient to measure the similarity between two fMRI images; this similarity serves as a loss function to facilitate the alignment of functional areas. In the inter-subject registration experiment, the average number of voxels exceeding the threshold of 4.24 in the group-level t-test maps for the four functional brain networks (default mode network, visual network, central executive network, and sensorimotor network) is 2248 for our model. In the atlas-based registration experiment, the average number of voxels exceeding this threshold is 3620. Both values are the largest among all compared methods. Our model achieves excellent registration performance on fMRI and improves the consistency of functional regions. The proposed model has the potential to optimize fMRI image processing and analysis, facilitating the development of fMRI applications.
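For readers unfamiliar with the similarity measure named above, the Bhattacharyya coefficient between two discrete distributions p and q is BC(p, q) = sum_i sqrt(p_i * q_i); it equals 1 for identical distributions and 0 for distributions with no overlap. The sketch below computes it for two non-negative histograms. How the paper builds distributions from local functional connectivity patterns is not described in the abstract, so the histogram inputs, the function names, and the `1 - BC` loss form are assumptions for illustration only.

```python
import math


def bhattacharyya_coefficient(p, q):
    """Bhattacharyya coefficient of two non-negative histograms.

    Both inputs are normalized to sum to 1 before comparison.
    """
    if len(p) != len(q):
        raise ValueError("histograms must have the same number of bins")
    if any(v < 0 for v in p) or any(v < 0 for v in q):
        raise ValueError("histogram entries must be non-negative")
    total_p, total_q = sum(p), sum(q)
    if total_p == 0 or total_q == 0:
        raise ValueError("histograms must have positive total mass")
    return sum(
        math.sqrt((a / total_p) * (b / total_q)) for a, b in zip(p, q)
    )


def bhattacharyya_loss(p, q):
    """Hypothetical dissimilarity: 0 when the histograms match exactly."""
    return 1.0 - bhattacharyya_coefficient(p, q)


same = bhattacharyya_coefficient([2, 6, 2], [1, 3, 1])   # same shape after normalization
disjoint = bhattacharyya_coefficient([1, 0], [0, 1])     # no shared mass
partial = bhattacharyya_coefficient([1, 1], [1, 0])      # half of p overlaps q
```

Because the coefficient is bounded between 0 and 1, a loss of the form `1 - BC` decreases as the two distributions overlap more, which is consistent with using the similarity to drive functional alignment.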
