Yue Xia

Perfect Privacy for Discriminator-Based Byzantine-Resilient Federated Learning

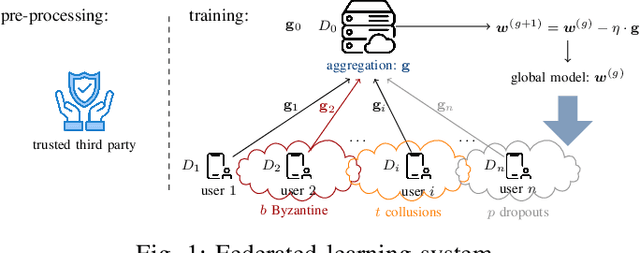

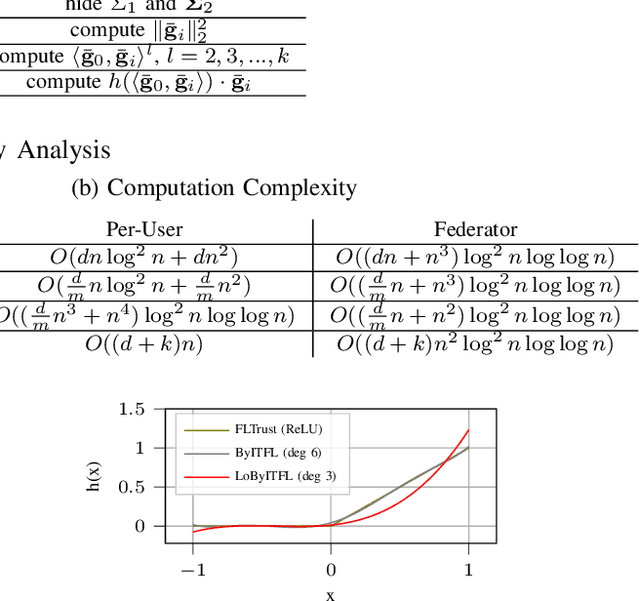

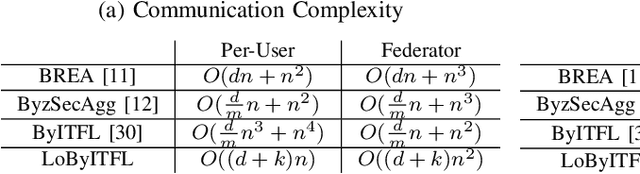

Jun 16, 2025

Abstract: Federated learning (FL) shows great promise in large-scale machine learning but introduces new privacy and security challenges. We propose ByITFL and LoByITFL, two novel FL schemes that enhance resilience against Byzantine users while keeping the users' data private from eavesdroppers. To ensure privacy and Byzantine resilience, our schemes build on having a small representative dataset available to the federator and crafting a discriminator function allowing the mitigation of corrupt users' contributions. ByITFL employs Lagrange coded computing and re-randomization, making it the first Byzantine-resilient FL scheme with perfect Information-Theoretic (IT) privacy, though at the cost of a significant communication overhead. LoByITFL, on the other hand, achieves Byzantine resilience and IT privacy at a significantly reduced communication cost, but requires a Trusted Third Party, used only in a one-time initialization phase before training. We provide theoretical guarantees on privacy and Byzantine resilience, along with convergence guarantees and experimental results validating our findings.

LoByITFL: Low Communication Secure and Private Federated Learning

May 29, 2024

Abstract: Federated Learning (FL) faces several challenges, such as the privacy of the clients' data and security against Byzantine clients. Existing works treating privacy and security jointly make sacrifices on the privacy guarantee. In this work, we introduce LoByITFL, the first communication-efficient Information-Theoretic (IT) private and secure FL scheme that makes no sacrifices on the privacy guarantees while ensuring security against Byzantine adversaries. The key ingredients are a small and representative dataset available to the federator, a careful transformation of the FLTrust algorithm, and the use of a trusted third party only in a one-time preprocessing phase before the start of the learning algorithm. We provide theoretical guarantees on privacy and Byzantine resilience, along with convergence guarantees and experimental results validating our theoretical findings.

Byzantine-Resilient Secure Aggregation for Federated Learning Without Privacy Compromises

May 14, 2024

Abstract: Federated learning (FL) shows great promise in large-scale machine learning, but brings new risks in terms of privacy and security. We propose ByITFL, a novel scheme for FL that provides resilience against Byzantine users while keeping the users' data private from the federator and from other users. The scheme builds on the preexisting non-private FLTrust scheme, which tolerates malicious users through trust scores (TS) that attenuate or amplify the users' gradients. The trust scores are based on the ReLU function, which we approximate by a polynomial. The distributed and privacy-preserving computation in ByITFL is designed using a combination of Lagrange coded computing, verifiable secret sharing and re-randomization steps. ByITFL is the first Byzantine-resilient scheme for FL with full information-theoretic privacy.
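To make the trust-score idea concrete, the following is a minimal, non-private sketch of an FLTrust-style aggregation in which the ReLU can be swapped for a polynomial. It is not the ByITFL protocol: there is no secret sharing, coded computing, or re-randomization here. The function names (`relu_poly`, `aggregate`) are hypothetical, and the degree-2 polynomial is simply the least-squares fit of ReLU on [-1, 1], chosen for illustration; the abstract does not specify which polynomial or degree the scheme actually uses.

```python
import math

def relu(x):
    return max(0.0, x)

def relu_poly(x):
    # Illustrative assumption: degree-2 least-squares fit of ReLU on [-1, 1],
    # i.e. 3/32 + x/2 + (15/32) x^2. Not necessarily the paper's polynomial.
    return 3 / 32 + 0.5 * x + (15 / 32) * x * x

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    return math.sqrt(dot(u, u))

def aggregate(user_grads, root_grad, score_fn=relu):
    """FLTrust-style aggregation: weight each user gradient by a trust score
    computed from its cosine similarity to the federator's root gradient,
    after rescaling it to the root gradient's norm."""
    root_norm = norm(root_grad)
    agg = [0.0] * len(root_grad)
    total = 0.0
    for g in user_grads:
        g_norm = norm(g)
        if g_norm == 0.0 or root_norm == 0.0:
            continue  # cosine similarity undefined; skip this contribution
        cos = dot(g, root_grad) / (g_norm * root_norm)
        ts = score_fn(cos)               # trust score
        scale = ts * root_norm / g_norm  # trust score times norm rescaling
        agg = [a + scale * x for a, x in zip(agg, g)]
        total += ts
    if total == 0.0:
        return [0.0] * len(root_grad)
    return [a / total for a in agg]

root = [1.0, 0.0]                    # gradient on the federator's root dataset
users = [[2.0, 0.0], [-5.0, 0.0]]    # one aligned user, one opposing user
exact = aggregate(users, root, relu)
approx = aggregate(users, root, relu_poly)
```

With the exact ReLU, the opposing gradient receives a trust score of zero and is removed entirely. With the polynomial, it receives a small positive score (here `relu_poly(-1) = 1/16`), so the approximation trades some attenuation accuracy for a form that can be evaluated with additions and multiplications only.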
