Yubo Shi

Transform-Based Feature Map Compression for CNN Inference

Jun 24, 2021

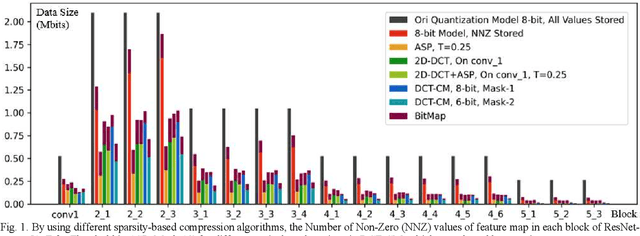

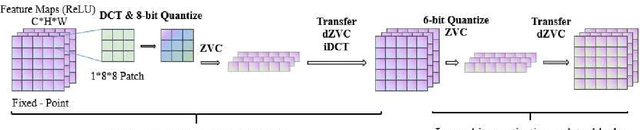

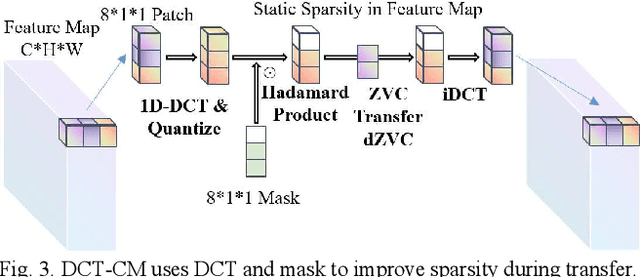

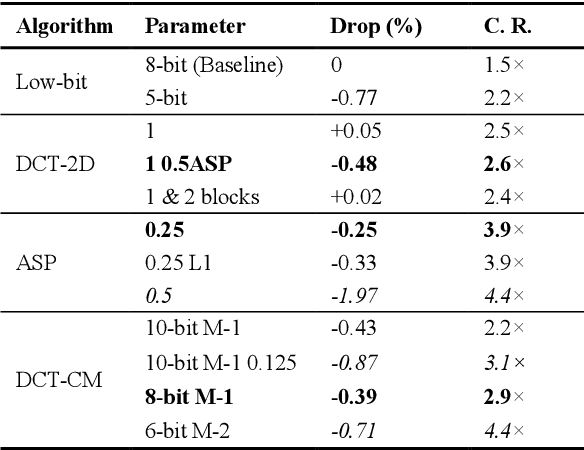

Abstract: To achieve higher accuracy in machine learning tasks, very deep convolutional neural networks (CNNs) have recently been designed. However, the large volume of memory accesses in deep CNNs leads to high power consumption. A variety of hardware-friendly compression methods have been proposed to reduce data transfer bandwidth by exploiting the sparsity of feature maps. Most of them focus on designing a specialized encoding format to increase the compression ratio. In contrast, we observe and exploit the difference in sparsity between activations in earlier and later layers to improve the compression ratio. We propose a novel hardware-friendly transform-based method named 1D-Discrete Cosine Transform on Channel dimension with Masks (DCT-CM), which combines DCT, masks, and a coding format to compress activations. The proposed algorithm achieves an average compression ratio of 2.9x (53% higher than state-of-the-art transform-based feature map compression works) during inference on ResNet-50 with an 8-bit quantization scheme.
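The abstract names the ingredients (a 1D DCT along the channel dimension, plus masks) without giving details, so the following is only a minimal illustrative sketch of that general idea, not the authors' DCT-CM implementation. The function names, the magnitude threshold used to build the mask, and the `(C, H, W)` layout are all assumptions introduced here; the paper's coding format and quantization are not modeled.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II basis as an (n, n) matrix (rows are frequencies)."""
    k = np.arange(n)[:, None]   # frequency index
    i = np.arange(n)[None, :]   # sample index
    m = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    m[0, :] = np.sqrt(1.0 / n)  # DC row uses a different scale factor
    return m

def compress_channels(fmap, threshold):
    """Hypothetical helper: DCT over channels, then mask small coefficients.

    fmap has shape (C, H, W). Returns a boolean mask of shape (C, H, W)
    and a 1D array holding only the coefficients the mask keeps.
    The thresholding rule is an assumption, not taken from the paper.
    """
    d = dct_matrix(fmap.shape[0])
    # Contract the channel axis: one length-C DCT per spatial position.
    coeffs = np.tensordot(d, fmap, axes=([1], [0]))
    mask = np.abs(coeffs) > threshold
    return mask, coeffs[mask]

def decompress_channels(mask, values):
    """Scatter kept coefficients back and apply the inverse (transposed) DCT."""
    coeffs = np.zeros(mask.shape)
    coeffs[mask] = values
    d = dct_matrix(mask.shape[0])
    return np.tensordot(d.T, coeffs, axes=([1], [0]))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # ReLU-like activations: non-negative with many zeros.
    fmap = np.maximum(rng.standard_normal((16, 4, 4)), 0.0)
    mask, values = compress_channels(fmap, threshold=0.1)
    restored = decompress_channels(mask, values)
    print("kept coefficients:", values.size, "of", fmap.size)
    print("max reconstruction error:", np.abs(restored - fmap).max())
```

With a threshold of zero the round trip is lossless up to floating-point error; raising the threshold trades reconstruction error for fewer stored coefficients, which is the kind of trade-off a mask-based scheme has to manage.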
