Young-Seok Kweon

Neurophysiological Characteristics of Adaptive Reasoning for Creative Problem-Solving Strategy

Nov 11, 2025

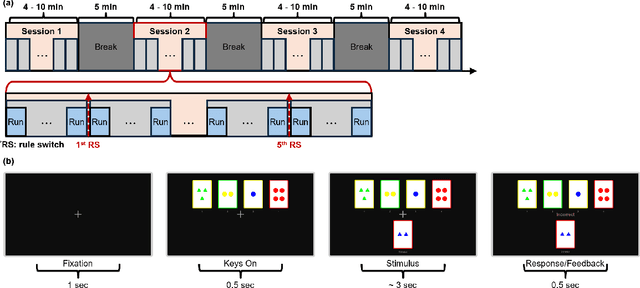

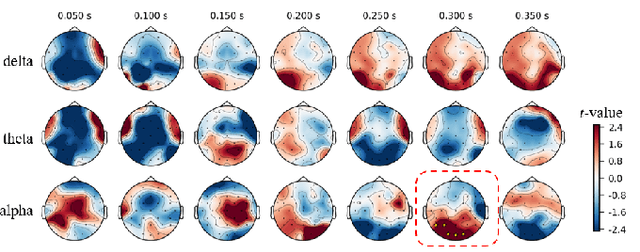

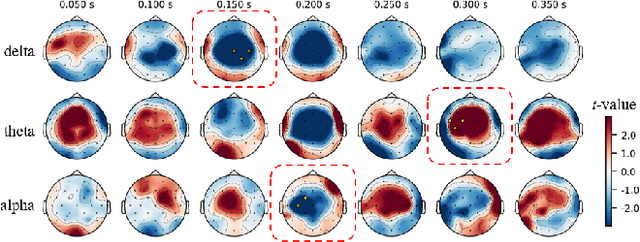

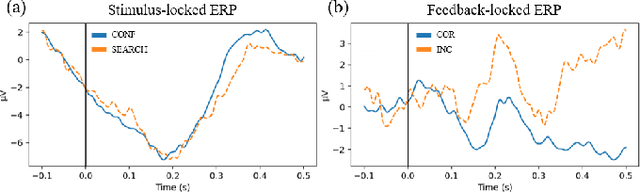

Abstract: Adaptive reasoning enables humans to flexibly adjust inference strategies when environmental rules or contexts change, yet its underlying neural dynamics remain unclear. This study investigated the neurophysiological mechanisms of adaptive reasoning using a card-sorting paradigm combined with electroencephalography and compared human performance with that of a multimodal large language model. Stimulus- and feedback-locked analyses revealed coordinated delta-theta-alpha dynamics: early delta-theta activity reflected exploratory monitoring and rule inference, whereas occipital alpha engagement indicated confirmatory stabilization of attention after successful rule identification. In contrast, the multimodal large language model exhibited only short-term feedback-driven adjustments without hierarchical rule abstraction or genuine adaptive reasoning. These findings identify the neural signatures of human adaptive reasoning and highlight the need for brain-inspired artificial intelligence that incorporates oscillatory feedback coordination for true context-sensitive adaptation.

Multi-Signal Reconstruction Using Masked Autoencoder From EEG During Polysomnography

Nov 14, 2023

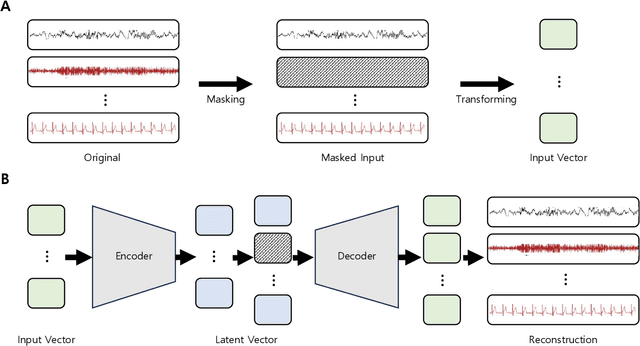

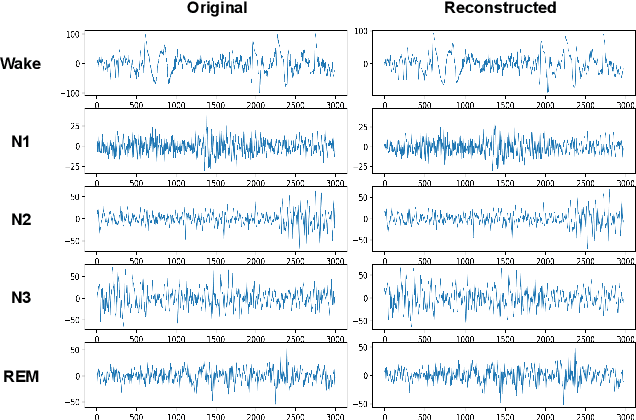

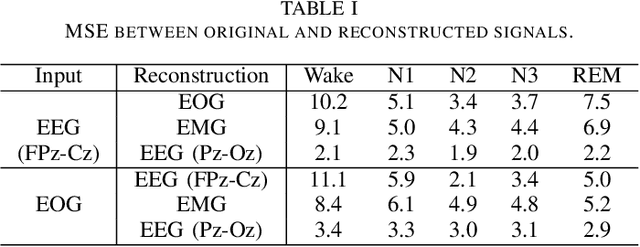

Abstract: Polysomnography (PSG) is an indispensable diagnostic tool in sleep medicine, essential for identifying various sleep disorders. By capturing physiological signals, including EEG, EOG, EMG, and cardiorespiratory metrics, PSG characterizes a patient's sleep architecture. However, its dependency on complex equipment and expertise confines its use to specialized clinical settings. To address these limitations, our study aims to perform PSG with a system that requires only a single EEG measurement. We propose a novel system capable of reconstructing multi-signal PSG from a single-channel EEG based on a masked autoencoder. The masked autoencoder was trained and evaluated using the Sleep-EDF-20 dataset, with mean squared error as the metric for assessing the similarity between original and reconstructed signals. The model demonstrated proficiency in reconstructing multi-signal data. Our results show promise for the development of more accessible, long-term sleep monitoring systems and suggest that PSG's applicability can be expanded beyond the confines of clinics.
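The two ingredients named in the abstract, masking and mean squared error, can be illustrated with a minimal sketch. The abstract does not describe the masking scheme, so the patch length, mask ratio, zero-filling, and the function names `mask_patches` and `mse` are all assumptions made for illustration, not the authors' implementation.

```python
import random


def mask_patches(signal, patch_len, mask_ratio, seed=0):
    """Zero out a random subset of fixed-length patches (hypothetical scheme).

    Returns the masked copy and the sorted indices of the masked patches.
    """
    n_patches = len(signal) // patch_len
    n_masked = int(n_patches * mask_ratio)
    rng = random.Random(seed)
    masked_ids = sorted(rng.sample(range(n_patches), n_masked))
    masked = list(signal)
    for p in masked_ids:
        for i in range(p * patch_len, (p + 1) * patch_len):
            masked[i] = 0.0
    return masked, masked_ids


def mse(original, reconstructed):
    """Mean squared error between two equal-length signals."""
    if len(original) != len(reconstructed):
        raise ValueError("signals must have the same length")
    return sum((a - b) ** 2 for a, b in zip(original, reconstructed)) / len(original)


# Toy example: mask half of the patches in a constant signal and score the
# masked signal against the original, as if it were a reconstruction.
signal = [1.0] * 20
masked, masked_ids = mask_patches(signal, patch_len=5, mask_ratio=0.5)
error = mse(signal, masked)
```

In an actual masked autoencoder the masked positions would be filled in by a learned decoder, and the error would be computed against the decoder output rather than the zero-filled input.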

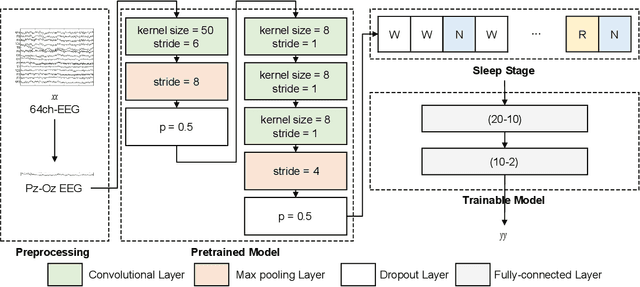

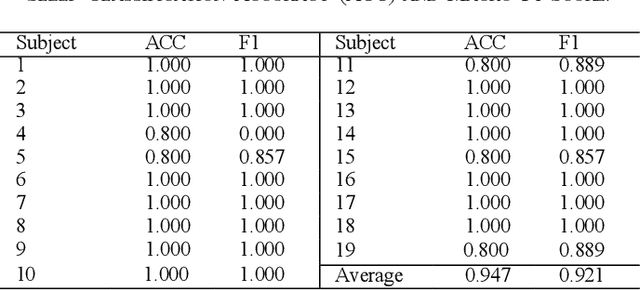

Development of Personalized Sleep Induction System based on Mental States

Dec 12, 2022

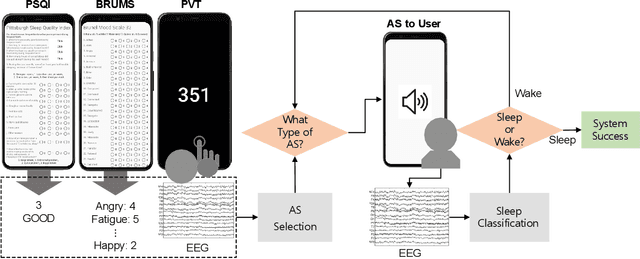

Abstract: Sleep is essential for preventing declines in cognitive, motor, and emotional performance, as well as various diseases. However, it is not easy to fall asleep at will. Sleep is disturbed by various factors, such as the COVID-19 situation, outside noise, and light during the night. We aim to develop a personalized sleep induction system based on mental states using electroencephalogram and auditory stimulation. Our system analyzes users' mental states using an electroencephalogram and the results of the Pittsburgh sleep quality index and Brunel mood scale. According to the mental state, the system plays a sleep induction sound chosen from five auditory stimuli: white noise, repetitive beep sounds, rain sounds, binaural beats, and a sham sound. Finally, the sleep-inducing system classified the sleep stage of participants with 94.7 percent and stopped auditory stimulation if participants showed non-rapid eye movement sleep. With our system, 18 of 20 participants fell asleep.

Automatic Micro-sleep Detection under Car-driving Simulation Environment using Night-sleep EEG

Dec 10, 2020

Abstract: A micro-sleep is a short sleep that lasts from 1 to 30 seconds. Detecting it during driving is crucial for preventing accidents that could claim many lives. Electroencephalogram (EEG) is suitable for detecting micro-sleep because EEG is associated with consciousness and sleep. Deep learning has shown great performance in recognizing brain states, but it requires sufficient data. However, collecting micro-sleep data during driving is inefficient and carries a high risk of poor data quality due to noisy driving situations. Night-sleep data at home is easier to collect than micro-sleep data during driving. Therefore, we proposed a deep learning approach using night-sleep EEG to improve the performance of micro-sleep detection. We pre-trained the U-Net to classify the 5-class sleep stages using night-sleep EEG and used the sleep stages estimated by the U-Net to detect micro-sleep during driving. This improved micro-sleep detection performance by about 30% compared to the traditional approach. Our approach was based on the hypothesis that micro-sleep corresponds to the early stage of non-rapid eye movement (NREM) sleep. We analyzed EEG distribution during night-sleep and micro-sleep and found that micro-sleep has a similar distribution to NREM sleep. Our results suggest a possible similarity between micro-sleep and the early stage of NREM sleep and may help prevent micro-sleep during driving.
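The abstract does not say how the estimated sleep stages are turned into micro-sleep detections. One plausible rule, sketched below purely as an assumption, is to flag any run of early-NREM predictions whose duration falls within the 1 to 30 second range given in the definition above. The function name `find_microsleep`, the label `"N1"`, and the label spacing are hypothetical.

```python
def find_microsleep(stages, seconds_per_label, sleep_labels=("N1",),
                    min_s=1.0, max_s=30.0):
    """Return (start_s, end_s) for runs of sleep-like labels lasting min_s..max_s.

    `stages` is a sequence of per-window stage labels (assumed to come from a
    sleep-stage classifier); `seconds_per_label` is the spacing between labels.
    """
    events = []
    start = None
    # A trailing None sentinel closes a run that reaches the end of the input.
    for i, stage in enumerate(list(stages) + [None]):
        is_sleep = stage in sleep_labels
        if is_sleep and start is None:
            start = i
        elif not is_sleep and start is not None:
            duration = (i - start) * seconds_per_label
            if min_s <= duration <= max_s:
                events.append((start * seconds_per_label, i * seconds_per_label))
            start = None
    return events


# Toy example with one label every 0.5 s: a 1.5 s run is kept,
# a 0.5 s run is too short to count.
stages = ["W"] * 4 + ["N1"] * 3 + ["W"] * 2 + ["N1"] * 1
events = find_microsleep(stages, seconds_per_label=0.5)
```

Runs longer than 30 seconds are dropped as well, since under the stated definition they would no longer count as micro-sleep.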

Predicting the Transition from Short-term to Long-term Memory based on Deep Neural Network

Dec 07, 2020

Abstract: Memory is an essential element in people's daily life based on experience. So far, many studies have analyzed electroencephalogram (EEG) signals at encoding to predict later remembered items, but few studies have predicted long-term memory using only the EEG signals of successful short-term memory. Therefore, we aim to predict long-term memory using deep neural networks. Specifically, the spectral power of the EEG signals of remembered items in short-term memory was calculated and fed to multilayer perceptron (MLP) and convolutional neural network (CNN) classifiers to predict long-term memory. Seventeen participants performed a visuo-spatial memory task consisting of picture and location memory in the order of encoding, immediate retrieval (short-term memory), and delayed retrieval (long-term memory). We applied leave-one-subject-out cross-validation to evaluate the predictive models. As a result, picture memory showed the highest kappa value of 0.19 with the CNN, and location memory showed the highest kappa value of 0.32 with the MLP. These results showed that long-term memory can be predicted from EEG signals measured during short-term memory, which may help improve learning efficiency and support people with memory and cognitive impairments.
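The evaluation protocol mentioned above can be sketched in a few lines: leave-one-subject-out cross-validation holds out all trials of one participant per fold, and Cohen's kappa measures agreement between predicted and true labels after correcting for chance. The function names and the toy labels below are illustrative assumptions; the study's classifiers and data are not reproduced.

```python
from collections import Counter


def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for each subject."""
    for held_out in sorted(set(subject_ids)):
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test


def cohen_kappa(y_true, y_pred):
    """Cohen's kappa: (observed - expected agreement) / (1 - expected agreement)."""
    n = len(y_true)
    observed = sum(t == p for t, p in zip(y_true, y_pred)) / n
    true_counts = Counter(y_true)
    pred_counts = Counter(y_pred)
    expected = sum(true_counts[c] * pred_counts[c] for c in true_counts) / (n * n)
    if expected == 1.0:
        # Kappa is undefined when chance agreement is perfect;
        # this sketch returns 0.0 in that case.
        return 0.0
    return (observed - expected) / (1.0 - expected)


# Toy example: four trials from three hypothetical subjects.
subjects = ["s1", "s1", "s2", "s3"]
folds = list(leave_one_subject_out(subjects))

# Predictions that agree with the labels only at chance level.
kappa = cohen_kappa([0, 0, 1, 1], [0, 1, 0, 1])
```

A kappa of 0 corresponds to chance-level agreement and 1 to perfect agreement, which gives a scale for reading the reported values of 0.19 and 0.32.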
