Yongshuai Wang

FViT: A Focal Vision Transformer with Gabor Filter

Feb 27, 2024

Abstract: Vision transformers have achieved encouraging progress in various computer vision tasks. A common belief is that this progress is attributable to the ability of self-attention to model global dependencies among feature tokens. Unfortunately, self-attention still faces challenges in dense prediction tasks, such as high computational complexity and the absence of a desirable inductive bias. To address these issues, we revisit the potential benefits of integrating vision transformers with Gabor filters, and propose a Learnable Gabor Filter (LGF) implemented with convolution. As an alternative to self-attention, we employ the LGF to simulate the response of simple cells in the biological visual system to input images, prompting models to focus on discriminative feature representations of targets at various scales and orientations. Additionally, we design a Bionic Focal Vision (BFV) block based on the LGF. This block draws inspiration from neuroscience and introduces a Multi-Path Feed Forward Network (MPFFN) to emulate the way the biological visual cortex processes information in parallel. Furthermore, we develop a unified and efficient family of pyramid backbone networks called Focal Vision Transformers (FViTs) by stacking BFV blocks. Experimental results show that FViTs exhibit highly competitive performance in various vision tasks. In particular, FViTs show significant advantages over their counterparts in computational efficiency and scalability. Code is available at https://github.com/nkusyl/FViT
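To make the idea of a Gabor filter applied by convolution concrete, the following is a minimal NumPy sketch of a fixed bank of standard 2D Gabor kernels at several orientations. It is not the authors' LGF: the function names (`gabor_kernel`, `gabor_bank`, `filter_image`) and all default parameter values are assumptions chosen for illustration. In a learnable variant, parameters such as `sigma`, `theta`, and `lambd` would presumably be trained rather than fixed.

```python
import numpy as np

def gabor_kernel(size, sigma, theta, lambd, gamma=0.5, psi=0.0):
    """Real part of a standard 2D Gabor kernel (size must be odd)."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(np.float64)
    # Rotate the coordinate frame by the orientation theta.
    x_r = x * np.cos(theta) + y * np.sin(theta)
    y_r = -x * np.sin(theta) + y * np.cos(theta)
    # Gaussian envelope (aspect ratio gamma) times a cosine carrier.
    envelope = np.exp(-(x_r ** 2 + (gamma * y_r) ** 2) / (2.0 * sigma ** 2))
    carrier = np.cos(2.0 * np.pi * x_r / lambd + psi)
    return envelope * carrier

def gabor_bank(size=7, sigma=2.0, lambd=4.0, n_orientations=4):
    """Stack kernels at evenly spaced orientations in [0, pi)."""
    thetas = np.arange(n_orientations) * np.pi / n_orientations
    return np.stack([gabor_kernel(size, sigma, t, lambd) for t in thetas])

def filter_image(image, bank):
    """Apply each kernel to a 2D image ('valid' cross-correlation)."""
    k = bank.shape[-1]
    windows = np.lib.stride_tricks.sliding_window_view(image, (k, k))
    # windows: (H-k+1, W-k+1, k, k); bank: (O, k, k) -> output: (O, H-k+1, W-k+1)
    return np.einsum("ijkl,okl->oij", windows, bank)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    responses = filter_image(rng.random((16, 16)), gabor_bank())
    print(responses.shape)
```

Each output channel responds most strongly to edges and textures aligned with its kernel's orientation, which is the property the abstract associates with simple cells.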

EViT: An Eagle Vision Transformer with Bi-Fovea Self-Attention

Oct 22, 2023

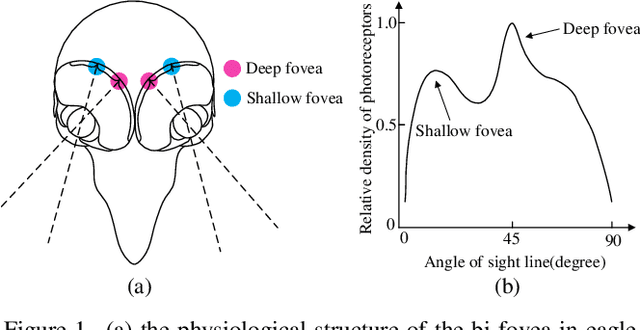

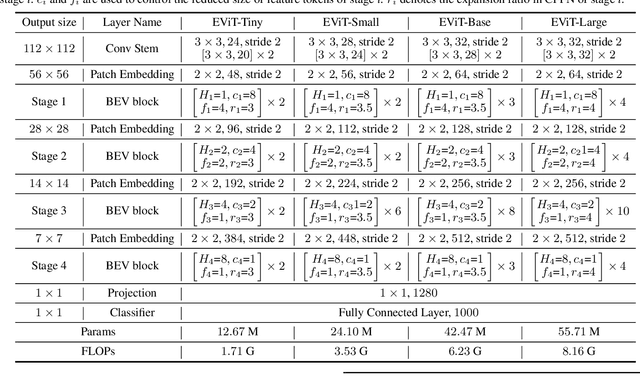

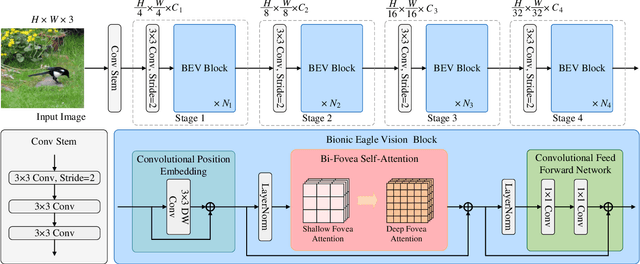

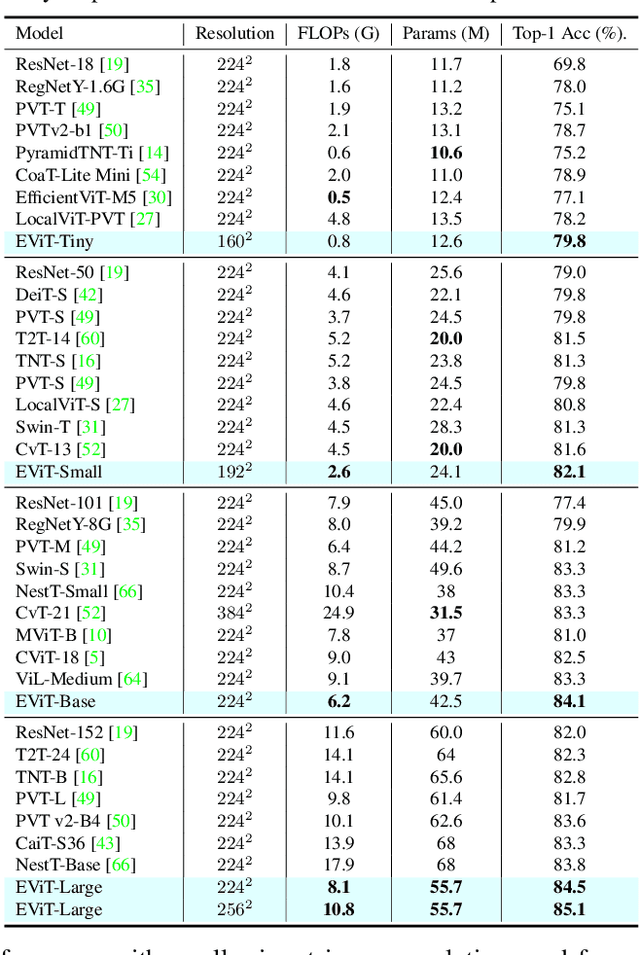

Abstract: Thanks to advances in deep learning, vision transformers have demonstrated competitive performance in various computer vision tasks. Unfortunately, vision transformers still face challenges such as high computational complexity and the absence of a desirable inductive bias. To alleviate these problems, a novel Bi-Fovea Self-Attention (BFSA) is proposed, inspired by the physiological structure and characteristics of bi-fovea vision in eagle eyes. BFSA simulates the shallow-fovea and deep-fovea functions of eagle vision, enabling the network to extract feature representations of targets from coarse to fine and facilitating the interaction of multi-scale feature representations. Additionally, a Bionic Eagle Vision (BEV) block based on BFSA is designed in this study. It combines the advantages of CNNs and vision transformers to enhance the network's global and local feature representation ability. Furthermore, a unified and efficient family of general pyramid backbone networks, called Eagle Vision Transformers (EViTs), is developed by stacking BEV blocks. Experimental results on various computer vision tasks, including image classification, object detection, instance segmentation, and other transfer learning tasks, show that the proposed EViTs perform effectively compared with baselines of the same model size and achieve higher speed on graphics processing units than other models. Code is available at https://github.com/nkusyl/EViT.
