Yiru Yao

A Database for Perceived Quality Assessment of User-Generated VR Videos

Jun 13, 2022

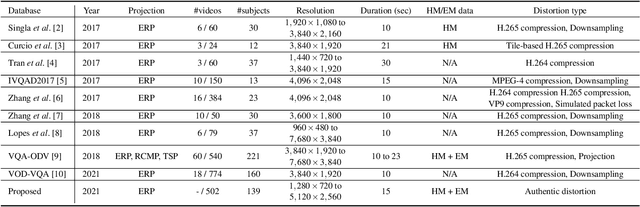

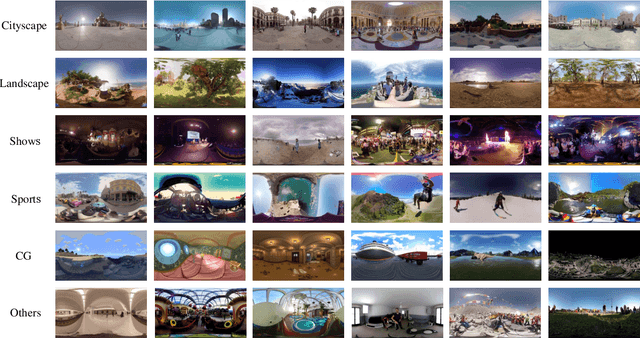

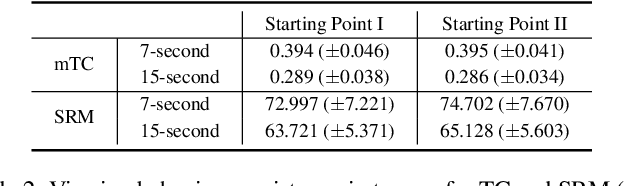

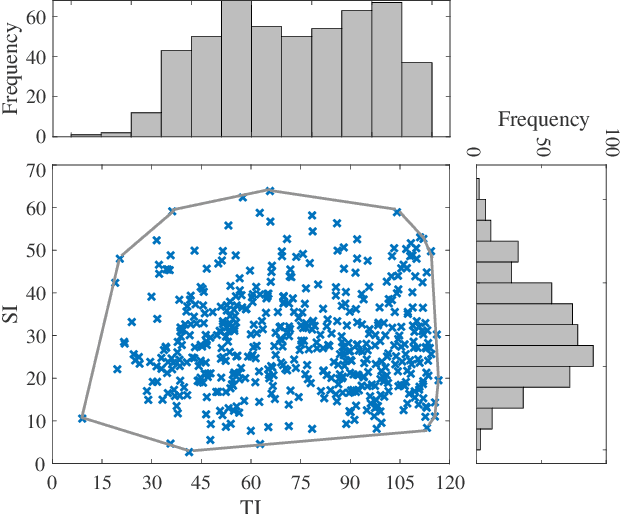

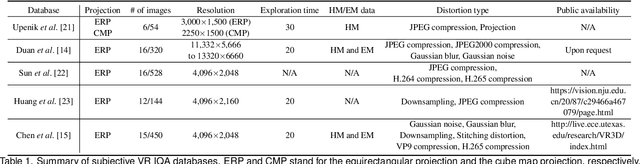

Abstract: Virtual reality (VR) videos (typically in the form of 360$^\circ$ videos) have gained increasing attention due to the fast development of VR technologies and the remarkable popularization of consumer-grade 360$^\circ$ cameras and displays. Thus, it is pivotal to understand how people perceive user-generated VR videos, which may suffer from commingled authentic distortions, often localized in space and time. In this paper, we establish one of the largest 360$^\circ$ video databases, containing 502 user-generated videos with rich content and distortion diversities. We capture viewing behaviors (i.e., scanpaths) of 139 users, and collect their opinion scores of perceived quality under four different viewing conditions (two starting points $\times$ two exploration times). We provide a thorough statistical analysis of the recorded data, resulting in several interesting observations, such as the significant impact of viewing conditions on viewing behaviors and perceived quality. In addition, we explore other uses of our data and analysis, including the evaluation of computational models for quality assessment and saliency detection of 360$^\circ$ videos. We have made the dataset and code available at https://github.com/Yao-Yiru/VR-Video-Database.

Omnidirectional Images as Moving Camera Videos

May 21, 2020

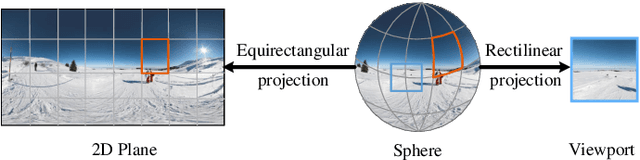

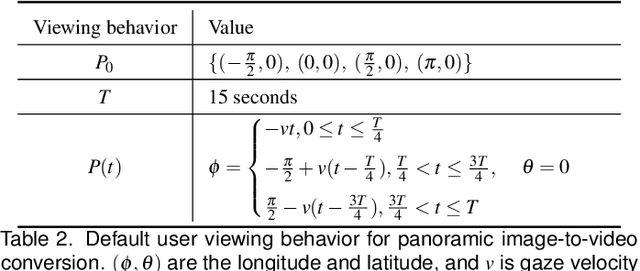

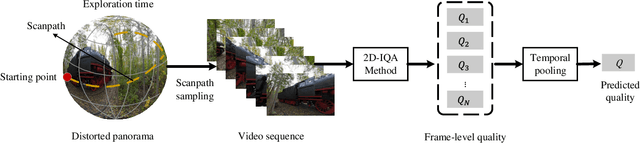

Abstract: Omnidirectional images (also referred to as static 360$^\circ$ panoramas) impose viewing conditions much different from those of regular 2D images. A natural question arises: how do humans perceive image distortions in immersive virtual reality (VR) environments? We argue that, apart from the distorted panorama itself, three types of viewing behavior governed by VR conditions are crucial in determining its perceived quality: starting point, exploration time, and scanpath. In this paper, we propose a principled computational framework for objective quality assessment of 360$^\circ$ images, which embodies the threefold behavior in a delightful way. Specifically, we first transform an omnidirectional image to several video representations using the viewing behavior of different users. We then leverage the recent advances in full-reference 2D image/video quality assessment to compute the perceived quality of the panorama. We construct a set of specific quality measures within the proposed framework, and demonstrate their promise on two VR quality databases.
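
The two-step idea in the abstract above (turn a panorama into a viewport video along a user's scanpath, then score it with a full-reference 2D measure) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration and not the paper's implementation: the function names `viewport`, `psnr`, and `scanpath_quality` are hypothetical, the panorama is assumed to be stored in equirectangular format, viewports are sampled with nearest-neighbour rectilinear projection, and plain PSNR averaged over frames stands in for whichever 2D quality measures the authors actually use.

```python
import numpy as np


def viewport(erp, lon0, lat0, fov=np.pi / 2, size=32):
    """Sample a square rectilinear viewport from an equirectangular image.

    erp is an (H, W) or (H, W, C) array; lon0 and lat0 give the viewing
    direction in radians. Nearest-neighbour sampling keeps the sketch short.
    """
    h, w = erp.shape[:2]
    half = np.tan(fov / 2)
    # Image-plane coordinates of viewport pixels (x to the right, y up).
    x, y = np.meshgrid(np.linspace(-half, half, size),
                       np.linspace(half, -half, size))
    z = np.ones_like(x)
    norm = np.sqrt(x**2 + y**2 + z**2)
    x, y, z = x / norm, y / norm, z / norm
    # Pitch by lat0 about the x-axis, then yaw by lon0 about the y-axis.
    y1 = y * np.cos(lat0) + z * np.sin(lat0)
    z1 = -y * np.sin(lat0) + z * np.cos(lat0)
    x2 = x * np.cos(lon0) + z1 * np.sin(lon0)
    z2 = -x * np.sin(lon0) + z1 * np.cos(lon0)
    # Convert ray directions to longitude/latitude, then to pixel indices.
    lon = np.arctan2(x2, z2)
    lat = np.arcsin(np.clip(y1, -1.0, 1.0))
    col = np.floor((lon / (2 * np.pi) + 0.5) * w).astype(int) % w
    row = np.clip(np.floor((0.5 - lat / np.pi) * h).astype(int), 0, h - 1)
    return erp[row, col]


def psnr(ref, dist, peak=255.0):
    """Peak signal-to-noise ratio between two equally sized frames."""
    mse = np.mean((ref.astype(np.float64) - dist.astype(np.float64)) ** 2)
    return float("inf") if mse == 0 else float(10 * np.log10(peak**2 / mse))


def scanpath_quality(ref_erp, dist_erp, scanpath, fov=np.pi / 2, size=32):
    """Average per-frame PSNR over the viewports visited along a scanpath.

    scanpath is a sequence of (lon, lat) pairs in radians; its first entry
    plays the role of the starting point and its length the exploration time.
    """
    scores = [psnr(viewport(ref_erp, lon, lat, fov, size),
                   viewport(dist_erp, lon, lat, fov, size))
              for lon, lat in scanpath]
    return float(np.mean(scores))


# Toy example: noise confined to the region "behind" the viewer.
rng = np.random.default_rng(0)
ref = rng.integers(0, 256, size=(64, 128), dtype=np.uint8)
dist = ref.copy()
dist[:, :16] = rng.integers(0, 256, size=(64, 16), dtype=np.uint8)

forward_path = [(0.0, 0.0)] * 5    # never looks at the distorted region
backward_path = [(np.pi, 0.0)] * 5  # looks straight at it
q_forward = scanpath_quality(ref, dist, forward_path)
q_backward = scanpath_quality(ref, dist, backward_path)
```

In this toy setup the same distorted panorama receives different scores depending on where the simulated viewer looks, which is the kind of dependence on viewing behavior that the abstract argues for.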
