Yiqun Xu

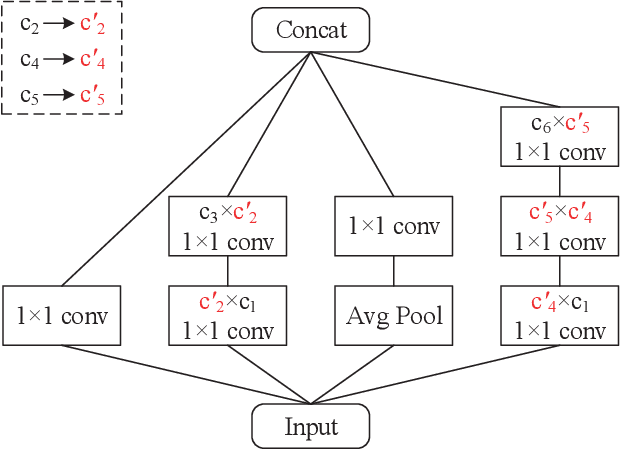

AIP: Adversarial Iterative Pruning Based on Knowledge Transfer for Convolutional Neural Networks

Aug 31, 2021

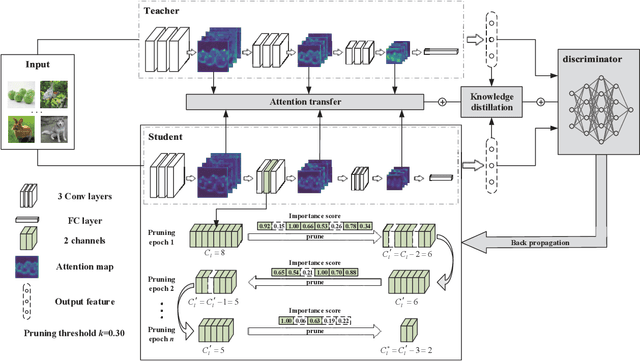

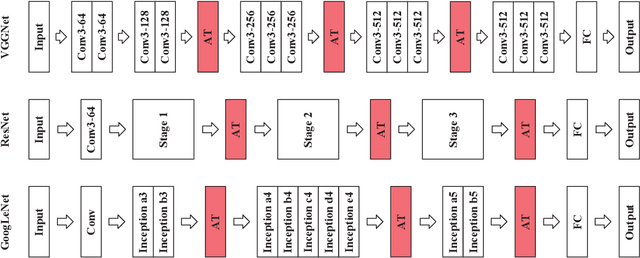

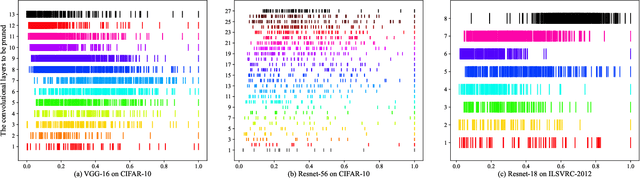

Abstract:With the increase of structure complexity, convolutional neural networks (CNNs) take a fair amount of computation cost. Meanwhile, existing research reveals the salient parameter redundancy in CNNs. The current pruning methods can compress CNNs with little performance drop, but when the pruning ratio increases, the accuracy loss is more serious. Moreover, some iterative pruning methods are difficult to accurately identify and delete unimportant parameters due to the accuracy drop during pruning. We propose a novel adversarial iterative pruning method (AIP) for CNNs based on knowledge transfer. The original network is regarded as the teacher while the compressed network is the student. We apply attention maps and output features to transfer information from the teacher to the student. Then, a shallow fully-connected network is designed as the discriminator to allow the output of two networks to play an adversarial game, thereby it can quickly recover the pruned accuracy among pruning intervals. Finally, an iterative pruning scheme based on the importance of channels is proposed. We conduct extensive experiments on the image classification tasks CIFAR-10, CIFAR-100, and ILSVRC-2012 to verify our pruning method can achieve efficient compression for CNNs even without accuracy loss. On the ILSVRC-2012, when removing 36.78% parameters and 45.55% floating-point operations (FLOPs) of ResNet-18, the Top-1 accuracy drop are only 0.66%. Our method is superior to some state-of-the-art pruning schemes in terms of compressing rate and accuracy. Moreover, we further demonstrate that AIP has good generalization on the object detection task PASCAL VOC.

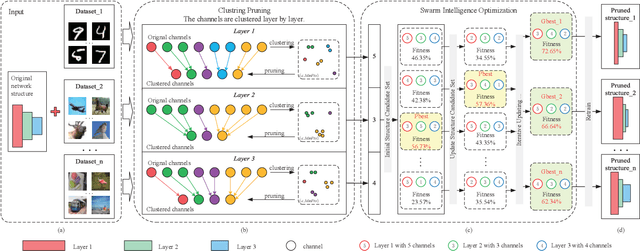

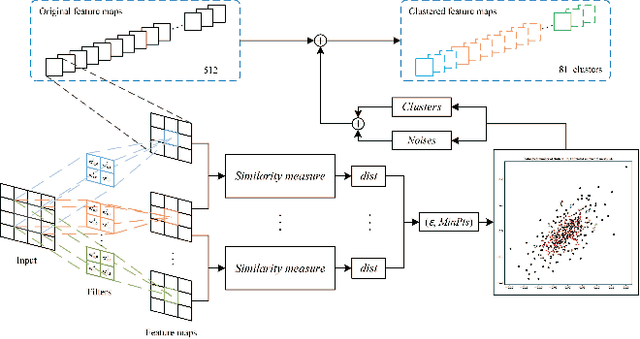

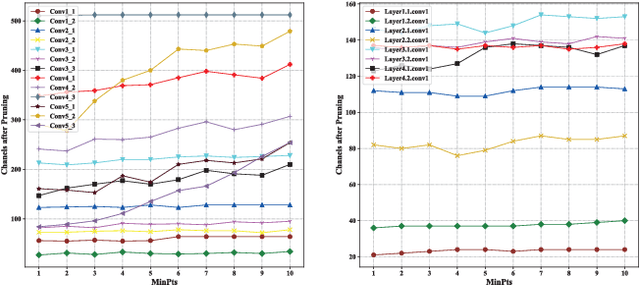

ACP: Automatic Channel Pruning via Clustering and Swarm Intelligence Optimization for CNN

Jan 16, 2021

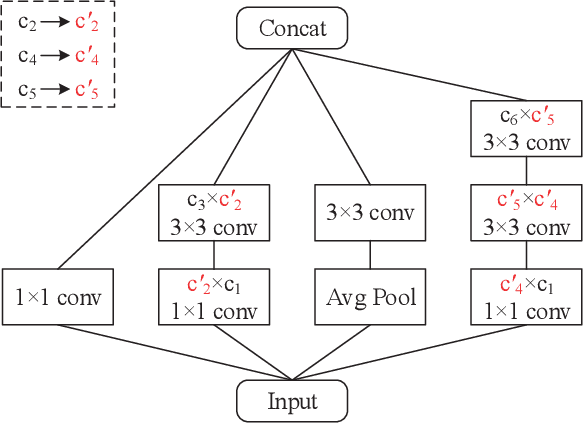

Abstract:As the convolutional neural network (CNN) gets deeper and wider in recent years, the requirements for the amount of data and hardware resources have gradually increased. Meanwhile, CNN also reveals salient redundancy in several tasks. The existing magnitude-based pruning methods are efficient, but the performance of the compressed network is unpredictable. While the accuracy loss after pruning based on the structure sensitivity is relatively slight, the process is time-consuming and the algorithm complexity is notable. In this article, we propose a novel automatic channel pruning method (ACP). Specifically, we firstly perform layer-wise channel clustering via the similarity of the feature maps to perform preliminary pruning on the network. Then a population initialization method is introduced to transform the pruned structure into a candidate population. Finally, we conduct searching and optimizing iteratively based on the particle swarm optimization (PSO) to find the optimal compressed structure. The compact network is then retrained to mitigate the accuracy loss from pruning. Our method is evaluated against several state-of-the-art CNNs on three different classification datasets CIFAR-10/100 and ILSVRC-2012. On the ILSVRC-2012, when removing 64.36% parameters and 63.34% floating-point operations (FLOPs) of ResNet-50, the Top-1 and Top-5 accuracy drop are less than 0.9%. Moreover, we demonstrate that without harming overall performance it is possible to compress SSD by more than 50% on the target detection dataset PASCAL VOC. It further verifies that the proposed method can also be applied to other CNNs and application scenarios.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge