AIP: Adversarial Iterative Pruning Based on Knowledge Transfer for Convolutional Neural Networks

Paper and Code

Aug 31, 2021

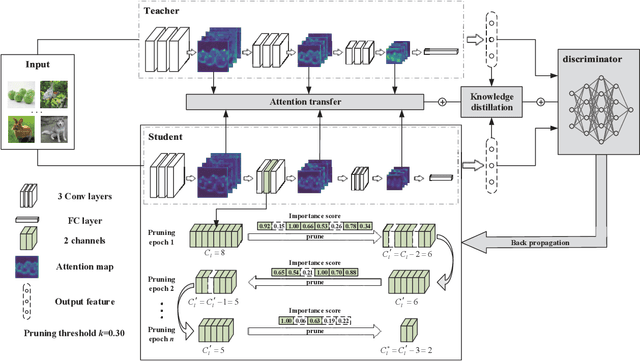

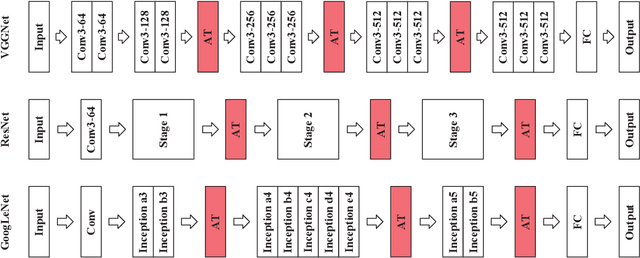

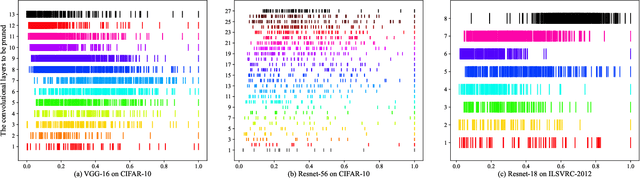

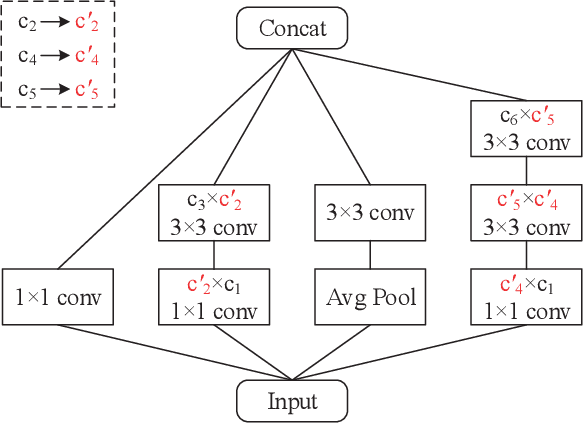

As their structures grow more complex, convolutional neural networks (CNNs) incur substantial computational cost. Meanwhile, existing research reveals salient parameter redundancy in CNNs. Current pruning methods can compress CNNs with little performance drop, but the accuracy loss becomes more serious as the pruning ratio increases. Moreover, some iterative pruning methods struggle to accurately identify and delete unimportant parameters because of the accuracy drop during pruning. We propose a novel adversarial iterative pruning method (AIP) for CNNs based on knowledge transfer. The original network is regarded as the teacher and the compressed network as the student. We apply attention maps and output features to transfer information from the teacher to the student. Then, a shallow fully-connected network is designed as the discriminator so that the outputs of the two networks play an adversarial game, which quickly recovers the accuracy lost to pruning between pruning intervals. Finally, an iterative pruning scheme based on the importance of channels is proposed. We conduct extensive experiments on the image classification tasks CIFAR-10, CIFAR-100, and ILSVRC-2012 to verify that our pruning method can achieve efficient compression for CNNs, in some cases without accuracy loss. On ILSVRC-2012, when removing 36.78% of the parameters and 45.55% of the floating-point operations (FLOPs) of ResNet-18, the Top-1 accuracy drop is only 0.66%. Our method is superior to some state-of-the-art pruning schemes in terms of compression rate and accuracy. Moreover, we further demonstrate that AIP generalizes well to the object detection task PASCAL VOC.
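The iterative, channel-importance-based scheme described above can be illustrated with a minimal sketch. The abstract does not state which importance criterion AIP uses, so the L1 norm of each channel's filter weights is an assumption here, and the names `l1_importance`, `iterative_prune`, and `recover` are hypothetical. The `recover` hook merely stands in for the knowledge-transfer and adversarial fine-tuning that would run between pruning steps; it is not the authors' implementation.

```python
from typing import Callable, List, Sequence


def l1_importance(filters: Sequence[Sequence[float]]) -> List[float]:
    """Score each channel by the L1 norm of its flattened filter weights.

    Assumption: the abstract does not specify the importance criterion.
    """
    return [sum(abs(w) for w in f) for f in filters]


def iterative_prune(
    filters: List[List[float]],
    target_ratio: float,
    steps: int,
    recover: Callable[[List[List[float]]], List[List[float]]] = lambda f: f,
) -> List[List[float]]:
    """Drop low-importance channels over `steps` rounds until about
    `target_ratio` of the original channels have been removed."""
    total = len(filters)
    to_remove = int(total * target_ratio)
    for step in range(1, steps + 1):
        # Cumulative number of channels that should be gone after this round.
        goal = to_remove * step // steps
        n_drop = goal - (total - len(filters))
        scores = l1_importance(filters)
        # Indices of the n_drop least important remaining channels.
        order = sorted(range(len(filters)), key=scores.__getitem__)
        drop = set(order[:n_drop])
        filters = [f for i, f in enumerate(filters) if i not in drop]
        # Hypothetical hook: fine-tuning between pruning steps would go here.
        filters = recover(filters)
    return filters


if __name__ == "__main__":
    toy = [[0.1, -0.1], [1.0, 2.0], [0.0, 0.05], [-3.0, 1.0]]
    print(iterative_prune(toy, target_ratio=0.5, steps=2))
```

Re-scoring the channels in every round, rather than ranking them once up front, reflects the idea that importance can shift after the network has been partially pruned and recovered.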
