Yijin Zhou

INFA-Guard: Mitigating Malicious Propagation via Infection-Aware Safeguarding in LLM-Based Multi-Agent Systems

Jan 21, 2026

Abstract:The rapid advancement of Large Language Model (LLM)-based Multi-Agent Systems (MAS) has introduced significant security vulnerabilities, where malicious influence can propagate virally through inter-agent communication. Conventional safeguards often rely on a binary paradigm that strictly distinguishes between benign and attack agents, failing to account for infected agents, i.e., benign entities converted by attack agents. In this paper, we propose Infection-Aware Guard (INFA-Guard), a novel defense framework that explicitly identifies and addresses infected agents as a distinct threat category. By leveraging infection-aware detection and topological constraints, INFA-Guard accurately localizes attack sources and infected ranges. During remediation, INFA-Guard replaces attackers and rehabilitates infected agents, avoiding malicious propagation while preserving topological integrity. Extensive experiments demonstrate that INFA-Guard achieves state-of-the-art performance, reducing the Attack Success Rate (ASR) by an average of 33%, while exhibiting cross-model robustness, superior topological generalization, and high cost-effectiveness.

GROD: Enhancing Generalization of Transformer with Out-of-Distribution Detection

Jun 13, 2024

Abstract:Transformer networks excel in natural language processing (NLP) and computer vision (CV) tasks. However, they face challenges in generalizing to Out-of-Distribution (OOD) datasets, that is, data whose distribution differs from that seen during training. OOD detection aims to distinguish data that deviates from the expected distribution while maintaining optimal performance on in-distribution (ID) data. This paper introduces a novel approach based on OOD detection, termed the Generate Rounded OOD Data (GROD) algorithm, which significantly bolsters the generalization performance of transformer networks across various tasks. GROD is motivated by our new Probably Approximately Correct (PAC) theory of OOD detection for transformers. The transformer is learnable in terms of OOD detection; that is, when the data is sufficient, the outliers can be well represented. By penalizing the misclassification of OOD data within the loss function and generating synthetic outliers, GROD guarantees learnability and refines the decision boundaries between inliers and outliers. This strategy demonstrates robust adaptability and general applicability across different data types. Evaluated across diverse OOD detection tasks in NLP and CV, GROD achieves SOTA regardless of data format. On image classification tasks, it reduces the SOTA FPR@95 from 21.97% to 0.12% and improves AUROC from 93.62% to 99.98% on average; in detecting semantic text outliers, it reduces the SOTA FPR@95 by 12.89% and improves AUROC by 2.27%. The code is available at https://anonymous.4open.science/r/GROD-OOD-Detection-with-transformers-B70F.

EEG-based Emotion Style Transfer Network for Cross-dataset Emotion Recognition

Aug 09, 2023

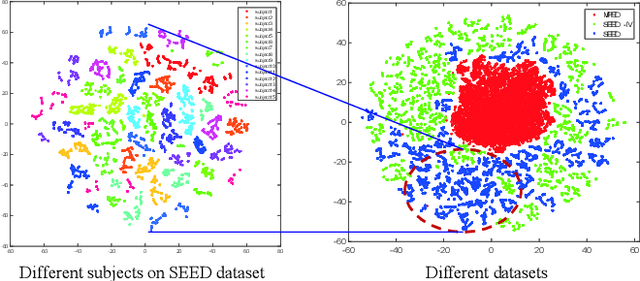

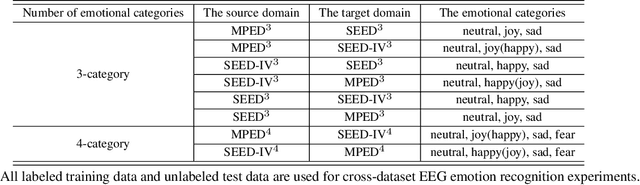

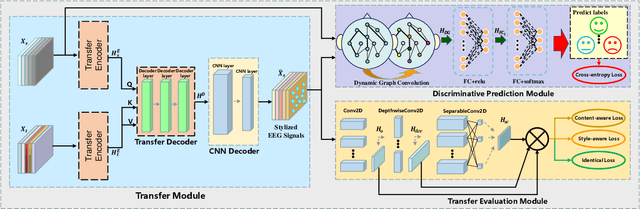

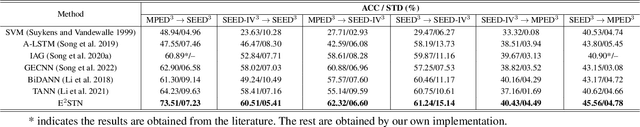

Abstract:As the key to realizing aBCIs, EEG emotion recognition has been widely studied by many researchers. Previous methods have performed well for intra-subject EEG emotion recognition. However, the style mismatch between source domain (training data) and target domain (test data) EEG samples, caused by huge inter-domain differences, is still a critical problem for EEG emotion recognition. To solve the problem of cross-dataset EEG emotion recognition, in this paper we propose an EEG-based Emotion Style Transfer Network (E2STN) to obtain EEG representations that contain the content information of the source domain and the style information of the target domain, which we call stylized emotional EEG representations. These representations are helpful for cross-dataset discriminative prediction. Concretely, E2STN consists of three modules: a transfer module, a transfer evaluation module, and a discriminative prediction module. The transfer module encodes the domain-specific information of the source and target domains and then reconstructs the source domain's emotional pattern and the target domain's statistical characteristics into new stylized EEG representations. In this process, the transfer evaluation module constrains the generated representations so that they more precisely fuse the two kinds of complementary information from the source and target domains and avoid distortion. Finally, the generated stylized EEG representations are fed into the discriminative prediction module for final classification. Extensive experiments show that E2STN achieves state-of-the-art performance on cross-dataset EEG emotion recognition tasks.

Progressive Graph Convolution Network for EEG Emotion Recognition

Dec 14, 2021

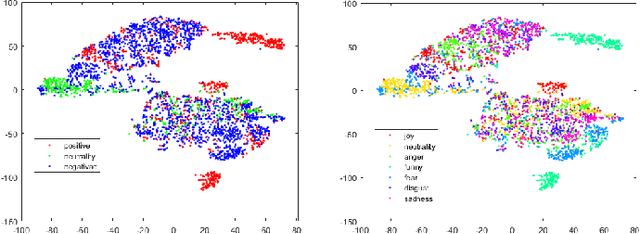

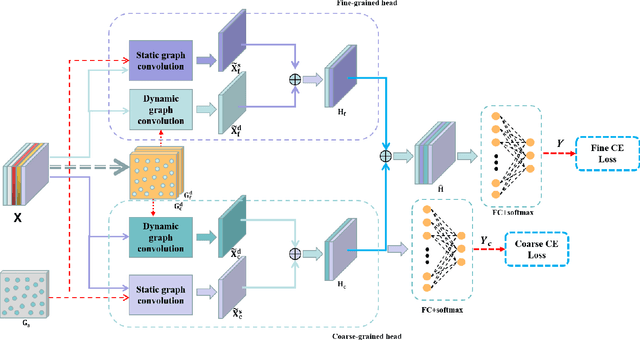

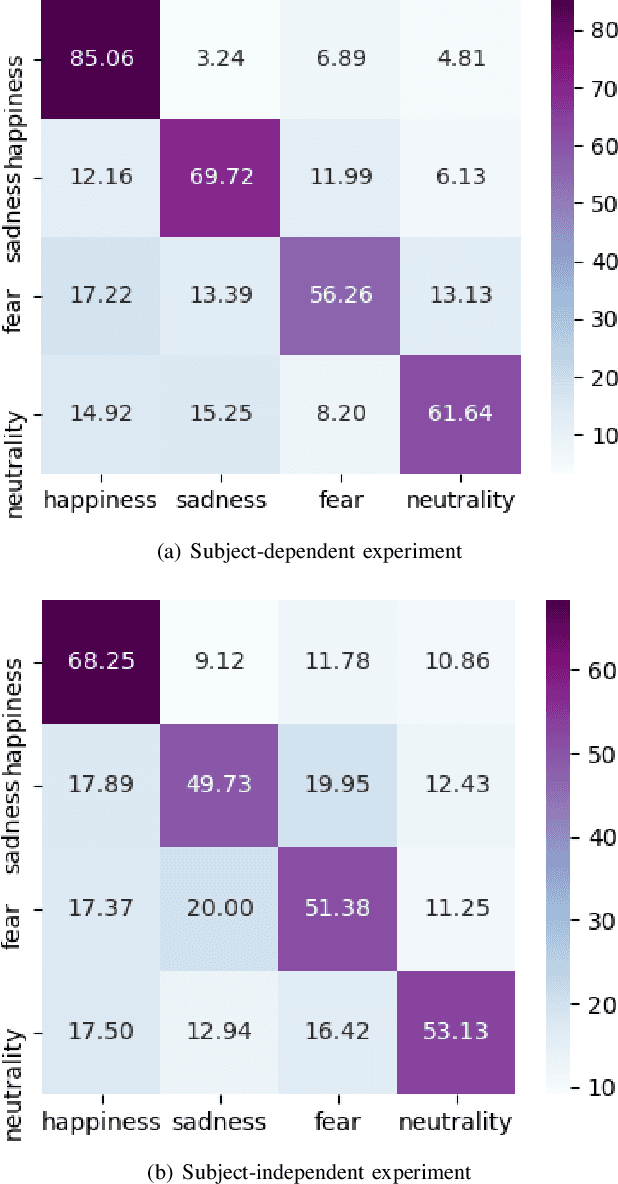

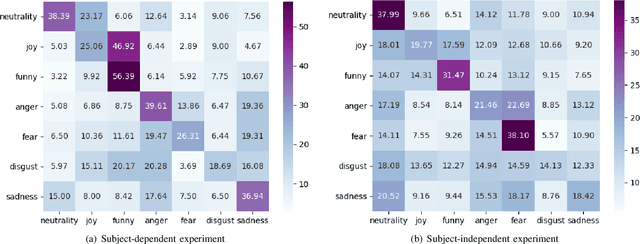

Abstract:Studies in the area of neuroscience have revealed the relationship between emotional patterns and brain functional regions, demonstrating that dynamic relationships between different brain regions are an essential factor affecting emotion recognition determined through electroencephalography (EEG). Moreover, in EEG emotion recognition, we can observe that clearer boundaries exist between coarse-grained emotions than those between fine-grained emotions, based on the same EEG data; this indicates the concurrence of large coarse- and small fine-grained emotion variations. Thus, the progressive classification process from coarse- to fine-grained categories may be helpful for EEG emotion recognition. Consequently, in this study, we propose a progressive graph convolution network (PGCN) for capturing this inherent characteristic in EEG emotional signals and progressively learning the discriminative EEG features. To fit different EEG patterns, we constructed a dual-graph module to characterize the intrinsic relationship between different EEG channels, containing the dynamic functional connections and static spatial proximity information of brain regions from neuroscience research. Moreover, motivated by the observation of the relationship between coarse- and fine-grained emotions, we adopt a dual-head module that enables the PGCN to progressively learn more discriminative EEG features, from coarse-grained (easy) to fine-grained categories (difficult), referring to the hierarchical characteristic of emotion. To verify the performance of our model, extensive experiments were conducted on two public datasets: SEED-IV and multi-modal physiological emotion database (MPED).
