Yeongmo Kim

On the Convergence of Continual Learning with Adaptive Methods

Apr 15, 2024

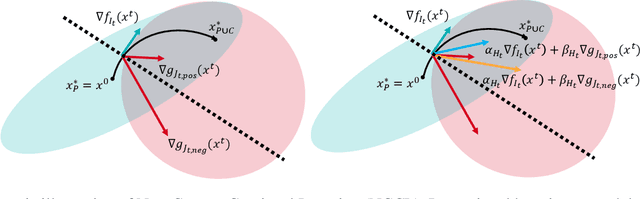

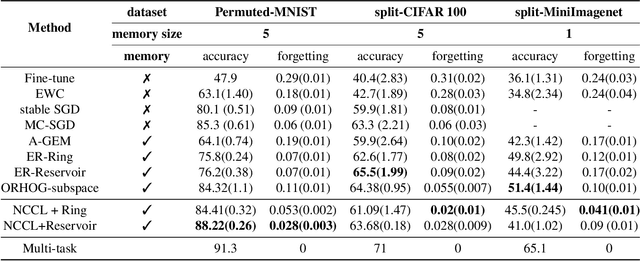

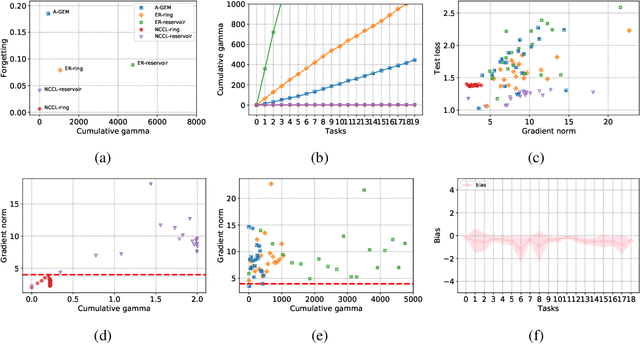

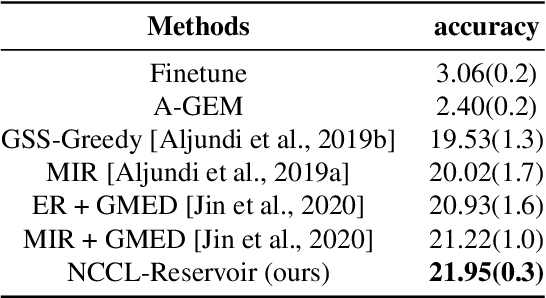

Abstract: One of the objectives of continual learning is to prevent catastrophic forgetting when learning multiple tasks sequentially, and existing solutions have been driven by the conceptualization of the plasticity-stability dilemma. However, the convergence of continual learning for each sequential task has received less study so far. In this paper, we provide a convergence analysis of memory-based continual learning with stochastic gradient descent, along with empirical evidence that training on current tasks causes cumulative degradation of previous tasks. We propose an adaptive method for nonconvex continual learning (NCCL), which adjusts the step sizes of both previous and current tasks using the gradients. The proposed method can achieve the same convergence rate as the SGD method when the catastrophic forgetting term, which we define in the paper, is suppressed at each iteration. Further, we demonstrate that the proposed algorithm improves the performance of continual learning over existing methods on several image classification tasks.

* Proceedings of the Thirty-Ninth Conference on Uncertainty in Artificial Intelligence (UAI 2023), see https://proceedings.mlr.press/v216/han23a.html

Learning to Learn Unlearned Feature for Brain Tumor Segmentation

May 13, 2023

Abstract: We propose a fine-tuning algorithm for brain tumor segmentation that needs only a few data samples and helps networks avoid forgetting the original tasks. Our approach is based on active learning and meta-learning. One of the difficulties in medical image segmentation is the lack of properly annotated datasets: reliable annotation requires doctors, and a disease can have many variants. Glioma and brain metastasis, for example, are different types of brain tumor with different structural features in MR images. Therefore, it is impossible to produce large-scale medical image datasets for all types of diseases. In this paper, we show a transfer learning method from high-grade glioma to brain metastasis, and demonstrate that the proposed algorithm achieves balanced parameters for both the glioma and brain metastasis domains within a few steps.
