Ye-Sheen Lim

Scale-Invariant Learning-to-Rank

Oct 02, 2024

Abstract:At Expedia, learning-to-rank (LTR) models play a key role on our website in sorting and presenting the information most relevant to users, such as search filters, property rooms, amenities, and images. A major challenge in deploying these models is ensuring consistent feature scaling between training and production data, as discrepancies can lead to unreliable rankings when deployed. Normalization techniques like feature standardization and batch normalization could address these issues but are impractical in production due to latency impacts and the difficulty of distributed real-time inference. To address this feature scaling issue, we introduce a scale-invariant LTR framework which combines a deep and a wide neural network to mathematically guarantee scale-invariance in the model at both training and prediction time. We evaluate our framework in simulated real-world scenarios with injected feature scale issues by perturbing the test set at prediction time, and show that even with inconsistent train-test scaling, using our framework achieves better performance than without it.
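The scaling problem described in this abstract can be illustrated with a minimal sketch. It is not the paper's framework: the linear scorer, the weights, the feature values, and the function name `rank_items` are all hypothetical, chosen only to show how rescaling one feature at prediction time can reorder a ranking produced by a fixed model.

```python
import numpy as np


def rank_items(features: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Return item indices sorted from highest to lowest linear score."""
    scores = features @ weights
    return np.argsort(-scores, kind="stable")


# Hypothetical fixed model weights and three items with two features each.
weights = np.array([1.0, 1.0])
train_like = np.array([
    [0.9, 0.1],
    [0.2, 0.7],
    [0.4, 0.3],
])

# Simulated production mismatch: the second feature arrives in a
# different unit, i.e. multiplied by 100 relative to training.
perturbed = train_like * np.array([1.0, 100.0])

ranking_expected = rank_items(train_like, weights)
ranking_perturbed = rank_items(perturbed, weights)

print("consistent scaling:", ranking_expected.tolist())
print("perturbed scaling: ", ranking_perturbed.tolist())
```

With these illustrative numbers the top-ranked item changes once the second feature is rescaled, which is the kind of train-test inconsistency the evaluation in the abstract injects.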

Intra-Day Price Simulation with Generative Adversarial Modelling of the Order Flow

Sep 28, 2021

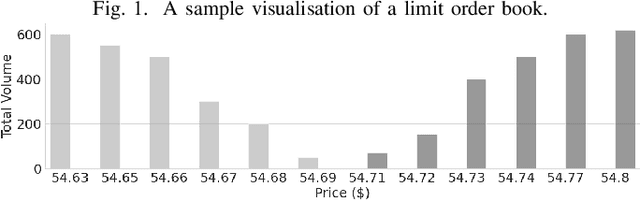

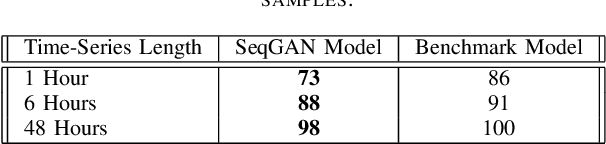

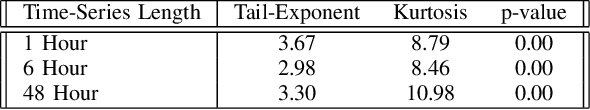

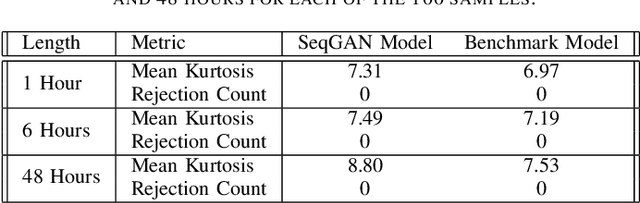

Abstract:Intra-day price variations in financial markets are driven by the sequence of orders, called the order flow, that is submitted at high frequency by traders. This paper introduces a novel application of the Sequence Generative Adversarial Networks framework to model the order flow, such that random sequences of the order flow can then be generated to simulate the intra-day variation of prices. As a benchmark, a well-known parametric model from the quantitative finance literature is selected. The models are fitted, and then multiple random paths of the order flow sequences are sampled from each model. Model performances are then evaluated by using the generated sequences to simulate price variations, and we compare the empirical regularities between the price variations produced by the generated and real sequences. The empirical regularities considered include the distribution of the price log-returns, the price volatility, and the heavy-tail of the log-returns distributions. The results show that the order sequences from the generative model are better able to reproduce the statistical behaviour of real price variations than the sequences from the benchmark.
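The empirical regularities named in this abstract can be computed from a price path roughly as follows. This is a sketch under stated assumptions, not the paper's evaluation code: the function names are hypothetical, volatility is taken as the sample standard deviation of log-returns, and excess kurtosis is used as a simple proxy for heavy tails.

```python
import numpy as np


def log_returns(prices) -> np.ndarray:
    """Log-returns r_t = log(p_t) - log(p_{t-1}) of a price series."""
    prices = np.asarray(prices, dtype=float)
    return np.diff(np.log(prices))


def volatility(returns) -> float:
    """Sample standard deviation of the returns (assumed volatility measure)."""
    return float(np.std(returns, ddof=1))


def excess_kurtosis(returns) -> float:
    """Fourth standardized moment minus 3; positive values suggest heavy tails."""
    r = np.asarray(returns, dtype=float)
    centered = r - r.mean()
    m2 = np.mean(centered ** 2)
    m4 = np.mean(centered ** 4)
    return float(m4 / m2 ** 2 - 3.0)


def summarize(prices) -> dict:
    """Collect the statistics for one real or simulated price path."""
    r = log_returns(prices)
    return {
        "volatility": volatility(r),
        "excess_kurtosis": excess_kurtosis(r),
    }
```

Applying `summarize` to both the real price path and each simulated path gives comparable numbers for the kind of side-by-side check the abstract describes.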

Deep Probabilistic Modelling of Price Movements for High-Frequency Trading

Mar 31, 2020

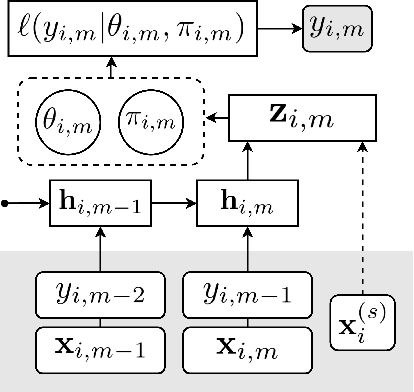

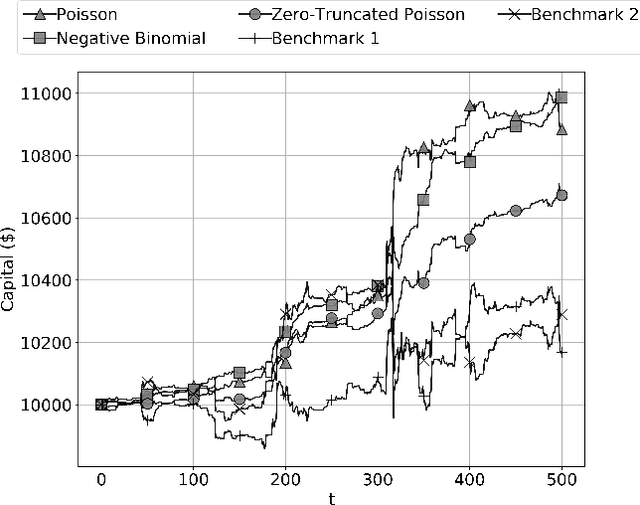

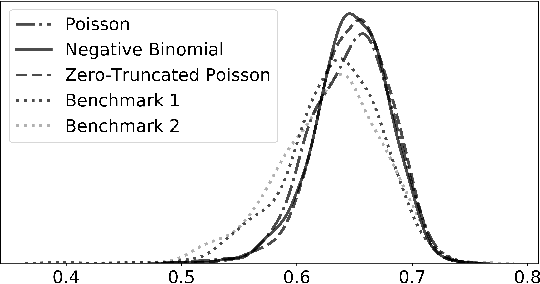

Abstract:In this paper we propose a deep recurrent architecture for the probabilistic modelling of high-frequency market prices, important for the risk management of automated trading systems. Our proposed architecture incorporates probabilistic mixture models into deep recurrent neural networks. The resulting deep mixture models simultaneously address several practical challenges important in the development of automated high-frequency trading strategies that were previously neglected in the literature: 1) probabilistic forecasting of the price movements; 2) single objective prediction of both the direction and size of the price movements. We train our models on high-frequency Bitcoin market data and evaluate them against benchmark models obtained from the literature. We show that our model outperforms the benchmark models in both a metric-based test and in a simulated trading scenario.

Deep Recurrent Modelling of Stationary Bitcoin Price Formation Using the Order Flow

Mar 31, 2020

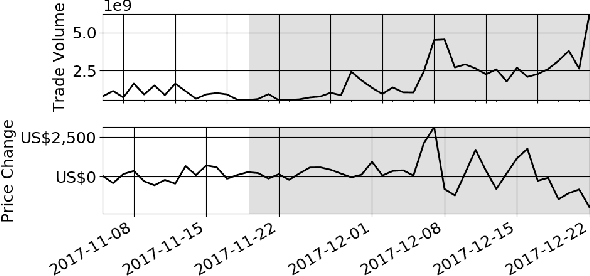

Abstract:In this paper we propose a deep recurrent model based on the order flow for the stationary modelling of high-frequency directional price movements. The order flow is the microsecond stream of orders arriving at the exchange, driving the formation of prices seen on the price chart of a stock or currency. To test the stationarity of our proposed model, we train it on data from before the 2017 Bitcoin bubble period and test it during and after the bubble. We show that without any retraining, the proposed model is temporally stable even as Bitcoin trading shifts into an extremely volatile "bubble trouble" period. The significance of the result is shown by benchmarking against existing state-of-the-art models in the literature for modelling price formation using deep learning.
