Alessio Petrozziello

Scale-Invariant Learning-to-Rank

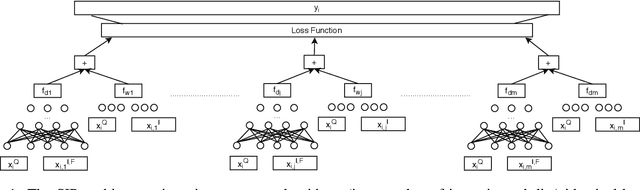

Oct 02, 2024

Abstract: At Expedia, learning-to-rank (LTR) models play a key role on our website in sorting and presenting information more relevant to users, such as search filters, property rooms, amenities, and images. A major challenge in deploying these models is ensuring consistent feature scaling between training and production data, as discrepancies can lead to unreliable rankings when deployed. Normalization techniques like feature standardization and batch normalization could address these issues but are impractical in production due to latency impacts and the difficulty of distributed real-time inference. To address the consistent feature scaling issue, we introduce a scale-invariant LTR framework which combines a deep and a wide neural network to mathematically guarantee scale-invariance in the model at both training and prediction time. We evaluate our framework in simulated real-world scenarios with injected feature scale issues by perturbing the test set at prediction time, and show that even with inconsistent train-test scaling, using the framework achieves better performance than without it.

A scale invariant ranking function for learning-to-rank: a real-world use case

Oct 21, 2021

Abstract: Nowadays, Online Travel Agencies provide the main service for booking holidays, business trips, accommodations, etc. As in many e-commerce services where users, items, and preferences are involved, the use of a Recommender System facilitates the navigation of the marketplaces. One of the main challenges when productizing machine learning models (in this case, Learning-to-Rank models) is the need not only for consistent pre-processing transformations, but also for input features that maintain a similar scale at both training and prediction time. However, the features' scale does not necessarily stay the same in a real-world production environment, which could lead to an unexpected ranking order. Normalization techniques such as feature standardization, batch normalization and layer normalization are commonly used to tackle the scaling issue. However, these techniques. To address this issue, in this paper we propose a novel scale-invariant ranking function (dubbed SIR), which is accomplished by combining a deep and a wide neural network. We incorporate SIR into five state-of-the-art Learning-to-Rank models and compare the performance of the combined models with the classic algorithms on a large data set containing 56 million booked searches from the Hotels.com website. In addition, we simulate four real-world scenarios where the features' scale in the test set is inconsistent with that in the training set. The results reveal that when the features' scale is inconsistent at prediction time, Learning-to-Rank methods incorporating SIR outperform their original counterparts in all scenarios (with a performance difference of up to 14.7%), while when the features' scale in the training and test sets is consistent, our proposal achieves accuracy comparable to the classic algorithms.
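As a rough illustration of what scale invariance means for a ranking function, the sketch below compares two toy scorers when one feature is rescaled at prediction time. It is not the SIR architecture from the paper: the log-linear scorer, the `tanh` scorer, the feature values, and the weights are all assumptions chosen for the example. The point it shows is that a linear model on log-features shifts every item's score by the same constant when a feature is multiplied by a positive factor, so the order within a query is preserved, whereas a scorer applied to raw features has no such guarantee.

```python
import math


def rank(scores):
    """Return item indices ordered from highest to lowest score."""
    return sorted(range(len(scores)), key=lambda i: -scores[i])


def log_linear_score(item, weights):
    # Linear model on log-features (requires strictly positive features).
    # Multiplying feature j by c > 0 adds weights[j] * log(c) to every
    # item's score, so the ranking within a query does not change.
    return sum(w * math.log(x) for w, x in zip(weights, item))


def raw_nonlinear_score(item, weights):
    # Toy scorer on raw features with a saturating nonlinearity.
    # It offers no invariance to feature rescaling.
    return sum(w * math.tanh(x) for w, x in zip(weights, item))


def rescale(items, column, factor):
    """Multiply one feature column by a positive factor for all items."""
    return [
        tuple(x * factor if j == column else x for j, x in enumerate(item))
        for item in items
    ]


# Hypothetical query with three items described by (price, rating).
items = [(120.0, 4.5), (80.0, 3.0), (200.0, 4.9)]
weights = (-1.0, 2.0)  # hypothetical weights: cheaper and better rated is better

# Simulate a scale mismatch at prediction time, e.g. price in different units.
perturbed = rescale(items, column=0, factor=0.01)

invariant_before = rank([log_linear_score(it, weights) for it in items])
invariant_after = rank([log_linear_score(it, weights) for it in perturbed])

raw_before = rank([raw_nonlinear_score(it, weights) for it in items])
raw_after = rank([raw_nonlinear_score(it, weights) for it in perturbed])

print("log-linear ranking unchanged:", invariant_before == invariant_after)
print("raw-feature ranking unchanged:", raw_before == raw_after)
```

The invariance here holds only for a positive multiplicative rescaling applied to every item in the query; additive shifts or per-item perturbations would need a different argument.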
