Yasuhiro Endo

Universality of Many-body Projected Ensemble for Learning Quantum Data Distribution

Jan 26, 2026

Abstract:Generating quantum data by learning the underlying quantum distribution poses challenges in both theoretical and practical scenarios, yet it is a critical task for understanding quantum systems. A fundamental question in quantum machine learning (QML) is the universality of approximation: whether a parameterized QML model can approximate any quantum distribution. We address this question by proving a universality theorem for the Many-body Projected Ensemble (MPE) framework, a method for quantum state design that uses a single many-body wave function to prepare random states. This demonstrates that MPE can approximate any distribution of pure states within a 1-Wasserstein distance error. This theorem provides a rigorous guarantee of universal expressivity, addressing key theoretical gaps in QML. For practicality, we propose an Incremental MPE variant with layer-wise training to improve the trainability. Numerical experiments on clustered quantum states and quantum chemistry datasets validate MPE's efficacy in learning complex quantum data distributions.
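As a rough illustration of the 1-Wasserstein error mentioned in the abstract above, the following sketch computes the distance between two equal-sized, uniformly weighted ensembles of pure states. The ground metric (trace distance between pure states), the equal-size restriction, and the names `trace_distance` and `wasserstein1` are assumptions made for this example, not details taken from the paper. For uniform ensembles of the same size, an optimal transport plan can be taken to be a permutation, so an assignment solver is enough.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def trace_distance(psi, phi):
    """Trace distance between two normalized pure states (assumed ground metric)."""
    fidelity = np.clip(abs(np.vdot(psi, phi)) ** 2, 0.0, 1.0)
    return np.sqrt(1.0 - fidelity)


def wasserstein1(ensemble_a, ensemble_b):
    """1-Wasserstein distance between two uniform ensembles of equal size."""
    if len(ensemble_a) != len(ensemble_b):
        raise ValueError("this sketch only handles ensembles of equal size")
    # Pairwise transport costs between states of the two ensembles.
    cost = np.array([[trace_distance(a, b) for b in ensemble_b] for a in ensemble_a])
    # Minimum-cost perfect matching; optimal for uniform, equal-sized ensembles.
    rows, cols = linear_sum_assignment(cost)
    return float(cost[rows, cols].mean())


if __name__ == "__main__":
    rng = np.random.default_rng(0)

    def random_states(n, dim):
        # Haar-random pure states obtained from normalized complex Gaussian vectors.
        v = rng.normal(size=(n, dim)) + 1j * rng.normal(size=(n, dim))
        return v / np.linalg.norm(v, axis=1, keepdims=True)

    target = random_states(8, 4)
    generated = random_states(8, 4)
    print(wasserstein1(target, generated))
```

Ensembles of different sizes or with non-uniform weights would need a general linear-programming formulation of optimal transport instead of the assignment shortcut used here.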

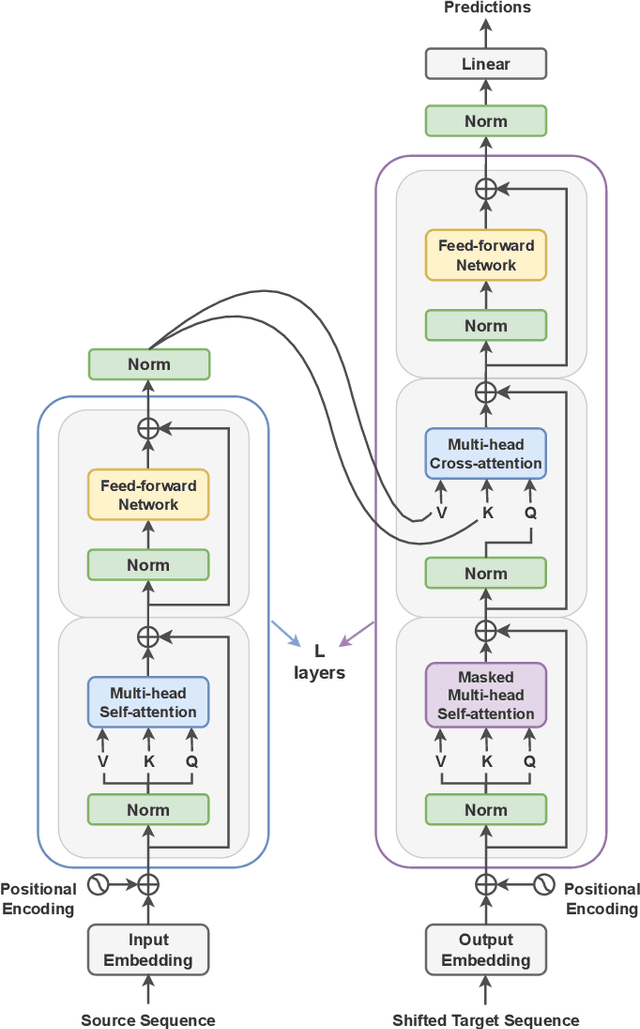

Iterative Quantum Feature Maps

Jun 24, 2025

Abstract:Quantum machine learning models that leverage quantum circuits as quantum feature maps (QFMs) are recognized for their enhanced expressive power in learning tasks. Such models have demonstrated rigorous end-to-end quantum speedups for specific families of classification problems. However, deploying deep QFMs on real quantum hardware remains challenging due to circuit noise and hardware constraints. Additionally, variational quantum algorithms often suffer from computational bottlenecks, particularly in accurate gradient estimation, which significantly increases quantum resource demands during training. We propose Iterative Quantum Feature Maps (IQFMs), a hybrid quantum-classical framework that constructs a deep architecture by iteratively connecting shallow QFMs with classically computed augmentation weights. By incorporating contrastive learning and a layer-wise training mechanism, IQFMs effectively reduce quantum runtime and mitigate noise-induced degradation. In tasks involving noisy quantum data, numerical experiments show that IQFMs outperform quantum convolutional neural networks, without requiring the optimization of variational quantum parameters. Even for a typical classical image classification benchmark, a carefully designed IQFM achieves performance comparable to that of classical neural networks. This framework presents a promising path to address current limitations and harness the full potential of quantum-enhanced machine learning.

Molecular Quantum Transformer

Mar 27, 2025

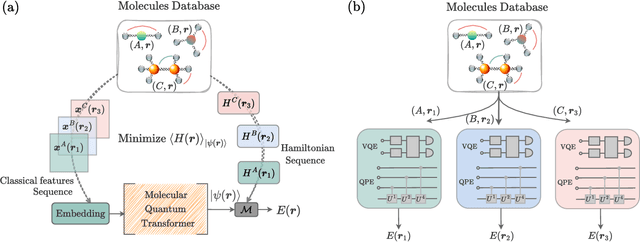

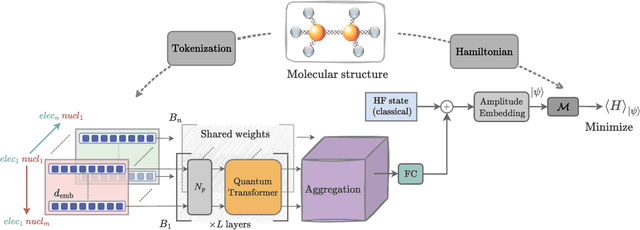

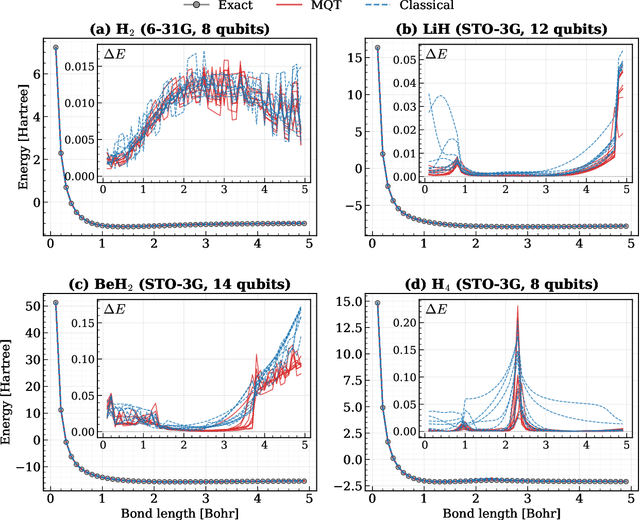

Abstract:The Transformer model, renowned for its powerful attention mechanism, has achieved state-of-the-art performance in various artificial intelligence tasks but faces challenges such as high computational cost and memory usage. Researchers are exploring quantum computing to enhance the Transformer's design, though it still shows limited success with classical data. With a growing focus on leveraging quantum machine learning for quantum data, particularly in quantum chemistry, we propose the Molecular Quantum Transformer (MQT) for modeling interactions in molecular quantum systems. By utilizing quantum circuits to implement the attention mechanism on the molecular configurations, MQT can efficiently calculate ground-state energies for all configurations. Numerical demonstrations show that in calculating ground-state energies for H_2, LiH, BeH_2, and H_4, MQT outperforms the classical Transformer, highlighting the promise of quantum effects in Transformer structures. Furthermore, its pretraining capability on diverse molecular data facilitates the efficient learning of new molecules, extending its applicability to complex molecular systems with minimal additional effort. Our method offers an alternative to existing quantum algorithms for estimating ground-state energies, opening new avenues in quantum chemistry and materials science.

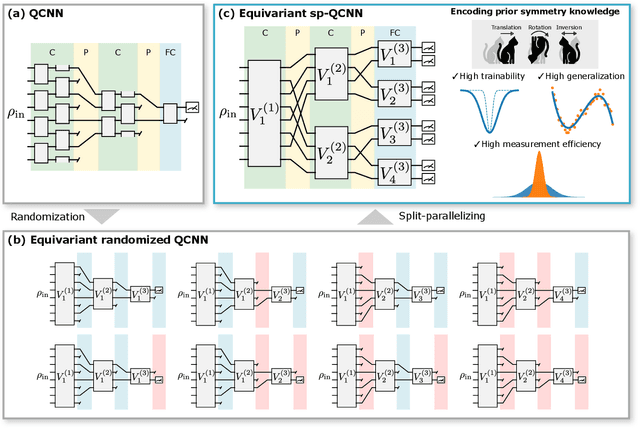

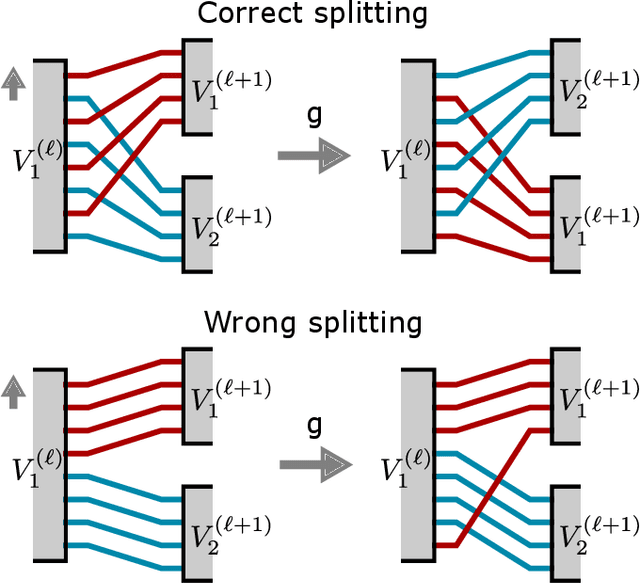

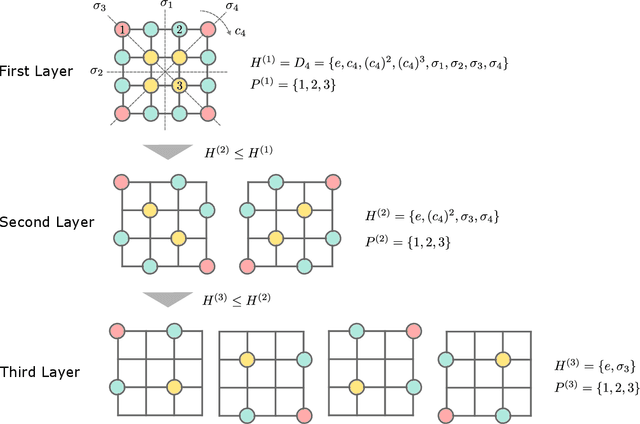

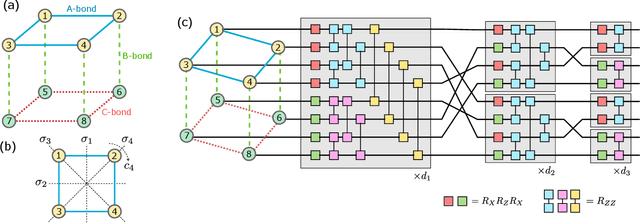

Resource-efficient equivariant quantum convolutional neural networks

Oct 02, 2024

Abstract:Equivariant quantum neural networks (QNNs) are promising quantum machine learning models that exploit symmetries to provide potential quantum advantages. Despite theoretical developments in equivariant QNNs, their implementation on near-term quantum devices remains challenging due to limited computational resources. This study proposes a resource-efficient model of equivariant quantum convolutional neural networks (QCNNs) called equivariant split-parallelizing QCNN (sp-QCNN). Using a group-theoretical approach, we encode general symmetries into our model beyond the translational symmetry addressed by previous sp-QCNNs. We achieve this by splitting the circuit at the pooling layer while preserving symmetry. This splitting structure effectively parallelizes QCNNs to improve measurement efficiency in estimating the expectation value of an observable and its gradient by order of the number of qubits. Our model also exhibits high trainability and generalization performance, including the absence of barren plateaus. Numerical experiments demonstrate that the equivariant sp-QCNN can be trained and generalized with fewer measurement resources than a conventional equivariant QCNN in a noisy quantum data classification task. Our results contribute to the advancement of practical quantum machine learning algorithms.

Quantum Curriculum Learning

Jul 02, 2024

Abstract:Quantum machine learning (QML) requires significant quantum resources to achieve quantum advantage. Research should prioritize both the efficient design of quantum architectures and the development of learning strategies to optimize resource usage. We propose a framework called quantum curriculum learning (Q-CurL) for quantum data, where the curriculum introduces simpler tasks or data to the learning model before progressing to more challenging ones. We define the curriculum criteria based on the data density ratio between tasks to determine the curriculum order. We also implement a dynamic learning schedule to emphasize the significance of quantum data in optimizing the loss function. Empirical evidence shows that Q-CurL enhances the training convergence and the generalization for unitary learning tasks and improves the robustness of quantum phase recognition tasks. Our framework provides a general learning strategy, bringing QML closer to realizing practical advantages.

Trade-off between Gradient Measurement Efficiency and Expressivity in Deep Quantum Neural Networks

Jun 26, 2024

Abstract:Quantum neural networks (QNNs) require an efficient training algorithm to achieve practical quantum advantages. A promising approach is the use of gradient-based optimization algorithms, where gradients are estimated through quantum measurements. However, it is generally difficult to efficiently measure gradients in QNNs because the quantum state collapses upon measurement. In this work, we prove a general trade-off between gradient measurement efficiency and expressivity in a wide class of deep QNNs, elucidating the theoretical limits and possibilities of efficient gradient estimation. This trade-off implies that a more expressive QNN requires a higher measurement cost in gradient estimation, whereas we can increase gradient measurement efficiency by reducing the QNN expressivity to suit a given task. We further propose a general QNN ansatz called the stabilizer-logical product ansatz (SLPA), which can reach the upper limit of the trade-off inequality by leveraging the symmetric structure of the quantum circuit. In learning an unknown symmetric function, the SLPA drastically reduces the quantum resources required for training while maintaining accuracy and trainability compared to a well-designed symmetric circuit based on the parameter-shift method. Our results not only reveal a theoretical understanding of efficient training in QNNs but also provide a standard and broadly applicable efficient QNN design.
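For readers unfamiliar with the parameter-shift method used as the baseline in the abstract above, here is a minimal sketch on a single-qubit toy circuit. It is not the SLPA and not the paper's setup: the circuit (an RY rotation on |0> followed by a Z measurement) and the function names are assumptions chosen for illustration, and expectation values are computed exactly instead of being estimated from measurement shots. Each parameter needs two circuit evaluations, which is the per-parameter measurement cost that motivates more efficient gradient schemes.

```python
import numpy as np

# Pauli-Z observable measured at the end of the toy circuit.
Z = np.diag([1.0, -1.0])


def ry(theta):
    """Matrix of the single-qubit rotation RY(theta) = exp(-i * theta * Y / 2)."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]])


def expectation(theta):
    """Exact <Z> after applying RY(theta) to |0>; equals cos(theta)."""
    state = ry(theta) @ np.array([1.0, 0.0])
    return float(state @ Z @ state)


def parameter_shift_grad(theta):
    """Gradient of <Z> from two shifted circuit evaluations (shift of pi/2)."""
    plus = expectation(theta + np.pi / 2)
    minus = expectation(theta - np.pi / 2)
    return 0.5 * (plus - minus)


if __name__ == "__main__":
    theta = 0.3
    # The analytic derivative of cos(theta) is -sin(theta); the two should agree.
    print(parameter_shift_grad(theta), -np.sin(theta))
```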
