Resource-efficient equivariant quantum convolutional neural networks


Oct 02, 2024

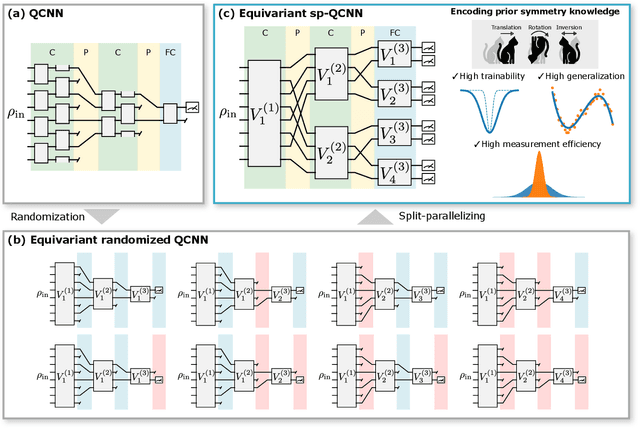

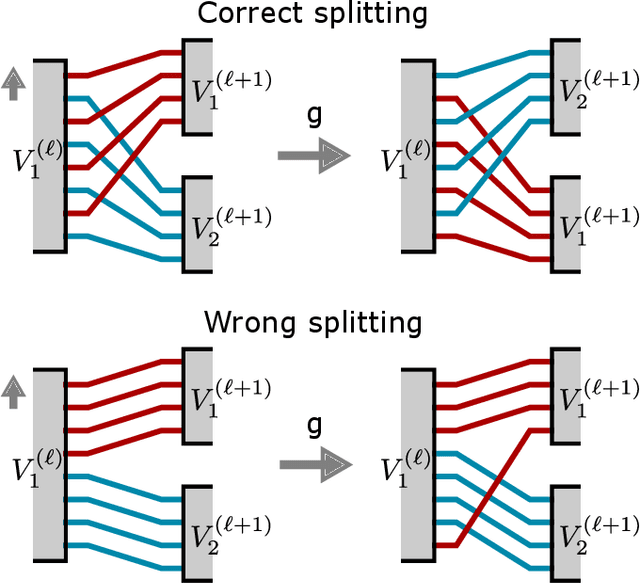

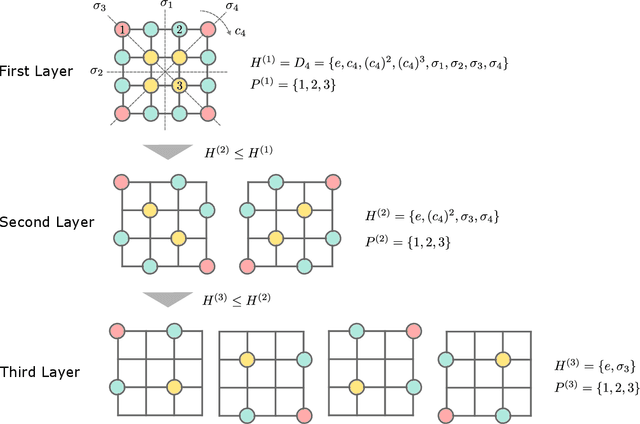

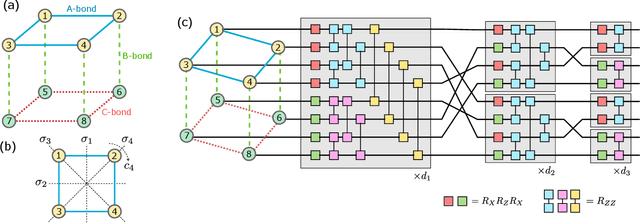

Equivariant quantum neural networks (QNNs) are promising quantum machine learning models that exploit symmetries to provide potential quantum advantages. Despite theoretical developments in equivariant QNNs, their implementation on near-term quantum devices remains challenging because of limited computational resources. This study proposes a resource-efficient model of equivariant quantum convolutional neural networks (QCNNs) called the equivariant split-parallelizing QCNN (sp-QCNN). Using a group-theoretical approach, we encode general symmetries into our model, going beyond the translational symmetry addressed by previous sp-QCNNs. We achieve this by splitting the circuit at the pooling layer while preserving symmetry. This splitting structure effectively parallelizes QCNNs and improves the measurement efficiency of estimating the expectation value of an observable and its gradient by a factor on the order of the number of qubits. Our model also exhibits high trainability and generalization performance, including the absence of barren plateaus. Numerical experiments on a noisy quantum data classification task demonstrate that the equivariant sp-QCNN can be trained and can generalize with fewer measurement resources than a conventional equivariant QCNN. Our results contribute to the advancement of practical quantum machine learning algorithms.
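The measurement-efficiency claim can be illustrated with a toy shot-noise simulation. This is a minimal sketch, not the paper's method or circuit. It assumes that each of the `n_measured` output qubits yields an independent ±1 outcome with the same probability, which the abstract does not state and which real sp-QCNN outputs need not satisfy. The function names, parameters, and numerical values are hypothetical.

```python
import random
import statistics


def estimate(p_plus, n_measured, shots, rng):
    """Estimate an expectation value from `shots` circuit runs.

    Each run contributes `n_measured` outcomes of +1 or -1.
    ASSUMPTION: outcomes are independent with P(+1) = p_plus, so the
    true expectation value is 2 * p_plus - 1. Correlations between
    qubits in a real device would change the variance.
    """
    samples = shots * n_measured
    total = 0
    for _ in range(samples):
        total += 1 if rng.random() < p_plus else -1
    return total / samples


def estimator_variance(p_plus, n_measured, shots, trials, seed=0):
    """Empirical variance of the estimator over repeated experiments."""
    rng = random.Random(seed)
    estimates = [estimate(p_plus, n_measured, shots, rng) for _ in range(trials)]
    return statistics.pvariance(estimates)


if __name__ == "__main__":
    # Same shot budget; only the number of qubits read out per shot differs.
    var_single = estimator_variance(0.7, n_measured=1, shots=100, trials=2000)
    var_parallel = estimator_variance(0.7, n_measured=8, shots=100, trials=2000)
    print("variance, 1 qubit per shot: ", var_single)
    print("variance, 8 qubits per shot:", var_parallel)
    print("ratio:", var_single / var_parallel)
```

Under the independence assumption, the variance ratio should come out close to the number of qubits read out per shot (8 here), which is the sense in which reading out all qubits in parallel can reduce the shots needed for a given precision.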
