Yasaman Boreshban

The Impact of Quantization on the Robustness of Transformer-based Text Classifiers

Mar 08, 2024

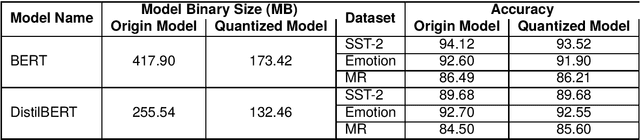

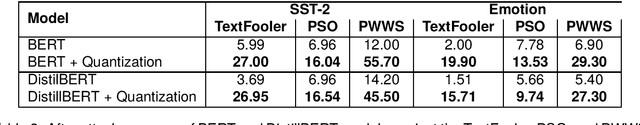

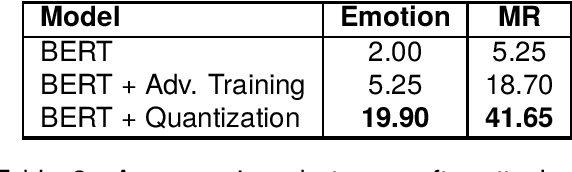

Abstract: Transformer-based models have made remarkable advancements in various NLP areas. Nevertheless, these models often exhibit vulnerabilities when confronted with adversarial attacks. In this paper, we explore the effect of quantization on the robustness of Transformer-based models. Quantization usually involves mapping a high-precision real number to a lower-precision value, with the aim of reducing the size of the model at hand. To the best of our knowledge, this work is the first to study the effect of quantization on the robustness of NLP models. In our experiments, we evaluate the impact of quantization on BERT and DistilBERT models in text classification using the SST-2, Emotion, and MR datasets. We also evaluate the performance of these models against the TextFooler, PWWS, and PSO adversarial attacks. Our findings show that quantization significantly improves the adversarial accuracy of the models, by 18.68% on average. Furthermore, we compare the effect of quantization with that of adversarial training on robustness. Our experiments indicate that quantization increases the robustness of the model by 18.80% on average compared to adversarial training, without imposing any extra computational overhead during training. Our results therefore highlight the effectiveness of quantization in improving the robustness of NLP models.
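
The mapping from high-precision values to lower-precision ones that the abstract describes can be illustrated with a minimal sketch. The abstract does not state which quantization scheme the paper uses, so the symmetric 8-bit scheme with a single scale per list of weights is an assumption here, and the names `quantize`, `dequantize`, and the sample `weights` are hypothetical.

```python
def quantize(values, num_bits=8):
    """Map floats to signed integers using one shared scale.

    Assumption: symmetric quantization, chosen only for illustration;
    the paper's actual scheme is not given in the abstract.
    """
    qmax = 2 ** (num_bits - 1) - 1          # 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer level and clamp to the representable range.
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Recover approximate floats from the integer codes."""
    return [x * scale for x in q]


weights = [0.42, -1.27, 0.05, 0.9]          # hypothetical weights
q, scale = quantize(weights)
restored = dequantize(q, scale)

# Largest rounding error introduced by the lower-precision representation.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, max_error)
```

Each weight is stored as one small integer plus a shared scale, which is where the reduction in model size comes from. The price is a rounding error of at most half a quantization step per value.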

Improving Question Answering Performance Using Knowledge Distillation and Active Learning

Sep 26, 2021

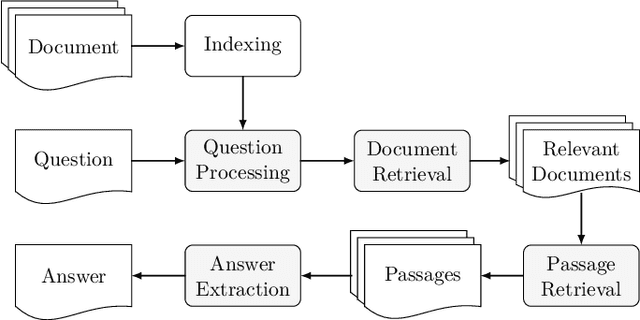

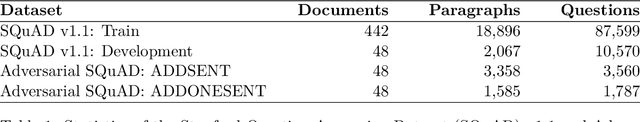

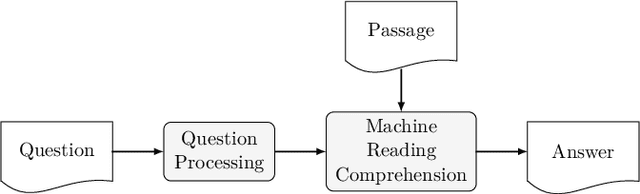

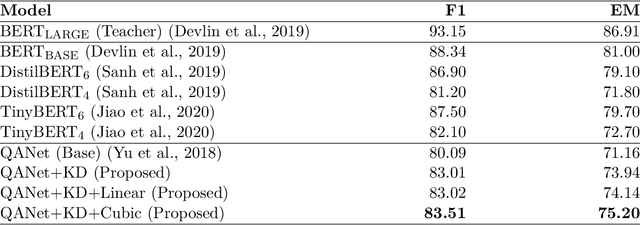

Abstract: Contemporary question answering (QA) systems, including transformer-based architectures, suffer from increasing computational and model complexity, which renders them inefficient for real-world applications with limited resources. Further, training or even fine-tuning such models requires a vast amount of labeled data, which is often not available for the task at hand. In this manuscript, we conduct a comprehensive analysis of these challenges and introduce suitable countermeasures. We propose a novel knowledge distillation (KD) approach to reduce the parameter and model complexity of a pre-trained BERT system, and we utilize multiple active learning (AL) strategies for a substantial reduction in annotation effort. In particular, we demonstrate that our model achieves the performance of a 6-layer TinyBERT and DistilBERT whilst using only 2% of their total parameters. Finally, by integrating our AL approaches into the BERT framework, we show that state-of-the-art results on the SQuAD dataset can be achieved using only 20% of the training data.