Yanan You

DenSe-AdViT: A novel Vision Transformer for Dense SAR Object Detection

Apr 18, 2025

Abstract: Vision Transformer (ViT) has achieved remarkable results in object detection for synthetic aperture radar (SAR) images, owing to its exceptional ability to extract global features. However, it struggles to extract multi-scale local features, which limits its performance in detecting small targets, especially when they are densely arranged. Therefore, we propose the Density-Sensitive Vision Transformer with Adaptive Tokens (DenSe-AdViT) for dense SAR target detection. We design a Density-Aware Module (DAM) as a preliminary component that generates a density tensor based on target distribution. It is guided by a meticulously crafted objective metric, enabling precise and effective capture of the spatial distribution and density of objects. To integrate the multi-scale information enhanced by convolutional neural networks (CNNs) with the global features derived from the Transformer, we propose the Density-Enhanced Fusion Module (DEFM). It effectively refines attention toward target-survival regions with the assistance of the density mask and the multi-source features. Notably, our DenSe-AdViT achieves 79.8% mAP on the RSDD dataset and 92.5% on the SIVED dataset, both of which feature a large number of densely distributed vehicle targets.

Gleo-Det: Deep Convolution Feature-Guided Detector with Local Entropy Optimization for Salient Points

Apr 27, 2022

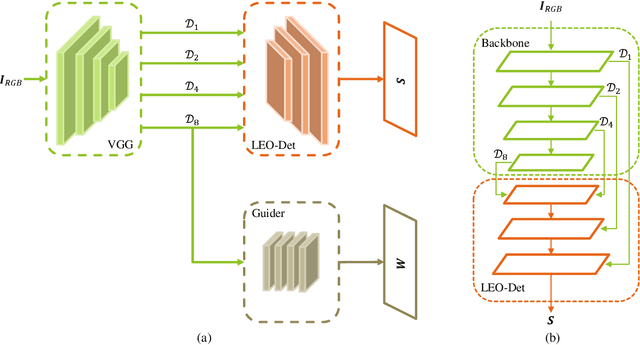

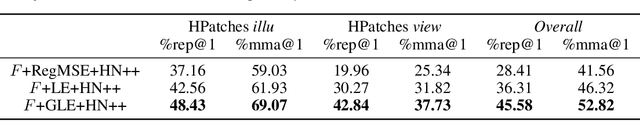

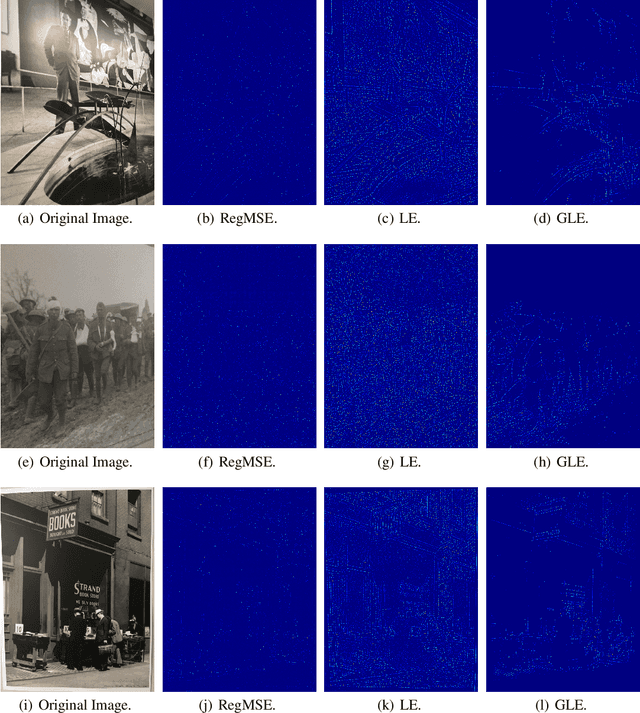

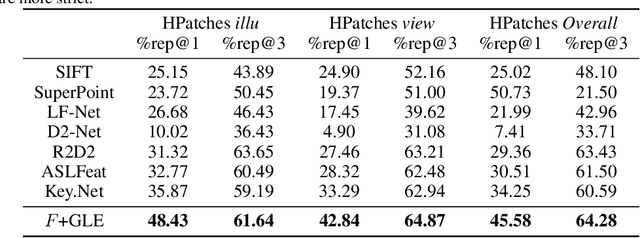

Abstract: Feature detection is an important procedure for image matching. Unsupervised feature detection methods have been the most studied approaches recently, including those that define loss functions based on a repeatability requirement and those that use descriptor matching to drive the optimization of the pipeline. For the former type, mean square error (MSE) is usually used, which cannot provide a strong constraint for training and can make the model prone to getting stuck in a collapsed solution. For the latter type, due to the down-sampling operation and the expansion of receptive fields, details can be lost in the local descriptors, making the constraint not fine enough. Considering the issues above, we propose to combine both ideas, which includes three aspects. 1) We propose to achieve a fine constraint based on the requirement of repeatability, and a coarse constraint with the guidance of deep convolution features. 2) To address the issue that optimization with MSE is limited, entropy-based cost functions are utilized, namely soft cross-entropy and self-information. 3) With the guidance of convolution features, we define the cost function from both positive and negative sides. Finally, we study the effect of each proposed modification, and experiments demonstrate that our method achieves competitive results compared with state-of-the-art approaches.
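As a rough illustration of the two entropy-based quantities named in point 2, the sketch below computes the textbook definitions of soft cross-entropy and self-information next to MSE for a small patch of detector scores. It is not the authors' implementation: the function names, the use of a softmax to turn scores into a distribution, and the example score values are all assumptions made for illustration only.

```python
import math

def softmax(scores):
    # Turn raw detector scores into a probability distribution (assumed normalization).
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def soft_cross_entropy(target_probs, predicted_probs, eps=1e-12):
    # H(t, p) = -sum_i t_i * log(p_i), with a soft (non one-hot) target t.
    return -sum(t * math.log(p + eps) for t, p in zip(target_probs, predicted_probs))

def self_information(prob, eps=1e-12):
    # I(x) = -log p(x): large when the event is assigned low probability.
    return -math.log(prob + eps)

def mse(a, b):
    # Mean square error, shown only for comparison.
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

# Hypothetical score patches for the same location seen in two views.
view_a = softmax([0.2, 2.5, 0.1, 0.3])
view_b = softmax([0.3, 0.4, 2.2, 0.1])

print("MSE:", mse(view_a, view_b))
print("soft cross-entropy:", soft_cross_entropy(view_a, view_b))
print("self-information of view_b peak:", self_information(max(view_b)))
```

In this toy setting the two views peak at different positions. Because probabilities lie in [0, 1], the MSE between them stays small, while the cross-entropy grows with the logarithm of the mismatch, which is one common intuition for why an entropy-based cost can give a stronger training signal.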
