Yamuna Prasad

An Effective Tag Assignment Approach for Billboard Advertisement

Sep 04, 2024

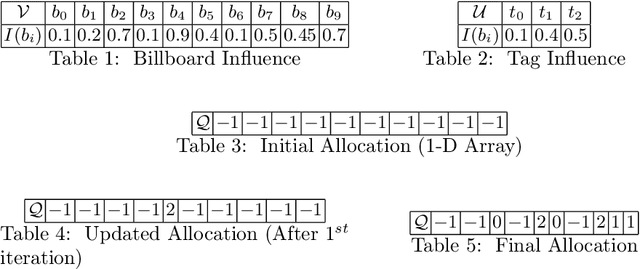

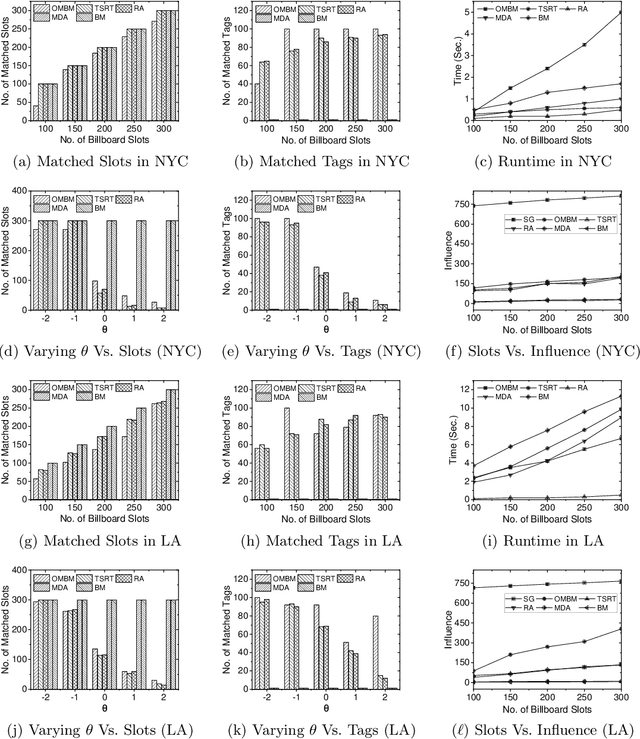

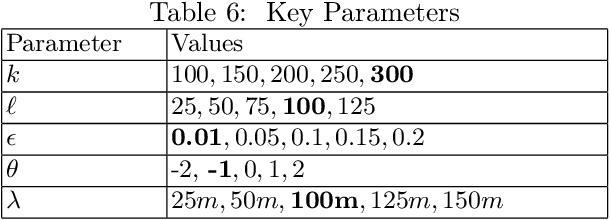

Abstract: Billboard advertisement has gained popularity due to its significant return on investment. To make this advertisement approach more effective, the relevant information about the product needs to reach the relevant set of people. This can be achieved if the relevant set of tags is mapped to the correct slots. Formally, we call this problem the Tag Assignment Problem in Billboard Advertisement. Given a trajectory database, a billboard database, and a set of selected billboard slots and tags, this problem asks for a mapping of the selected tags to the selected slots so that the influence is maximized. We model this as a variant of traditional bipartite matching called One-To-Many Bipartite Matching (OMBM). In traditional bipartite matching, a tag can be assigned to only one slot; in OMBM, a tag can be assigned to multiple slots, but a slot cannot be assigned multiple tags. We propose an iterative solution approach that incrementally allocates the tags to the slots. The proposed methodology is explained with an illustrative example. A complexity analysis of the proposed solution approach has also been conducted. The experimental results on real-world trajectory and billboard datasets support our claim on the effectiveness and efficiency of the proposed solution.
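The one-to-many structure described in this abstract can be illustrated with a small greedy sketch. This is not the paper's algorithm: the influence model (counting distinct users who see a slot showing a tag they are interested in) and the names `slot_viewers`, `tag_audience`, and `greedy_tag_assignment` are assumptions made only for illustration.

```python
def greedy_tag_assignment(slot_viewers, tag_audience):
    """Assign exactly one tag to each slot; a tag may be reused on many slots.

    slot_viewers: dict slot -> set of users who pass that slot (hypothetical data)
    tag_audience: dict tag  -> set of users interested in that tag (hypothetical data)
    Assumes tag_audience is non-empty.
    """
    assignment = {}                                # slot -> tag
    covered = {tag: set() for tag in tag_audience}  # users already influenced per tag
    unassigned = set(slot_viewers)

    while unassigned:
        best_pair, best_gain = None, -1
        # Scan all (slot, tag) pairs and keep the one with the largest marginal gain.
        for slot in sorted(unassigned):
            for tag in sorted(tag_audience):
                reached = slot_viewers[slot] & tag_audience[tag]
                gain = len(reached - covered[tag])
                if gain > best_gain:
                    best_pair, best_gain = (slot, tag), gain
        slot, tag = best_pair
        assignment[slot] = tag
        covered[tag] |= slot_viewers[slot] & tag_audience[tag]
        unassigned.remove(slot)   # each slot receives at most one tag

    influence = sum(len(users) for users in covered.values())
    return assignment, influence


slot_viewers = {"s1": {1, 2, 3}, "s2": {3, 4}, "s3": {5}}
tag_audience = {"sports": {1, 2, 5}, "food": {3, 4}}
assignment, influence = greedy_tag_assignment(slot_viewers, tag_audience)
print(assignment, influence)
```

In this toy instance the tag `sports` ends up on two slots while every slot carries a single tag, which is the asymmetry that distinguishes OMBM from ordinary bipartite matching.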

Minimizing Regret in Billboard Advertisement under Zonal Influence Constraint

Feb 02, 2024

Abstract: In a typical billboard advertisement technique, a number of digital billboards are owned by an influence provider, and many advertisers approach the influence provider for a specific number of views of their advertisement content on a payment basis. If the influence provider provides the demanded influence or more, he receives the full payment; otherwise, he receives a partial payment. From the influence provider's perspective, providing more or less than an advertiser's demanded influence is a loss. This is formalized as 'Regret', and naturally, the influence provider's goal is to allocate the billboard slots among the advertisers such that the total regret is minimized. In this paper, we study this problem as a discrete optimization problem and propose four solution approaches. The first one selects the billboard slots from the available ones in an incremental greedy manner, and we call this method the Budget Effective Greedy approach. In the second one, we introduce randomness into the first one by performing the marginal gain computation only for a sample of randomly chosen billboard slots. The remaining two approaches are further improvements over the second one. We analyze all the algorithms to understand their time and space complexity. We implement them with real-life trajectory and billboard datasets and conduct a number of experiments. It has been observed that the randomized budget effective greedy approach takes reasonable computational time while minimizing the regret.
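The notion of regret and the sampling idea can be sketched for a single advertiser. The abstract does not state the regret formula, so everything below is an assumption: the piecewise form of `regret`, the partial-payment factor `gamma`, additive slot influence, and the function names. It is a simplified illustration, not the Budget Effective Greedy method itself.

```python
import random


def regret(budget, demand, influence, gamma=0.5):
    """Assumed regret model for one advertiser (not taken from the abstract).

    Under-supply: only a fraction of the budget is paid, so the unpaid part is lost.
    Over-supply:  the surplus influence is given away without payment.
    """
    if influence < demand:
        return budget * (1.0 - gamma * influence / demand)
    return budget * (influence - demand) / demand


def randomized_greedy(slot_influence, budget, demand, sample_size, seed=0):
    """Add slots one by one, evaluating only a random sample of candidates each round."""
    rng = random.Random(seed)
    remaining = dict(slot_influence)   # slot -> influence (assumed additive)
    chosen, total = [], 0
    while remaining:
        k = min(sample_size, len(remaining))
        candidates = rng.sample(sorted(remaining), k)
        # Pick the sampled slot that leads to the lowest regret.
        best = min(candidates, key=lambda s: regret(budget, demand, total + remaining[s]))
        if regret(budget, demand, total + remaining[best]) >= regret(budget, demand, total):
            break                      # no sampled slot lowers the regret any further
        chosen.append(best)
        total += remaining.pop(best)
    return chosen, total, regret(budget, demand, total)


slots = {"a": 4, "b": 6, "c": 3}
print(randomized_greedy(slots, budget=100, demand=10, sample_size=3))
```

With `sample_size` equal to the number of slots, the procedure reduces to a plain greedy; a smaller sample trades solution quality for fewer marginal-gain evaluations per round.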

Towards Regret Free Slot Allocation in Billboard Advertisement

Jan 29, 2024

Abstract: Creating and maximizing influence among customers is one of the central goals of an advertiser, and hence remains an active area of research. In this advertisement technique, advertisers approach an influence provider for a specific number of views of their content on a payment basis. If the influence provider can provide the required number of views or more, he receives the full payment; otherwise, he receives a partial payment. From the influence provider's perspective, offering more or fewer views than required is a loss. This is formalized as 'Regret', and naturally, the influence provider's goal is to minimize this quantity. In this paper, we solve this problem in the context of billboard advertisement and pose it as a discrete optimization problem. We propose four efficient solution approaches for this problem and analyze them to understand their time and space complexity. We implement all the solution methodologies with real-life datasets and compare the obtained results with the existing solution approaches from the literature. We observe that the proposed solutions lead to less regret while taking less computational time.

Max-Margin Feature Selection

Jun 14, 2016

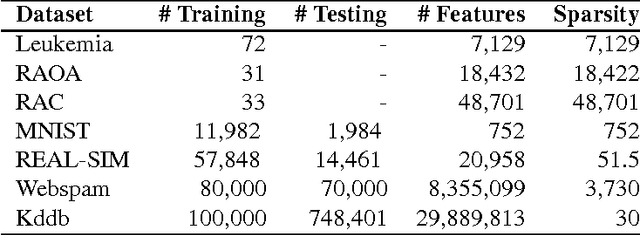

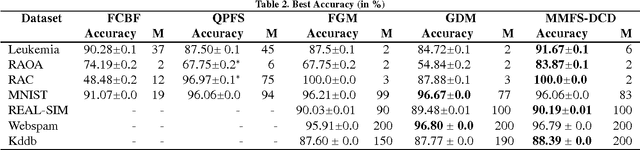

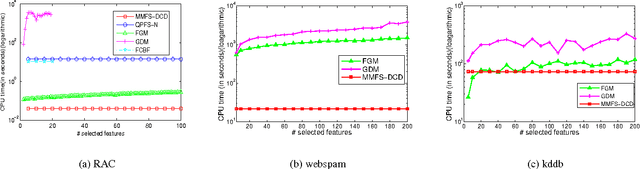

Abstract: Many machine learning applications, such as those in vision, biology and social networking, deal with data in high dimensions. Feature selection is typically employed to select a subset of features which improves generalization accuracy as well as reduces the computational cost of learning the model. One of the criteria used for feature selection is to jointly minimize the redundancy and maximize the relevance of the selected features. In this paper, we formulate the task of feature selection as a one-class SVM problem in a space where features correspond to the data points and instances correspond to the dimensions. The goal is to look for a representative subset of the features (support vectors) which describes the boundary for the region where the set of the features (data points) exists. This leads to a joint optimization of relevance and redundancy in a principled max-margin framework. Additionally, our formulation enables us to leverage existing techniques for optimizing the SVM objective, resulting in highly computationally efficient solutions for the task of feature selection. Specifically, we employ the dual coordinate descent algorithm (Hsieh et al., 2008), originally proposed for SVMs, for our formulation. We use a sparse representation to deal with data in very high dimensions. Experiments on seven publicly available benchmark datasets from a variety of domains show that our approach results in orders of magnitude faster solutions while retaining the same level of accuracy compared to state-of-the-art feature selection techniques.

Integrating K-means with Quadratic Programming Feature Selection

Aug 11, 2015

Abstract: Several data mining problems are characterized by data in high dimensions. One of the popular ways to reduce the dimensionality of the data is to perform feature selection, i.e., select a subset of relevant and non-redundant features. Recently, Quadratic Programming Feature Selection (QPFS) has been proposed, which formulates the feature selection problem as a quadratic program. It has been shown to outperform many of the existing feature selection methods for a variety of applications. Though better than many existing approaches, the running time complexity of QPFS is cubic in the number of features, which can be quite computationally expensive even for moderately sized datasets. In this paper we propose a novel method for feature selection by integrating k-means clustering with QPFS. The basic variant of our approach runs k-means to bring down the number of features which need to be passed on to QPFS. We then enhance this idea by gradually refining the feature space from a very coarse clustering to a fine-grained one, interleaving steps of QPFS with k-means clustering. Every step of QPFS helps in identifying the clusters of irrelevant features (which can then be thrown away), whereas every step of k-means further refines the clusters which are potentially relevant. We show that our iterative refinement of clusters is guaranteed to converge. We provide bounds on the number of distance computations involved in the k-means algorithm. Further, each QPFS run is now cubic in the number of clusters, which can be much smaller than the actual number of features. Experiments on eight publicly available datasets show that our approach gives significant computational gains (both in time and memory) over standard QPFS as well as other state-of-the-art feature selection methods, while also improving the overall accuracy.
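The reduction step of the basic variant, clustering features so that fewer candidates reach QPFS, can be sketched as follows. The abstract does not say how a cluster is summarized, so picking the feature closest to each centroid is an assumption, as are the deterministic initialization and the function names. The QPFS solve itself is omitted.

```python
def sqdist(u, v):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def kmeans_features(columns, k, iters=20):
    """Cluster feature vectors (one list of values per feature) with plain k-means.

    Initialization uses the first k features, chosen only to keep the sketch deterministic.
    """
    centers = [list(c) for c in columns[:k]]
    labels = None
    for _ in range(iters):
        new_labels = [min(range(k), key=lambda j: sqdist(col, centers[j]))
                      for col in columns]
        if new_labels == labels:
            break                          # assignments are stable
        labels = new_labels
        for j in range(k):
            members = [columns[i] for i, lab in enumerate(labels) if lab == j]
            if members:                    # keep the old center if a cluster is empty
                centers[j] = [sum(vals) / len(members) for vals in zip(*members)]
    return labels, centers


def representatives(columns, labels, centers):
    """Return, per cluster, the index of the feature nearest to the centroid (assumed rule)."""
    best = {}
    for i, lab in enumerate(labels):
        d = sqdist(columns[i], centers[lab])
        if lab not in best or d < best[lab][0]:
            best[lab] = (d, i)
    return sorted(i for _, i in best.values())


# Six features measured on three instances; two obvious groups of similar features.
columns = [[1, 2, 3], [9, 8, 7], [1.1, 2.1, 2.9],
           [9.2, 8.1, 7.1], [1, 2, 3.2], [9.1, 8.0, 7.0]]
labels, centers = kmeans_features(columns, k=2)
print(labels, representatives(columns, labels, centers))
```

Only the representative features would then be passed to a QPFS solver, so the cubic cost applies to the number of clusters rather than to the full feature count.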
