Xuetao Wang

DoseDiff: Distance-aware Diffusion Model for Dose Prediction in Radiotherapy

Jun 28, 2023

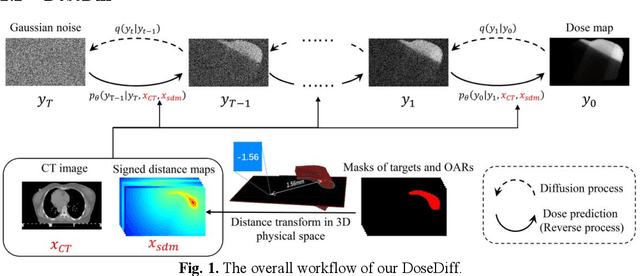

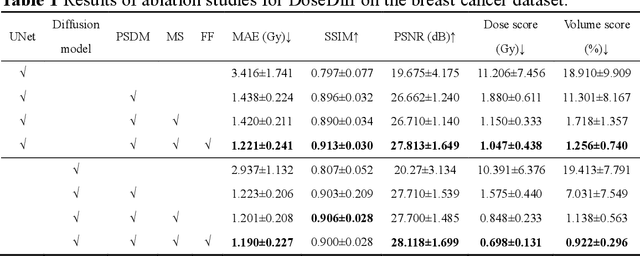

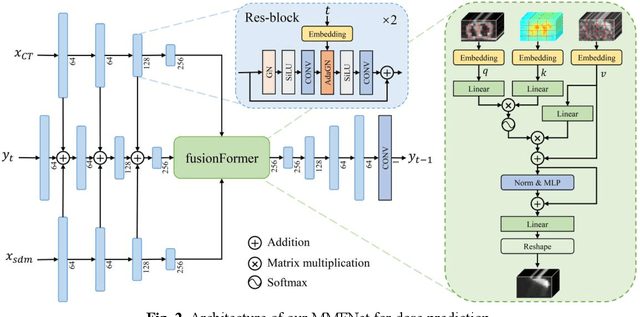

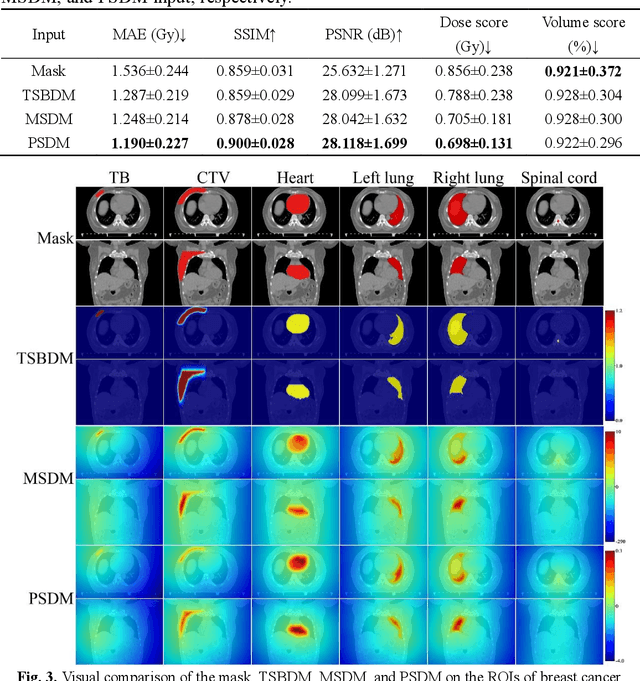

Abstract: Treatment planning is a critical component of the radiotherapy workflow, typically carried out by a medical physicist through a time-consuming trial-and-error process. Previous studies have proposed knowledge-based or deep learning-based methods for predicting dose distribution maps to help medical physicists plan treatments more efficiently. However, these dose prediction methods usually fail to make effective use of the distance information between surrounding tissues and targets or organs-at-risk (OARs). Moreover, they poorly preserve the distribution characteristics of ray paths in the predicted dose distribution maps, which loses information that is valuable to medical physicists. In this paper, we propose a distance-aware diffusion model (DoseDiff) for precise prediction of dose distribution. We define dose prediction as a sequence of denoising steps, wherein the predicted dose distribution map is generated conditioned on the CT image and signed distance maps (SDMs). The SDMs are obtained by applying a distance transformation to the masks of targets or OARs, and provide the distance from each pixel in the image to the outline of the targets or OARs. In addition, we propose a multi-encoder and multi-scale fusion network (MMFNet) that incorporates multi-scale fusion and a transformer-based fusion module to enhance information fusion between the CT image and SDMs at the feature level. Our model was evaluated on two datasets collected from patients with breast cancer and nasopharyngeal cancer, respectively. The results demonstrate that DoseDiff outperforms state-of-the-art dose prediction methods in both quantitative metrics and visual quality.
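To make the SDM construction concrete, the following is a minimal sketch of how a signed distance map could be computed from a binary target or OAR mask with SciPy's Euclidean distance transform. The abstract does not specify the sign convention, any normalization, or whether the transform is applied in 2D or 3D, so the function name `signed_distance_map`, the "negative inside, positive outside" convention, and the handling of empty or full masks are assumptions made for illustration, not the authors' implementation.

```python
import numpy as np
from scipy.ndimage import distance_transform_edt


def signed_distance_map(mask: np.ndarray) -> np.ndarray:
    """Signed Euclidean distance from each pixel to the structure outline.

    Assumed convention (not stated in the abstract): negative inside the
    structure, positive outside it. Distances are in pixel units.
    """
    mask = mask.astype(bool)
    # Assumption: a mask with no structure (or no background) has no outline,
    # so an all-zero map is returned.
    if not mask.any() or mask.all():
        return np.zeros(mask.shape, dtype=np.float32)
    # Distance of every background pixel to the nearest structure pixel.
    outside = distance_transform_edt(~mask)
    # Distance of every structure pixel to the nearest background pixel.
    inside = distance_transform_edt(mask)
    return (outside - inside).astype(np.float32)


# Toy example: a single-pixel "structure" at the center of a 7x7 slice.
toy_mask = np.zeros((7, 7), dtype=np.uint8)
toy_mask[3, 3] = 1
toy_sdm = signed_distance_map(toy_mask)
```

In a conditioning pipeline, one such map per target or OAR would typically be stacked along the channel axis next to the CT image. How DoseDiff actually scales and combines them is not described in the abstract.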
