Xuejun Wen

Learning on Abstract Domains: A New Approach for Verifiable Guarantee in Reinforcement Learning

Jun 13, 2021

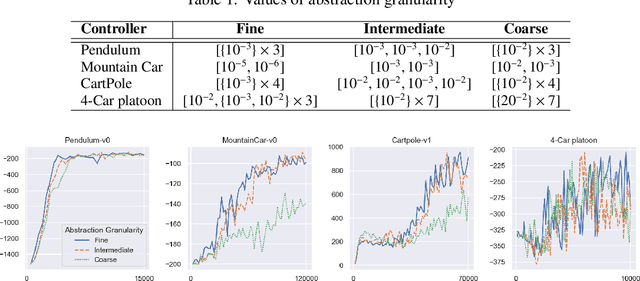

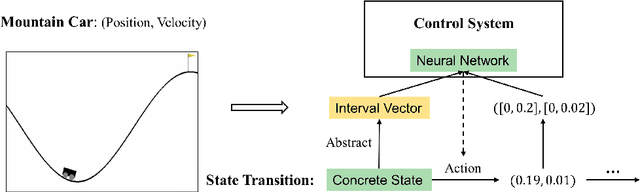

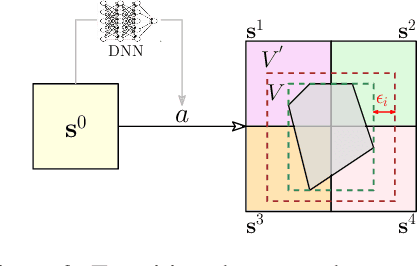

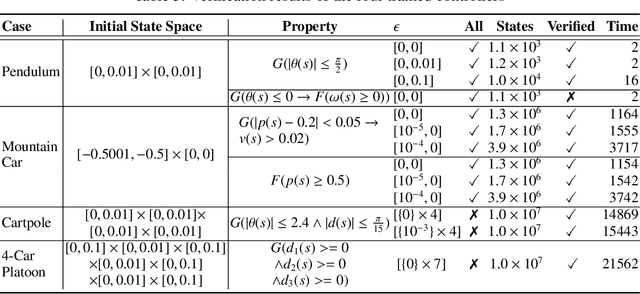

Abstract:Formally verifying Deep Reinforcement Learning (DRL) systems is a challenging task due to the dynamic continuity of system behaviors and the black-box feature of embedded neural networks. In this paper, we propose a novel abstraction-based approach to train DRL systems on finite abstract domains instead of concrete system states. It yields neural networks whose input states are finite, making hosting DRL systems directly verifiable using model checking techniques. Our approach is orthogonal to existing DRL algorithms and off-the-shelf model checkers. We implement a resulting prototype training and verification framework and conduct extensive experiments on the state-of-the-art benchmark. The results show that the systems trained in our approach can be verified more efficiently while they retain comparable performance against those that are trained without abstraction.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge