Xuan-Qui Pham

An Efficient Video Streaming Architecture with QoS Control for Virtual Desktop Infrastructure in Cloud Computing

Mar 11, 2022

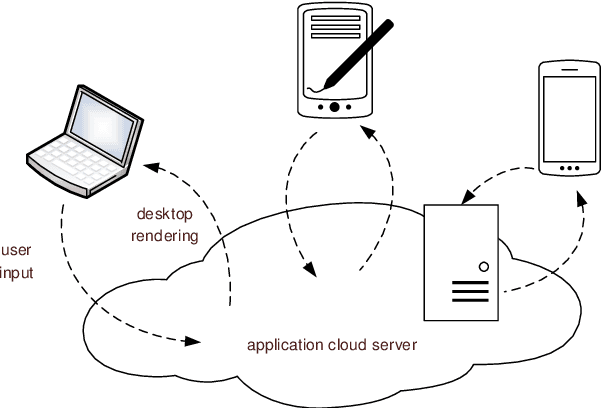

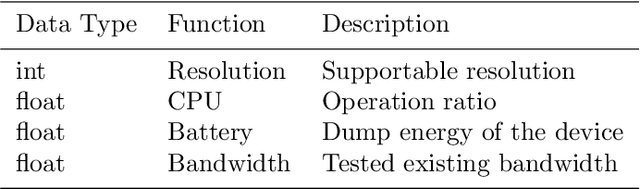

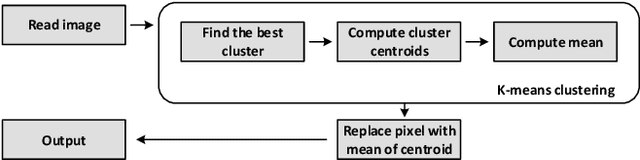

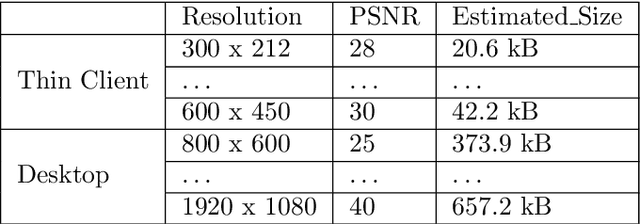

Abstract: In virtual desktop infrastructure (VDI) environments, the remote display protocol is responsible for transmitting video data from a desktop hosted in a data center to the endpoint. The protocol must ensure a high level of client-perceived end-to-end quality of service (QoS) under heavy workload conditions. Each remote display protocol behaves differently depending on the network and on the applications being delivered. In healthcare applications, doctors and nurses can use mobile devices directly to monitor patients. Moreover, the ability to run tasks that consume large amounts of CPU and other resources applies to a variety of applications, including research and cloud gaming. Such computer games and complex processes run on powerful cloud servers, and the screen contents are transmitted to the client. To enable such applications, remote display technology requires further enhancements to meet more stringent requirements on bandwidth and QoS, and to allow real-time operation. In this paper, we present an architecture that includes flexible QoS control to improve the user quality of experience (QoE). The QoS control is based on linear regression modeling using historical network data. Additionally, the architecture includes a novel compression algorithm for 2D images, designed to guarantee the best image quality and to reduce video delay; this algorithm is based on k-means clustering and can satisfy the requirements of real-time onboard processing. Through simulations with a real-world dataset collected by the MIT Computer Science and Artificial Intelligence Lab, we present experimental results and explain the performance of the QoS system.
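The abstract does not describe the compression algorithm beyond saying it is based on k-means clustering. As a rough illustration of how k-means can compress a 2D image at all, the sketch below clusters pixel colors into a small palette and stores one palette index per pixel. It is a generic color-quantization example, not the paper's method; the names `init_centroids` and `kmeans_quantize`, the evenly spaced initialization, and the fixed iteration count are all assumptions made for illustration.

```python
def init_centroids(pixels, k):
    """Pick k starting colors, evenly spaced over the sorted unique colors.

    Deterministic initialization is an illustrative choice, not part of
    any published algorithm.
    """
    unique = sorted(set(pixels))
    if k >= len(unique):
        chosen = unique
    elif k == 1:
        chosen = [unique[0]]
    else:
        step = (len(unique) - 1) / (k - 1)
        chosen = [unique[round(i * step)] for i in range(k)]
    return [tuple(float(c) for c in p) for p in chosen]


def kmeans_quantize(pixels, k, iters=10):
    """Cluster (r, g, b) tuples into at most k colors.

    Returns (palette, labels): labels[i] is the palette index of pixels[i].
    """
    centroids = init_centroids(pixels, k)
    labels = [0] * len(pixels)
    for _ in range(iters):
        # Assignment step: attach each pixel to its nearest centroid.
        for i, p in enumerate(pixels):
            labels[i] = min(
                range(len(centroids)),
                key=lambda j: sum((a - b) ** 2 for a, b in zip(p, centroids[j])),
            )
        # Update step: move each centroid to the mean of its members.
        for j in range(len(centroids)):
            members = [pixels[i] for i, lab in enumerate(labels) if lab == j]
            if members:
                centroids[j] = tuple(sum(ch) / len(members) for ch in zip(*members))
    palette = [tuple(round(c) for c in cen) for cen in centroids]
    return palette, labels


# Hypothetical 4-pixel "image": two reddish and two bluish pixels.
pixels = [(250, 0, 0), (255, 5, 0), (0, 0, 250), (5, 0, 255)]
palette, labels = kmeans_quantize(pixels, k=2)
reconstructed = [palette[lab] for lab in labels]
```

With a palette of k colors, each pixel can be stored as a small index instead of a full RGB triple, which is where the size reduction comes from; the trade-off is that `reconstructed` only approximates the original colors.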

Artificial Intelligence for the Metaverse: A Survey

Feb 15, 2022

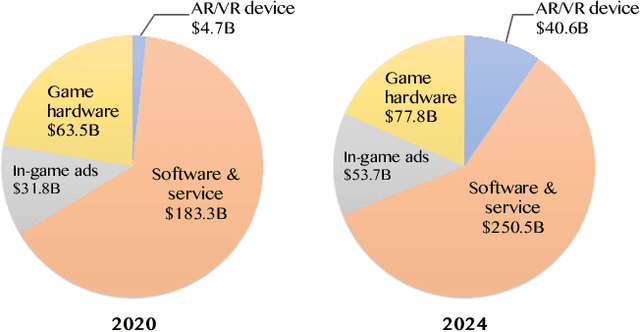

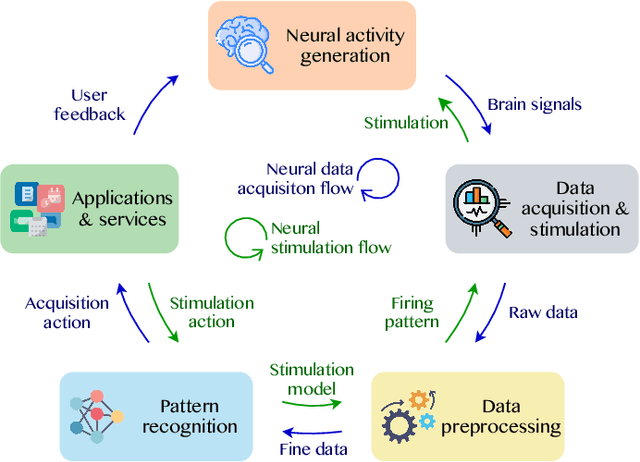

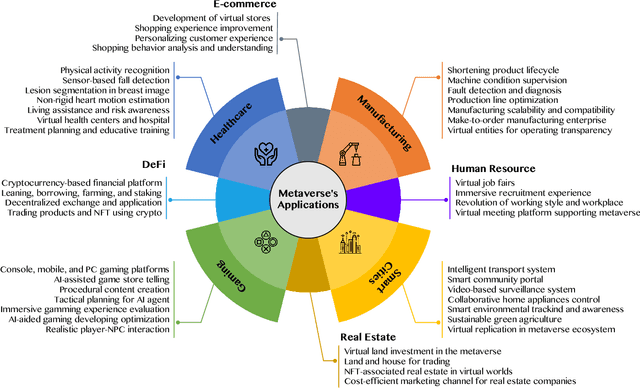

Abstract: Along with the massive growth of the Internet from the 1990s until now, various innovative technologies have been created to bring users breathtaking experiences with more virtual interactions in cyberspace. Many virtual environments with thousands of services and applications, from social networks to virtual gaming worlds, have been developed with immersive experience and digital transformation, but most remain disconnected rather than integrated into a single platform. In this context, the metaverse, a term formed by combining meta and universe, has been introduced as a shared virtual world fueled by many emerging technologies, such as fifth-generation networks and beyond, virtual reality, and artificial intelligence (AI). Among these technologies, AI has proven important for processing big data to enhance immersive experience and to enable human-like intelligence in virtual agents. In this survey, we explore the role of AI in the foundation and development of the metaverse. We first present preliminaries on AI, including machine learning algorithms and deep learning architectures, and its role in the metaverse. We then provide a comprehensive investigation of AI-based methods in six technical aspects with potential for the metaverse: natural language processing, machine vision, blockchain, networking, digital twin, and neural interface. Subsequently, several AI-aided applications, such as healthcare, manufacturing, smart cities, and gaming, are studied for deployment in virtual worlds. Finally, we summarize the key contributions of this survey and outline future research directions in AI for the metaverse.
