Xuan Nguyen

Prediction of Model Generalizability for Unseen Data: Methodology and Case Study in Brain Metastases Detection in T1-Weighted Contrast-Enhanced 3D MRI

Dec 15, 2022

Abstract: A medical AI system's generalizability describes the continuity of its performance acquired from varying geographic, historical, and methodologic settings. Previous literature on this topic has mostly focused on "how" to achieve high generalizability with limited success. Instead, we aim to understand "when" the generalizability is achieved: Our study presents a medical AI system that could estimate its generalizability status for unseen data on-the-fly. We introduce a latent space mapping (LSM) approach utilizing Fréchet distance loss to force the underlying training data distribution into a multivariate normal distribution. During the deployment, a given test data's LSM distribution is processed to detect its deviation from the forced distribution; hence, the AI system could predict its generalizability status for any previously unseen data set. If low model generalizability is detected, then the user is informed by a warning message. While the approach is applicable for most classification deep neural networks, we demonstrate its application to a brain metastases (BM) detector for T1-weighted contrast-enhanced (T1c) 3D MRI. The BM detection model was trained using 175 T1c studies acquired internally, and tested using (1) 42 internally and (2) 72 externally acquired exams from the publicly distributed Brain Mets dataset provided by the Stanford University School of Medicine. Generalizability scores, false positive (FP) rates, and sensitivities of the BM detector were computed for the test datasets. The model predicted its generalizability to be low for 31% of the testing data, where it produced (1) ~13.5 FPs at 76.1% BM detection sensitivity for the low and (2) ~10.5 FPs at 89.2% BM detection sensitivity for the high generalizability groups respectively. The results suggest that the proposed formulation enables a model to predict its generalizability for unseen data.
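To make the deviation check described in the abstract concrete, the following is a minimal sketch, not the paper's implementation. It assumes the forced distribution is a standard multivariate normal, fits a Gaussian to a batch of latent vectors, and computes the closed-form squared Fréchet distance between the two. The function names and the `threshold` parameter are hypothetical, chosen only for illustration.

```python
import numpy as np


def frechet_to_standard_normal(latents):
    """Squared Frechet distance between a Gaussian fitted to `latents`
    (shape: n_samples x n_dims) and the standard normal N(0, I).

    Closed form: ||mu||^2 + Tr(Sigma + I - 2 * Sigma^(1/2)).
    """
    latents = np.asarray(latents, dtype=float)
    mu = latents.mean(axis=0)
    sigma = np.atleast_2d(np.cov(latents, rowvar=False))
    # Sigma is symmetric, so its trace and the trace of its square root
    # follow directly from its eigenvalues (clipped to guard against
    # tiny negative values caused by round-off).
    eigvals = np.clip(np.linalg.eigvalsh(sigma), 0.0, None)
    n_dims = latents.shape[1]
    return float(mu @ mu + eigvals.sum() + n_dims - 2.0 * np.sqrt(eigvals).sum())


def low_generalizability(latents, threshold):
    """Hypothetical warning rule: flag data whose latent distribution
    lies farther than `threshold` from the assumed target distribution."""
    return frechet_to_standard_normal(latents) > threshold
```

With this sketch, latent vectors drawn from N(0, I) yield a distance near zero, while shifted or rescaled latents yield a larger value. How a threshold would be chosen in practice is not stated in the abstract and is left open here.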

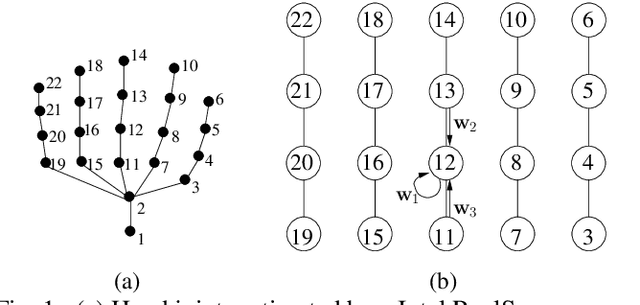

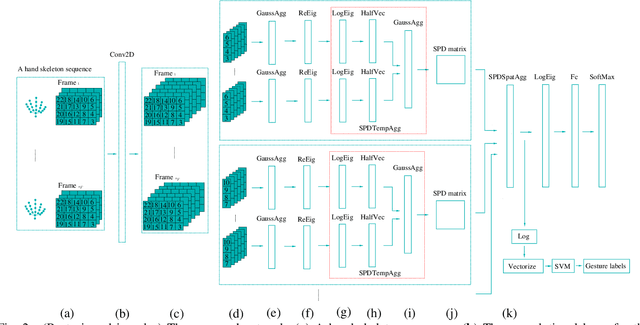

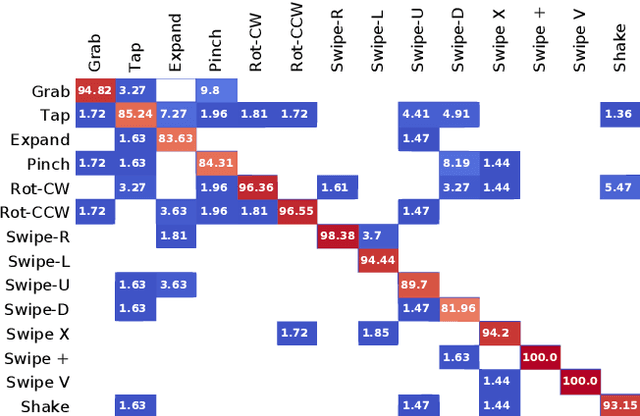

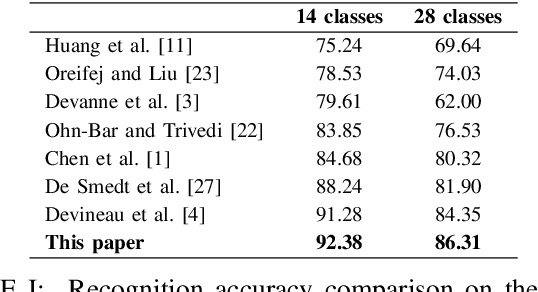

Skeleton-Based Hand Gesture Recognition by Learning SPD Matrices with Neural Networks

May 20, 2019

Abstract: In this paper, we propose a new hand gesture recognition method based on skeletal data by learning SPD matrices with neural networks. We model the hand skeleton as a graph and introduce a neural network for SPD matrix learning, taking as input the 3D coordinates of hand joints. The proposed network is based on two newly designed layers that transform a set of SPD matrices into an SPD matrix. For gesture recognition, we train a linear SVM classifier using features extracted from our network. Experimental results on a challenging dataset (Dynamic Hand Gesture dataset from the SHREC 2017 3D Shape Retrieval Contest) show that the proposed method outperforms state-of-the-art methods.
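As a rough illustration of what an SPD (symmetric positive definite) representation of skeletal data can look like, the sketch below builds a regularized covariance matrix from a sequence of 3D joint coordinates and flattens it with a log-Euclidean map into a vector that a linear classifier could consume. This is a generic construction, not the two layers proposed in the paper; the function names, the `eps` regularizer, and the array layout are assumptions made for the example.

```python
import numpy as np


def spd_from_joints(frames, eps=1e-4):
    """Build an SPD matrix from joint coordinates.

    `frames` has shape (n_frames, n_joints, 3). Each frame is flattened
    to a feature vector, and the covariance over frames is regularized
    with eps * I so that every eigenvalue is strictly positive.
    """
    frames = np.asarray(frames, dtype=float)
    x = frames.reshape(frames.shape[0], -1)
    cov = np.atleast_2d(np.cov(x, rowvar=False))
    return cov + eps * np.eye(cov.shape[0])


def log_euclidean_vector(spd):
    """Map an SPD matrix to a flat feature vector: take the matrix
    logarithm via eigendecomposition, then keep the upper triangle."""
    eigvals, eigvecs = np.linalg.eigh(spd)
    log_spd = (eigvecs * np.log(eigvals)) @ eigvecs.T
    upper = np.triu_indices(spd.shape[0])
    return log_spd[upper]
```

The resulting vectors could then be passed to a linear SVM, which is the classifier type the abstract names; the features used in the paper come from its learned network rather than from a fixed covariance descriptor like this one.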
