Xose Luis Dean-Ben

Deep learning facilitates fully automated brain image registration of optoacoustic tomography and magnetic resonance imaging

Sep 04, 2021

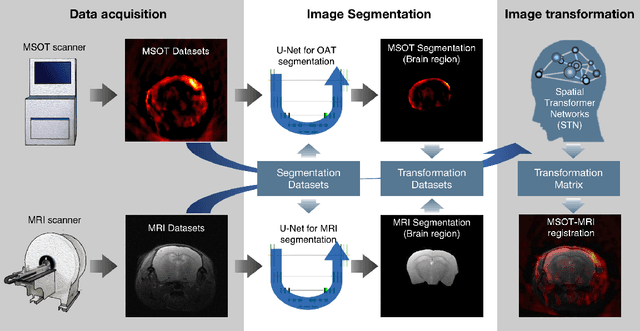

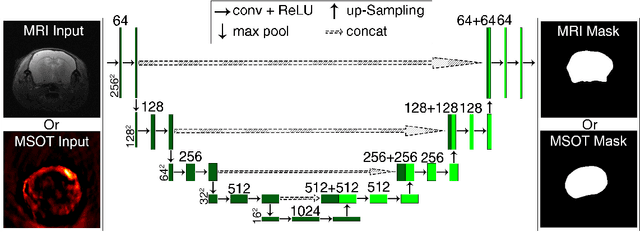

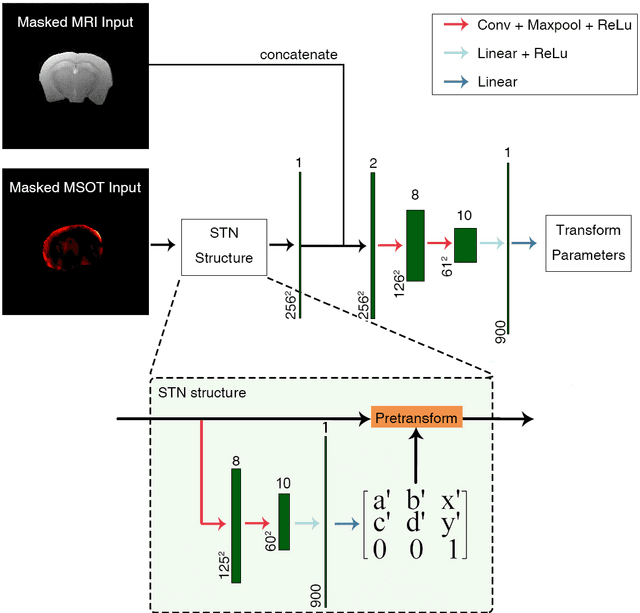

Abstract: Multi-spectral optoacoustic tomography (MSOT) is an emerging optical imaging method that provides multiplexed molecular and functional information from the rodent brain. It can be greatly augmented by magnetic resonance imaging (MRI), which offers excellent soft-tissue contrast and high-resolution brain anatomy. Nevertheless, registration of multi-modal images remains challenging, chiefly because the two modalities render entirely different image contrast. Previously reported registration algorithms mostly relied on manual, user-dependent brain segmentation, which compromised data interpretation and accurate quantification. Here we propose a fully automated registration method for MSOT-MRI multimodal imaging empowered by deep learning. The automated workflow includes neural network-based image segmentation to generate suitable masks, which are subsequently registered using an additional neural network. Performance of the algorithm is showcased with datasets acquired by cross-sectional MSOT and high-field MRI preclinical scanners. The automated registration method is further validated against manual and semi-automated registration, demonstrating its robustness and accuracy.
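
The idea of registering segmentation masks rather than raw images can be illustrated with a minimal sketch. This is not the authors' method: the abstract does not specify the networks, so the masks here are synthetic, and a classical centroid alignment stands in for the registration network. The function names (`dice`, `centroid`, `register_by_centroid`) are hypothetical, and the Dice overlap is used only as a generic way to score mask agreement.

```python
import numpy as np

def dice(a, b):
    """Dice overlap between two boolean masks (1.0 means identical)."""
    a, b = a.astype(bool), b.astype(bool)
    total = a.sum() + b.sum()
    return 2.0 * np.logical_and(a, b).sum() / total if total else 1.0

def centroid(mask):
    """Centre of mass of a boolean mask as (row, col)."""
    return np.argwhere(mask).mean(axis=0)

def register_by_centroid(moving, fixed):
    """Translate `moving` by whole pixels so its centroid matches `fixed`.

    Stand-in for a learned registration step (assumption): translation only,
    no rotation or scaling.
    """
    delta = np.round(centroid(fixed) - centroid(moving))
    shift = tuple(int(s) for s in delta)
    # np.roll wraps around the edges; harmless here because the toy masks
    # stay well away from the image border.
    return np.roll(moving, shift, axis=(0, 1)), shift

# Synthetic "brain masks": the same rectangle, offset between modalities.
fixed = np.zeros((64, 64), dtype=bool)
fixed[20:40, 25:45] = True
moving = np.roll(fixed, (5, -7), axis=(0, 1))

registered, shift = register_by_centroid(moving, fixed)
before = dice(moving, fixed)
after = dice(registered, fixed)
```

Working on masks sidesteps the contrast mismatch between modalities, since both masks describe the same anatomy in the same binary form. A real pipeline would also need rotation and scaling, and would obtain the masks from the segmentation networks rather than from synthetic data.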
