Xiyue Zhu

Hierarchical Diffusion Framework for Pseudo-Healthy Brain MRI Inpainting with Enhanced 3D Consistency

Jul 23, 2025

Abstract: Pseudo-healthy image inpainting is an essential preprocessing step for analyzing pathological brain MRI scans. Most current inpainting methods favor slice-wise 2D models for their high in-plane fidelity, but their independence across slices produces discontinuities in the volume. Fully 3D models alleviate this issue, but their high model capacity demands extensive training data for reliable, high-fidelity synthesis, which is often impractical in medical settings. We address these limitations with a hierarchical diffusion framework that replaces direct 3D modeling with two perpendicular coarse-to-fine 2D stages. An axial diffusion model first yields a coarse, globally consistent inpainting; a coronal diffusion model then refines anatomical details. By combining perpendicular spatial views with adaptive resampling, our method balances data efficiency and volumetric consistency. Our experiments show that our approach outperforms state-of-the-art baselines in both realism and volumetric consistency, making it a promising solution for pseudo-healthy image inpainting. Code is available at https://github.com/dou0000/3dMRI-Consistent-Inpaint.

Diff-Ensembler: Learning to Ensemble 2D Diffusion Models for Volume-to-Volume Medical Image Translation

Jan 13, 2025

Abstract: Despite success in volume-to-volume translations in medical images, most existing models struggle to effectively capture the inherent volumetric distribution using 3D representations. The current state-of-the-art approach combines multiple 2D-based networks through weighted averaging, thereby neglecting the 3D spatial structures. Directly training 3D models in medical imaging presents significant challenges due to high computational demands and the need for large-scale datasets. To address these challenges, we introduce Diff-Ensembler, a novel hybrid 2D-3D model for efficient and effective volumetric translations by ensembling perpendicularly trained 2D diffusion models with a 3D network in each diffusion step. Moreover, our model can naturally be used to ensemble diffusion models conditioned on different modalities, allowing flexible and accurate fusion of input conditions. Extensive experiments demonstrate that Diff-Ensembler attains superior accuracy and volumetric realism in 3D medical image super-resolution and modality translation. We further demonstrate the strength of our model's volumetric realism using tumor segmentation as a downstream task.

MapPrior: Bird's-Eye View Map Layout Estimation with Generative Models

Aug 24, 2023

Abstract: Despite tremendous advancements in bird's-eye view (BEV) perception, existing models fall short in generating realistic and coherent semantic map layouts, and they fail to account for uncertainties arising from partial sensor information (such as occlusion or limited coverage). In this work, we introduce MapPrior, a novel BEV perception framework that combines a traditional discriminative BEV perception model with a learned generative model for semantic map layouts. Our MapPrior delivers predictions with better accuracy, realism, and uncertainty awareness. We evaluate our model on the large-scale nuScenes benchmark. At the time of submission, MapPrior outperforms the strongest competing method, with significantly improved MMD and ECE scores in camera- and LiDAR-based BEV perception.

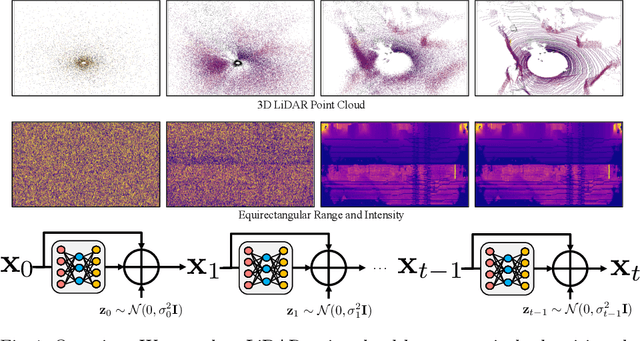

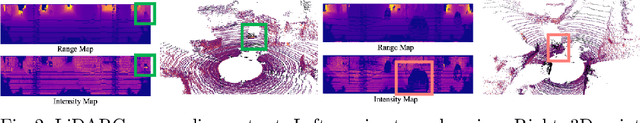

Learning to Generate Realistic LiDAR Point Clouds

Sep 08, 2022

Abstract: We present LiDARGen, a novel, effective, and controllable generative model that produces realistic LiDAR point cloud sensory readings. Our method leverages the powerful score-matching energy-based model and formulates the point cloud generation process as a stochastic denoising process in the equirectangular view. This model allows us to sample diverse and high-quality point cloud samples with guaranteed physical feasibility and controllability. We validate the effectiveness of our method on the challenging KITTI-360 and nuScenes datasets. The quantitative and qualitative results show that our approach produces more realistic samples than other generative models. Furthermore, LiDARGen can sample point clouds conditioned on inputs without retraining. We demonstrate that our proposed generative model could be directly used to densify LiDAR point clouds. Our code is available at: https://www.zyrianov.org/lidargen/
