Xintao Xiang

Realistic Counterfactual Explanations by Learned Relations

Feb 15, 2022

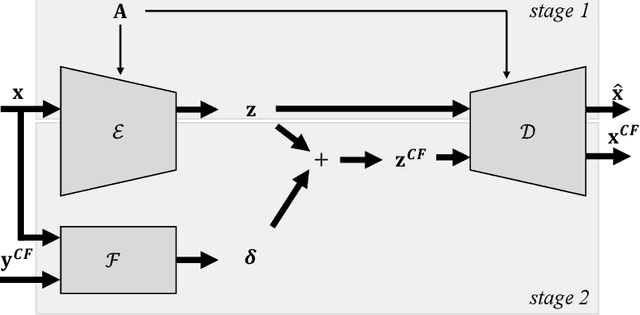

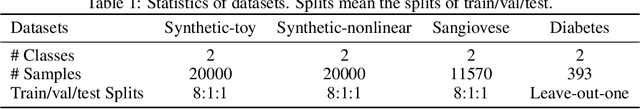

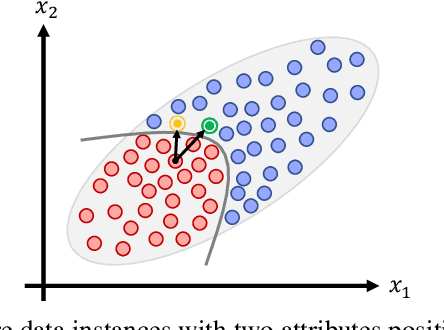

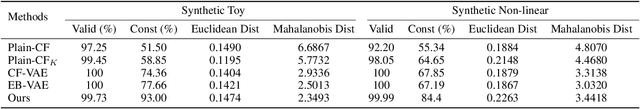

Abstract: Many existing methods for counterfactual explanation ignore the intrinsic relationships between data attributes and thus fail to generate realistic counterfactuals. Moreover, the existing methods that do account for these relationships require domain knowledge, which limits their applicability in complex real-world applications. In this paper, we propose a novel approach to realistic counterfactual explanations that preserve relationships between data attributes. The model learns the relationships directly with a variational auto-encoder, without domain knowledge, and then learns to perturb the latent space accordingly. We conduct extensive experiments on both synthetic and real-world datasets. The results demonstrate that the proposed method learns relationships from the data and preserves these relationships in generated counterfactuals.

Learning to Evolve on Dynamic Graphs

Nov 13, 2021

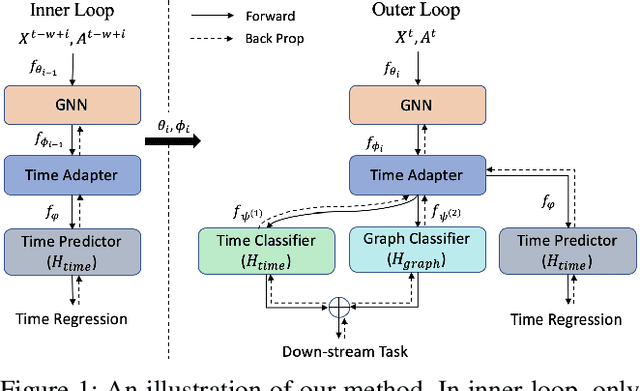

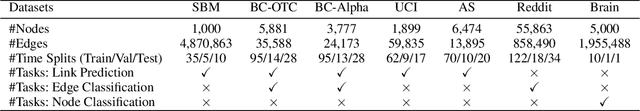

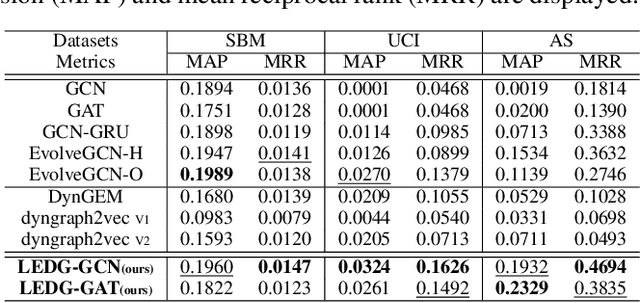

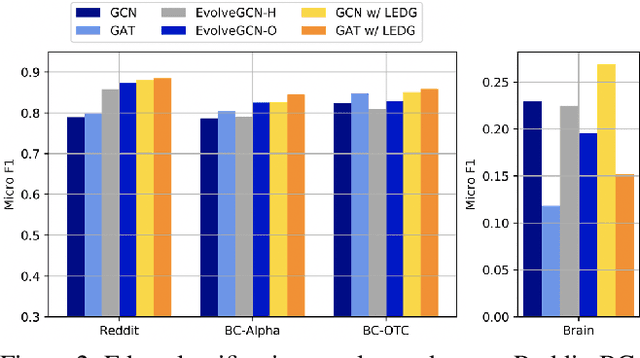

Abstract: Representation learning on dynamic graphs is a challenging problem because both the graph topology and the node features vary over time. This requires the model to effectively capture both graph topology information and temporal information. Most existing works are built on recurrent neural networks (RNNs), which are used to extract the temporal information of dynamic graphs, and thus they inherit the drawbacks of RNNs. In this paper, we propose Learning to Evolve on Dynamic Graphs (LEDG), a novel algorithm that jointly learns graph information and time information. Specifically, our approach utilizes gradient-based meta-learning to learn updating strategies that generalize better than RNNs on snapshots. It is model-agnostic and thus can train any message-passing-based graph neural network (GNN) on dynamic graphs. To enhance the representation power, we disentangle the embeddings into time embeddings and graph intrinsic embeddings. We conduct experiments on various datasets and downstream tasks, and the experimental results validate the effectiveness of our method.
