Realistic Counterfactual Explanations by Learned Relations

Feb 15, 2022

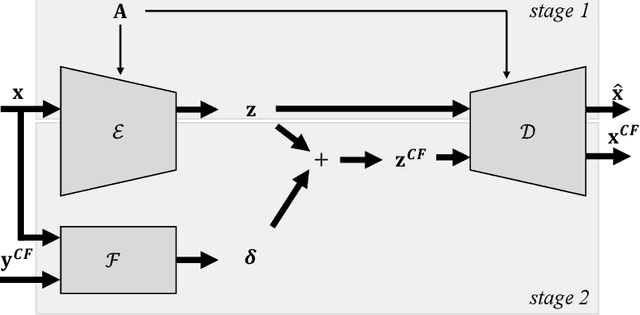

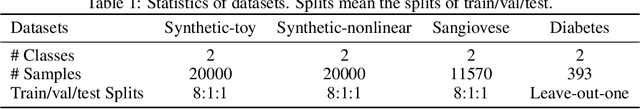

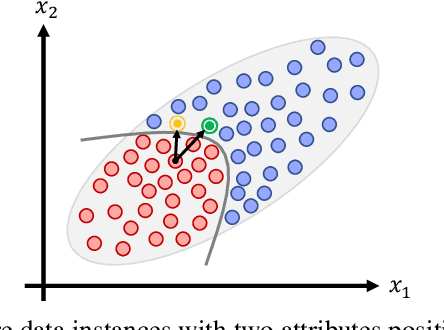

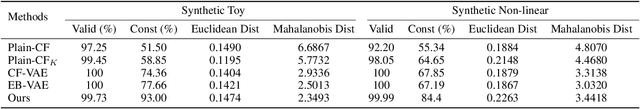

Many existing counterfactual explanation methods ignore the intrinsic relationships between data attributes and thus fail to generate realistic counterfactuals. Moreover, the existing methods that do account for these relationships require domain knowledge, which limits their applicability in complex real-world applications. In this paper, we propose a novel approach to generating realistic counterfactual explanations that preserve relationships between data attributes. The model learns the relationships directly from the data with a variational auto-encoder, without domain knowledge, and then learns to perturb the latent space accordingly. We conduct extensive experiments on both synthetic and real-world datasets. The results demonstrate that the proposed method learns relationships from the data and preserves them in the generated counterfactuals.
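
To make the idea of searching for counterfactuals in a learned latent space concrete, here is a minimal sketch under strong simplifying assumptions. It is not the paper's model: a one-dimensional linear encoder/decoder fitted by least squares stands in for the variational auto-encoder, and the classifier weights `W` and `B`, the synthetic relationship `x2 ≈ 2 * x1`, and all function names are hypothetical choices made for illustration only.

```python
import math
import random

random.seed(0)

# Synthetic data with a hidden relationship between attributes: x2 ≈ 2 * x1.
data = []
for _ in range(200):
    x1 = random.uniform(-1.0, 1.0)
    data.append((x1, 2.0 * x1 + random.gauss(0.0, 0.01)))

# Stand-in for the VAE (assumption): learn the relationship as a least-squares
# slope, giving a 1-D linear latent space along the direction (1, slope).
slope = sum(a * b for a, b in data) / sum(a * a for a, _ in data)

def encode(x):
    # Project x onto the learned direction (1, slope).
    return (x[0] + slope * x[1]) / (1.0 + slope * slope)

def decode(z):
    # Every decoded point satisfies the learned relationship by construction.
    return (z, slope * z)

# Hypothetical fixed logistic classifier to be explained.
W, B = (1.0, 1.0), -1.5

def predict(x):
    return 1.0 / (1.0 + math.exp(-(W[0] * x[0] + W[1] * x[1] + B)))

def counterfactual(x, target=1.0, lam=0.1, lr=0.1, steps=500):
    # Gradient descent on the latent code z, minimizing
    # (predict(decode(z)) - target)^2 + lam * (z - z0)^2.
    z0 = encode(x)
    z = z0
    for _ in range(steps):
        p = predict(decode(z))
        dp_dz = p * (1.0 - p) * (W[0] + W[1] * slope)
        grad = 2.0 * (p - target) * dp_dz + 2.0 * lam * (z - z0)
        z -= lr * grad
    return decode(z)

x = (0.2, 0.4)               # original instance, classified below 0.5
x_cf = counterfactual(x)     # counterfactual found by perturbing z only
print("original:", x, "score:", predict(x))
print("counterfactual:", x_cf, "score:", predict(x_cf))
```

Because the search moves only the latent code and every candidate is passed through `decode`, the counterfactual stays on the learned relationship between the two attributes. Perturbing the inputs directly along the classifier gradient would offer no such guarantee.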
