Xinrui Zeng

Deep Learning Based Multi-Node ISAC 4D Environmental Reconstruction with Uplink-Downlink Cooperation

Apr 23, 2024

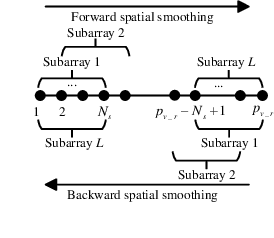

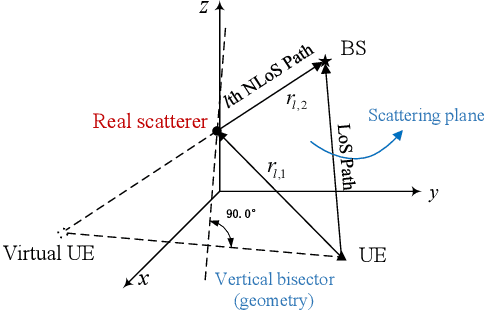

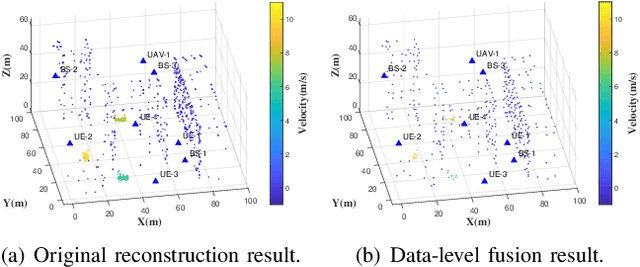

Abstract: Using widely distributed communication nodes for environmental reconstruction is an important scenario for Integrated Sensing and Communication (ISAC) and a crucial technology for 6G. To this end, we propose a deep learning based multi-node ISAC 4D environment reconstruction method with Uplink-Downlink (UL-DL) cooperation. The method employs virtual aperture technology, Constant False Alarm Rate (CFAR) detection, and the Multiple Signal Classification (MUSIC) algorithm to maximize the sensing capability of each individual node. It also introduces a cooperative reconstruction scheme that combines multi-node cooperation with UL-DL cooperation to overcome the limitations of single-node sensing caused by occlusion and limited viewpoints. Furthermore, we propose two deep learning models, the Attention Gate Gridding Residual Neural Network (AGGRNN) and the Multi-View Sensing Fusion Network (MVSFNet), to densify the sparse reconstructed point clouds, aiming to restore as much of the original environmental detail as possible while preserving the spatial structure of the point cloud. Additionally, we propose a multi-level fusion strategy incorporating both data-level and feature-level fusion to fully leverage the advantages of multi-node cooperation. Experimental results demonstrate that the proposed method significantly outperforms the comparison methods in environmental reconstruction, enabling high-precision environmental reconstruction with an ISAC system.
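The abstract names CFAR detection as one of the single-node sensing steps but does not say which CFAR variant the authors use. As a rough illustration only, the sketch below shows a one-dimensional cell-averaging CFAR over a power profile. The function name `ca_cfar_1d`, the window sizes, and the false-alarm probability are assumptions made for this example, not values from the paper.

```python
import random


def ca_cfar_1d(power, num_train=8, num_guard=2, pfa=1e-3):
    """Cell-averaging CFAR over a 1D power profile (illustrative sketch).

    num_train: training cells on EACH side of the cell under test (assumed value).
    num_guard: guard cells on EACH side of the cell under test (assumed value).
    pfa: desired false-alarm probability (assumed value).
    Returns a list of booleans, True where a detection is declared.
    """
    n = len(power)
    num_cells = 2 * num_train
    # Threshold multiplier for exponentially distributed noise power
    # (square-law detector), the standard CA-CFAR result.
    alpha = num_cells * (pfa ** (-1.0 / num_cells) - 1.0)
    half = num_train + num_guard
    detections = [False] * n
    # Cells too close to the edges lack a full window and are left undetected.
    for i in range(half, n - half):
        lead = power[i - half : i - num_guard]         # training cells before
        lag = power[i + num_guard + 1 : i + half + 1]  # training cells after
        noise = (sum(lead) + sum(lag)) / num_cells     # local noise estimate
        detections[i] = power[i] > alpha * noise
    return detections


# Synthetic example: exponential noise with one strong target at index 100.
random.seed(0)
profile = [random.expovariate(1.0) for _ in range(200)]
profile[100] += 200.0
mask = ca_cfar_1d(profile)
```

Because the threshold scales with the locally estimated noise level, the false-alarm rate stays roughly constant as the noise floor varies. In a pipeline like the one the abstract describes, the detected cells would presumably be passed on to angle estimation, but that hand-off is not specified in the abstract.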
