Xiaowei Xing

The Adaptive Dynamic Programming Toolbox

Dec 29, 2020

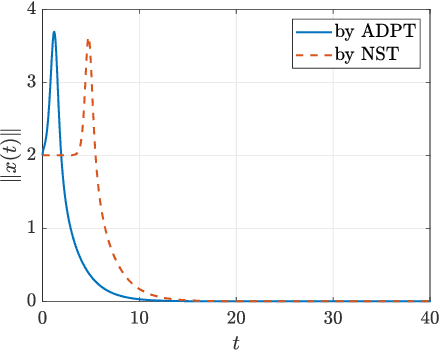

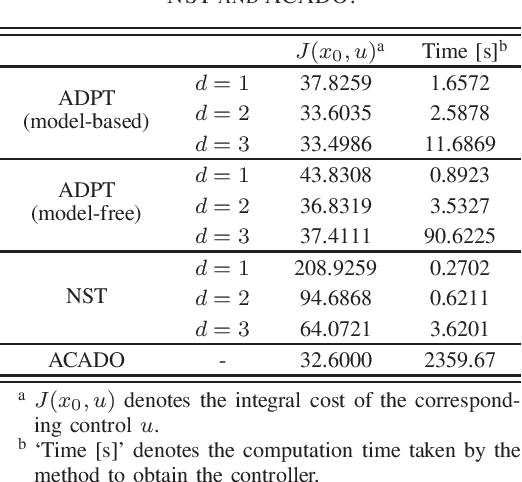

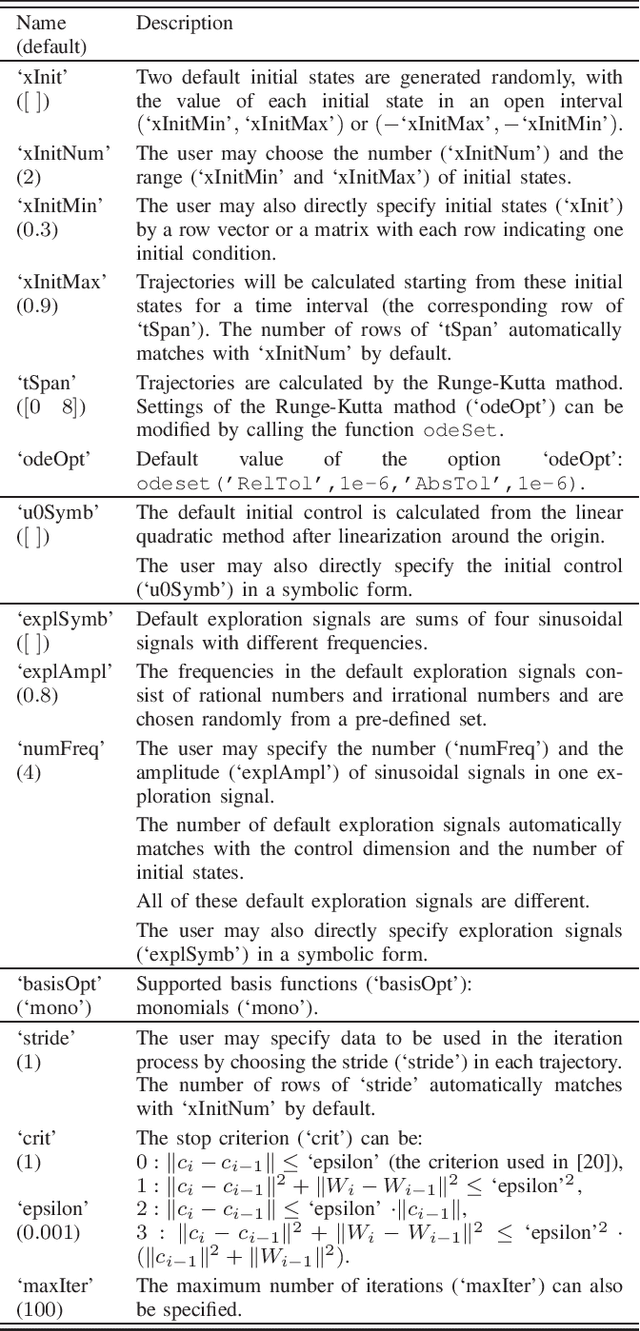

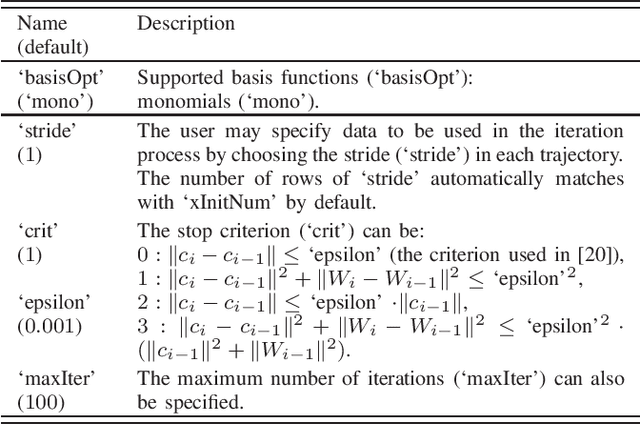

Abstract:The paper develops the Adaptive Dynamic Programming Toolbox (ADPT), which solves optimal control problems for continuous-time nonlinear systems. Based on the adaptive dynamic programming technique, the ADPT computes optimal feedback controls from the system dynamics in the model-based working mode, or from measurements of trajectories of the system in the model-free working mode without the requirement of knowledge of the system model. Multiple options are provided such that the ADPT can accommodate various customized circumstances. Compared to other popular software toolboxes for optimal control, the ADPT enjoys its computational precision and speed, which is illustrated with its applications to a satellite attitude control problem.

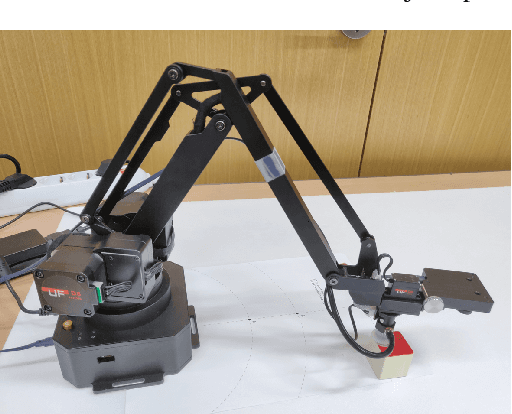

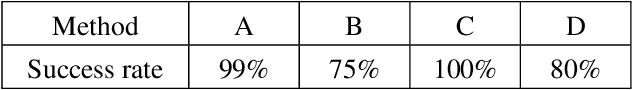

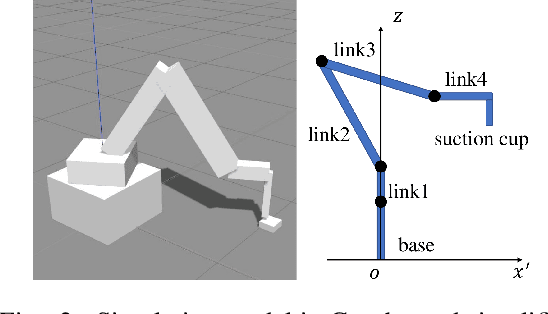

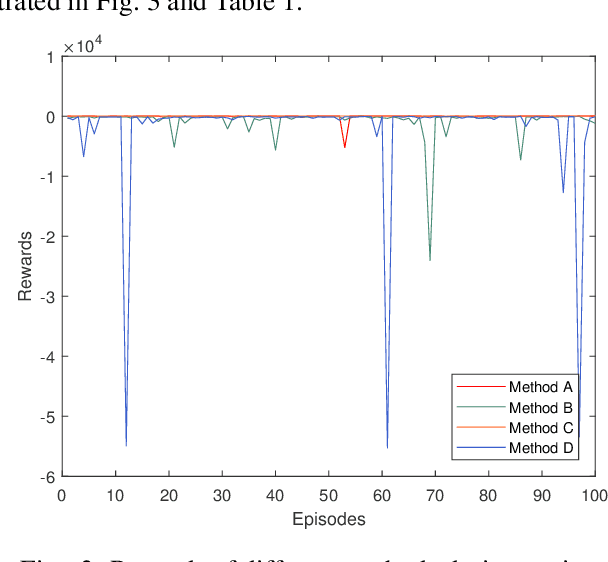

Deep Reinforcement Learning Based Robot Arm Manipulation with Efficient Training Data through Simulation

Sep 06, 2019

Abstract:Deep reinforcement learning trains neural networks using experiences sampled from the replay buffer, which is commonly updated at each time step. In this paper, we propose a method to update the replay buffer adaptively and selectively to train a robot arm to accomplish a suction task in simulation. The response time of the agent is thoroughly taken into account. The state transitions that remain stuck at the boundary of constraint are not stored. The policy trained with our method works better than the one with the common replay buffer update method. The result is demonstrated both by simulation and by experiment with a real robot arm.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge