Xiaoqing Fan

Deep Geometry Post-Processing for Decompressed Point Clouds

Apr 29, 2022

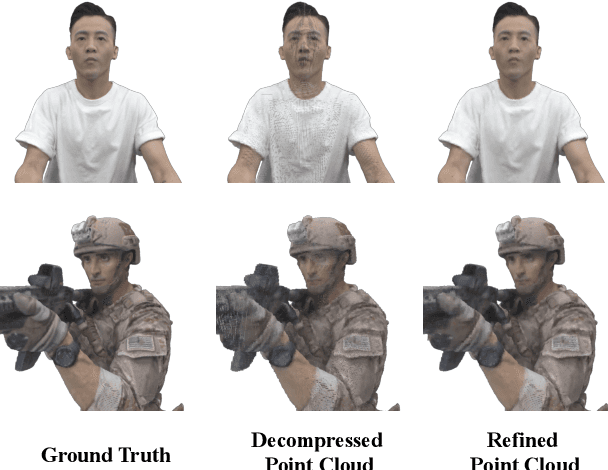

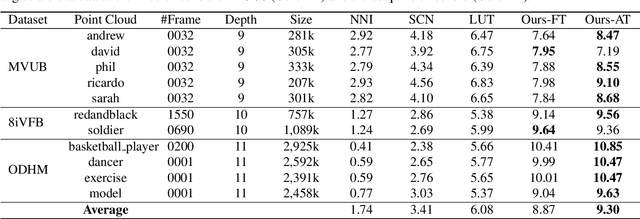

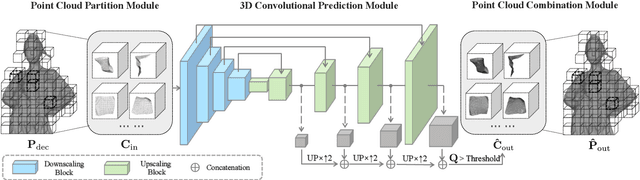

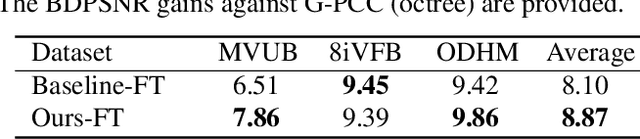

Abstract: Point cloud compression plays a crucial role in reducing the huge cost of data storage and transmission. However, quantization can introduce distortions into the decompressed point clouds. In this paper, we propose a novel learning-based post-processing method to enhance the decompressed point clouds. Specifically, a voxelized point cloud is first divided into small cubes. Then, a 3D convolutional network is proposed to predict the occupancy probability for each location of a cube. We leverage both local and global contexts by generating multi-scale probabilities. These probabilities are progressively summed to predict the results in a coarse-to-fine manner. Finally, we obtain the geometry-refined point clouds based on the predicted probabilities. Different from previous methods, we deal with decompressed point clouds with a huge variety of distortions using a single model. Experimental results show that the proposed method can significantly improve the quality of the decompressed point clouds, achieving an average BD-PSNR gain of 9.30 dB on three representative datasets.
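The data flow in this abstract (cube partitioning, coarse-to-fine summation, thresholding) can be illustrated with a minimal NumPy sketch. The 3D convolutional network itself is not reproduced here. The function names, the nearest-neighbour upsampling, the sigmoid, and the 0.5 threshold are all illustrative assumptions, not the authors' implementation.

```python
import numpy as np

def split_into_cubes(points, cube_size):
    """Group integer voxel coordinates into dense occupancy grids, one per cube."""
    points = np.asarray(points, dtype=np.int64)
    cube_ids = (points // cube_size).tolist()   # which cube each point falls in
    local = (points % cube_size).tolist()       # position inside that cube
    cubes = {}
    for cid, loc in zip(cube_ids, local):
        grid = cubes.setdefault(
            tuple(cid), np.zeros((cube_size,) * 3, dtype=np.float32)
        )
        grid[tuple(loc)] = 1.0
    return cubes

def upsample2(x):
    """Nearest-neighbour 2x upsampling along all three axes (assumed scheme)."""
    return x.repeat(2, axis=0).repeat(2, axis=1).repeat(2, axis=2)

def fuse_coarse_to_fine(scale_maps):
    """Progressively sum per-scale score maps, coarsest first.

    Each map is assumed to have twice the resolution of the previous one.
    """
    fused = scale_maps[0]
    for finer in scale_maps[1:]:
        fused = upsample2(fused) + finer
    return fused

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def cube_to_points(prob, cube_id, cube_size, threshold=0.5):
    """Turn a cube's occupancy probabilities back into global voxel coordinates."""
    local = np.argwhere(prob > threshold)
    return local + np.asarray(cube_id) * cube_size

# Toy example: three voxels, cubes of side 4.
cubes = split_into_cubes([[0, 0, 0], [5, 1, 2], [4, 4, 4]], cube_size=4)

# Stand-in for the network output: two scales of scores for one cube.
scores = fuse_coarse_to_fine([np.ones((2, 2, 2)), np.ones((4, 4, 4))])
refined = cube_to_points(sigmoid(scores), cube_id=(1, 0, 0), cube_size=4)
```

In a real pipeline, the stand-in score maps would come from the trained network, and the threshold would likely be chosen per cube rather than fixed.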

Neural Texture Extraction and Distribution for Controllable Person Image Synthesis

Apr 13, 2022

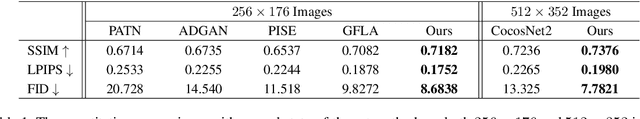

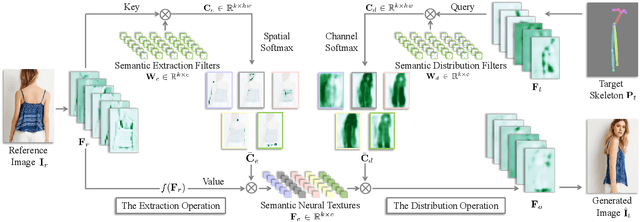

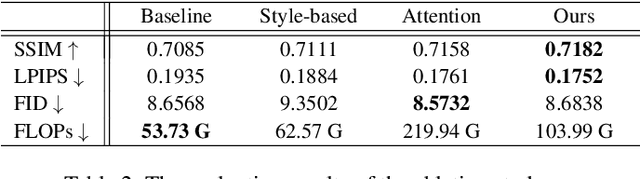

Abstract: We deal with the controllable person image synthesis task, which aims to re-render a human from a reference image with explicit control over body pose and appearance. Observing that person images are highly structured, we propose to generate desired images by extracting and distributing semantic entities of reference images. To achieve this goal, a neural texture extraction and distribution operation based on double attention is described. This operation first extracts semantic neural textures from reference feature maps. Then, it distributes the extracted neural textures according to the spatial distributions learned from target poses. Our model is trained to predict human images in arbitrary poses, which encourages it to extract disentangled and expressive neural textures representing the appearance of different semantic entities. The disentangled representation further enables explicit appearance control. Neural textures of different reference images can be fused to control the appearance of the areas of interest. Experimental comparisons show the superiority of the proposed model. Code is available at https://github.com/RenYurui/Neural-Texture-Extraction-Distribution.
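One possible reading of the extract-then-distribute operation is sketched below in NumPy. It is not the authors' implementation (see the linked repository for that). The flattened feature shapes, the shared `semantic_filters` matrix, and the function names are assumptions made for illustration.

```python
import numpy as np

def softmax(x, axis):
    """Numerically stable softmax along the given axis."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def extract_textures(ref_feat, semantic_filters):
    """First attention: pool reference features into K semantic textures.

    ref_feat:         (N, C) reference features at N spatial positions
    semantic_filters: (K, C) one hypothetical filter per semantic entity
    returns:          (K, C) one texture vector per entity
    """
    attn = softmax(semantic_filters @ ref_feat.T, axis=1)  # (K, N), rows sum to 1
    return attn @ ref_feat

def distribute_textures(textures, target_feat, semantic_filters):
    """Second attention: place textures at positions given by the target pose.

    target_feat: (M, C) pose-derived features at M target positions
    returns:     (M, C) texture mixture for every target position
    """
    attn = softmax(target_feat @ semantic_filters.T, axis=1)  # (M, K), rows sum to 1
    return attn @ textures

# Toy example: 4 reference positions, 5 target positions, 3 channels, 2 entities.
ref_feat = np.arange(12, dtype=float).reshape(4, 3)
filters = np.zeros((2, 3))            # uniform attention, for a predictable result
target_feat = np.zeros((5, 3))

textures = extract_textures(ref_feat, filters)
output = distribute_textures(textures, target_feat, filters)
```

Under this reading, fusing appearance from two reference images amounts to replacing selected rows of `textures` with rows extracted from another reference before distribution.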
