Xiaoao Song

Design of an End-effector with Application to Avocado Harvesting

Jul 01, 2024

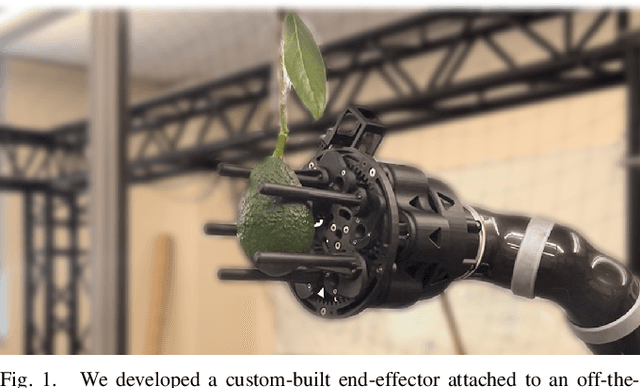

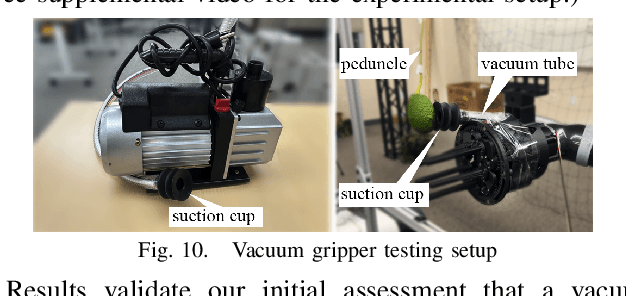

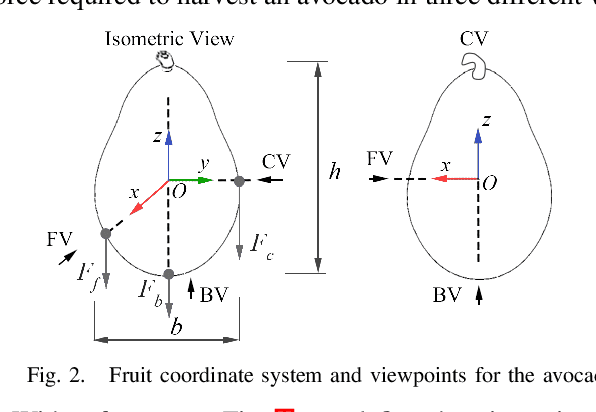

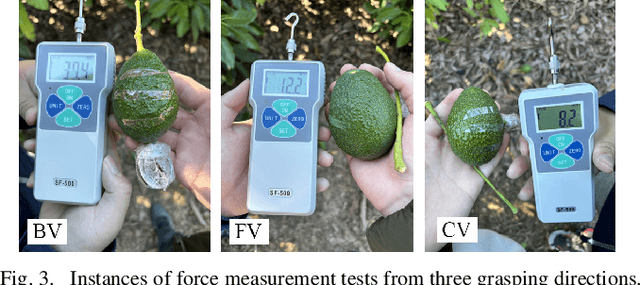

Abstract:Robot-assisted fruit harvesting has been a critical research direction supporting sustainable crop production. One important determinant of system behavior and efficiency is the end-effector that comes in direct contact with the crop during harvesting and directly affects harvesting success. Harvesting avocados poses unique challenges not addressed by existing end-effectors (namely, they have uneven surfaces and irregular shapes grow on thick peduncles, and have a sturdy calyx attached). The work reported in this paper contributes a new end-effector design suitable for avocado picking. A rigid system design with a two-stage rotational motion is developed, to first grasp the avocado and then detach it from its peduncle. A force analysis is conducted to determine key design parameters. Preliminary experiments demonstrate the efficiency of the developed end-effector to pick and apply a moment to an avocado from a specific viewpoint (as compared to pulling it directly), and in-lab experiments show that the end-effector can grasp and retrieve avocados with a 100% success rate.

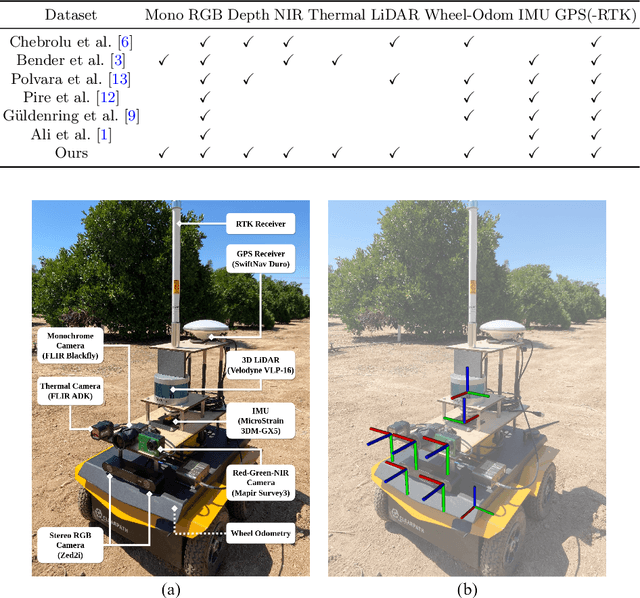

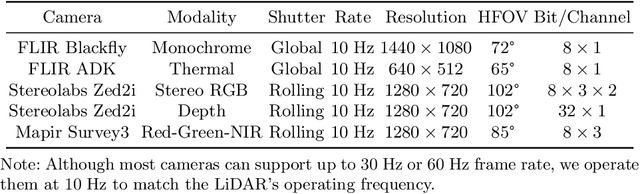

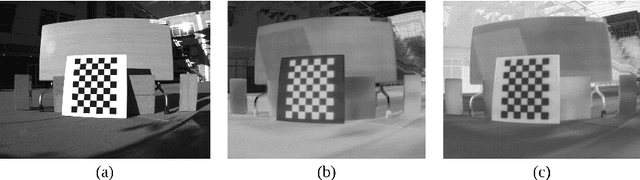

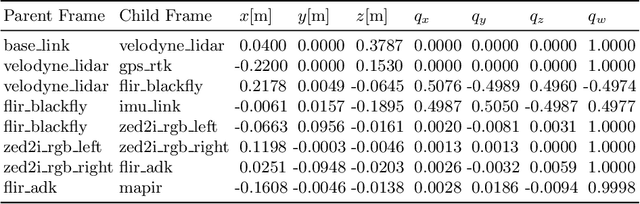

Multimodal Dataset for Localization, Mapping and Crop Monitoring in Citrus Tree Farms

Sep 29, 2023

Abstract:In this work we introduce the CitrusFarm dataset, a comprehensive multimodal sensory dataset collected by a wheeled mobile robot operating in agricultural fields. The dataset offers stereo RGB images with depth information, as well as monochrome, near-infrared and thermal images, presenting diverse spectral responses crucial for agricultural research. Furthermore, it provides a range of navigational sensor data encompassing wheel odometry, LiDAR, inertial measurement unit (IMU), and GNSS with Real-Time Kinematic (RTK) as the centimeter-level positioning ground truth. The dataset comprises seven sequences collected in three fields of citrus trees, featuring various tree species at different growth stages, distinctive planting patterns, as well as varying daylight conditions. It spans a total operation time of 1.7 hours, covers a distance of 7.5 km, and constitutes 1.3 TB of data. We anticipate that this dataset can facilitate the development of autonomous robot systems operating in agricultural tree environments, especially for localization, mapping and crop monitoring tasks. Moreover, the rich sensing modalities offered in this dataset can also support research in a range of robotics and computer vision tasks, such as place recognition, scene understanding, object detection and segmentation, and multimodal learning. The dataset, in conjunction with related tools and resources, is made publicly available at https://github.com/UCR-Robotics/Citrus-Farm-Dataset.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge