Xiao-Lin Wang

Causally-Aware Spatio-Temporal Multi-Graph Convolution Network for Accurate and Reliable Traffic Prediction

Aug 23, 2024

Abstract: Accurate and reliable prediction has profound implications for a wide range of applications. In this study, we focus on one instance of the spatio-temporal learning problem, traffic prediction, to demonstrate an advanced deep learning model developed for making accurate and reliable forecasts. Despite significant progress in traffic prediction, few studies have incorporated both explicit and implicit traffic patterns simultaneously to improve prediction performance. Meanwhile, the inherent variability of traffic states necessitates quantifying the uncertainty of model predictions in a statistically principled way; however, extant studies offer no provable guarantee that their confidence intervals are statistically valid, that is, that the intervals reflect the actual likelihood of containing the ground truth. In this paper, we propose an end-to-end traffic prediction framework that leverages three primary components to generate accurate and reliable traffic predictions: dynamic causal structure learning for discovering implicit traffic patterns from massive traffic data, a causally-aware spatio-temporal multi-graph convolution network (CASTMGCN) for learning spatio-temporal dependencies, and conformal prediction for uncertainty quantification. CASTMGCN fuses several graphs that characterize different important aspects of traffic networks with an auxiliary graph that captures the effect of exogenous factors on the road network. On this basis, a conformal prediction approach tailored to spatio-temporal data is further developed for quantifying the uncertainty in node-wise traffic predictions over varying prediction horizons. Experimental results on two real-world traffic datasets demonstrate that the proposed method outperforms several state-of-the-art models in prediction accuracy; moreover, it generates more efficient prediction regions than other methods while strictly satisfying statistical validity in coverage.
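To make the uncertainty-quantification component concrete, here is a minimal sketch of generic split conformal prediction, with one interval radius per node and per horizon. It is not the spatio-temporal variant developed in the paper, whose details the abstract does not give. The function names, the absolute-residual score, and the array layout `(samples, nodes, horizons)` are all assumptions made for illustration.

```python
import numpy as np


def split_conformal_radius(cal_pred, cal_true, alpha=0.1):
    """Per-node, per-horizon interval radius from a held-out calibration set.

    Hypothetical helper: arrays are assumed to have shape
    (n_calibration_samples, ...), e.g. (n, nodes, horizons).
    """
    # Nonconformity score: absolute residual (an assumed, common choice).
    scores = np.abs(np.asarray(cal_true, dtype=float) - np.asarray(cal_pred, dtype=float))
    n = scores.shape[0]
    # Finite-sample corrected rank: ceil((n + 1) * (1 - alpha)).
    k = int(np.ceil((n + 1) * (1 - alpha)))
    if k > n:
        # Too few calibration samples for this alpha: only an infinite
        # interval can carry the coverage guarantee.
        return np.full(scores.shape[1:], np.inf)
    # k-th smallest score along the calibration axis.
    return np.sort(scores, axis=0)[k - 1]


def conformal_interval(test_pred, radius):
    """Symmetric prediction interval around the point forecasts."""
    test_pred = np.asarray(test_pred, dtype=float)
    return test_pred - radius, test_pred + radius
```

Under exchangeability of calibration and test samples, intervals built this way cover the ground truth with probability at least `1 - alpha`. Traffic series generally violate exchangeability, which is presumably why the paper tailors the procedure to spatio-temporal data.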

Enhancing the Performance of Neural Networks Through Causal Discovery and Integration of Domain Knowledge

Dec 01, 2023

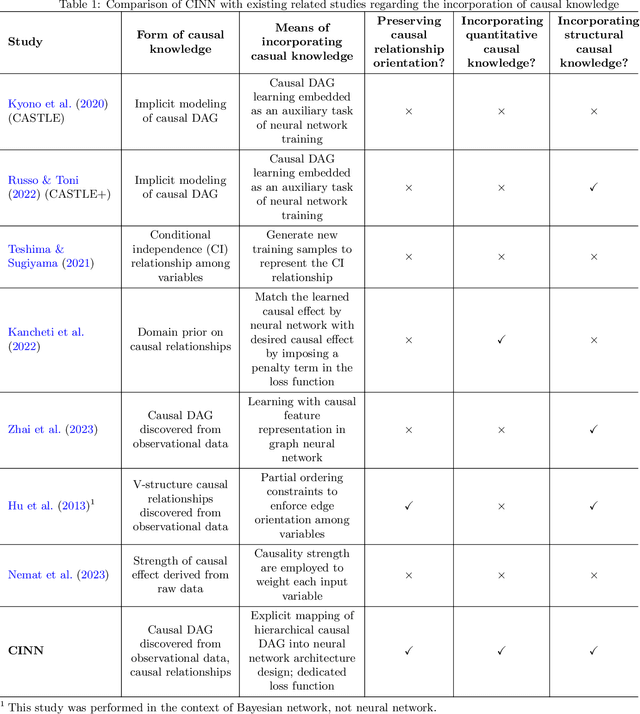

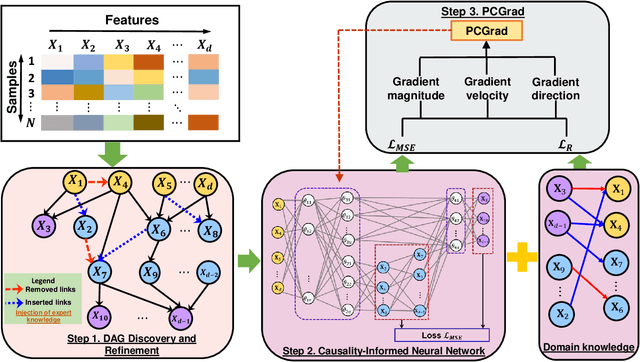

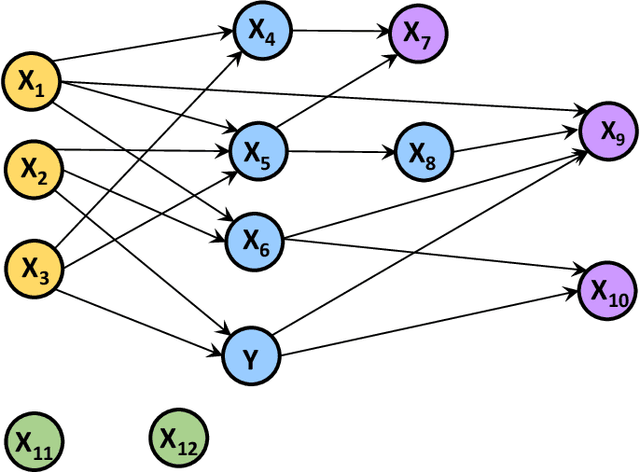

Abstract: In this paper, we develop a generic methodology to encode the hierarchical causal structure among observed variables into a neural network in order to improve its predictive performance. The proposed methodology, called causality-informed neural network (CINN), leverages three coherent steps to systematically map structural causal knowledge into the layer-to-layer design of a neural network while strictly preserving the orientation of every causal relationship. In the first step, CINN discovers causal relationships from observational data via directed acyclic graph (DAG) learning, where causal discovery is recast as a continuous optimization problem to avoid a combinatorial search over graph structures. In the second step, the discovered hierarchical causal structure among observed variables is systematically encoded into the neural network through a dedicated architecture and a customized loss function. By categorizing variables in the causal DAG as root, intermediate, and leaf nodes, the hierarchical causal DAG is translated into CINN with a one-to-one correspondence between nodes in the causal DAG and units in the CINN while maintaining the relative order among these nodes. Regarding the loss function, both intermediate and leaf nodes in the DAG are treated as target outputs during CINN training so as to drive co-learning of causal relationships among different types of nodes. As multiple loss components emerge in CINN, we leverage the projection of conflicting gradients to mitigate gradient interference among the multiple learning tasks. Computational experiments across a broad spectrum of UCI data sets demonstrate substantial advantages of CINN in predictive performance over other state-of-the-art methods. In addition, an ablation study underscores the value of integrating structural and quantitative causal knowledge in incrementally enhancing the neural network's predictive performance.
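The gradient-interference step mentioned in the abstract can be illustrated with a short sketch of projecting conflicting gradients, in the style usually called PCGrad. This is an assumed, simplified reading and not the paper's implementation: gradients are flattened NumPy vectors, one per loss component, and the names `project_conflicting` and `pcgrad_combine` are hypothetical.

```python
import numpy as np


def project_conflicting(g_i, g_j):
    """Remove from g_i its component along g_j when the two conflict."""
    dot = float(np.dot(g_i, g_j))
    if dot < 0.0:
        # Negative inner product: project g_i onto the normal plane of g_j.
        return g_i - (dot / float(np.dot(g_j, g_j))) * g_j
    return g_i.copy()


def pcgrad_combine(grads, rng=None):
    """Combine per-task gradients after pairwise conflict projection.

    Each task gradient is projected against the other tasks' original
    gradients in random order; the projected gradients are then summed.
    """
    rng = np.random.default_rng() if rng is None else rng
    grads = [np.asarray(g, dtype=float) for g in grads]
    projected = []
    for i, g in enumerate(grads):
        g_new = g.copy()
        others = [j for j in range(len(grads)) if j != i]
        for j in rng.permutation(others):
            g_new = project_conflicting(g_new, grads[j])
        projected.append(g_new)
    return np.sum(projected, axis=0)
```

With two task gradients that point in partly opposite directions, each is stripped of the component that would undo progress on the other task before the update is formed. Gradients that do not conflict pass through unchanged and are simply summed.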
