Xianhao Wu

SmokeBench: A Real-World Dataset for Surveillance Image Desmoking in Early-Stage Fire Scenes

Sep 16, 2025

Abstract:Early-stage fire scenes (0-15 minutes after ignition) represent a crucial temporal window for emergency interventions. During this stage, the smoke produced by combustion significantly reduces the visibility of surveillance systems, severely impairing situational awareness and hindering effective emergency response and rescue operations. Consequently, there is an urgent need to remove smoke from images to obtain clear scene information. However, the development of smoke removal algorithms remains limited by the lack of large-scale, real-world datasets comprising paired smoke-free and smoke-degraded images. To address this limitation, we present a real-world surveillance image desmoking benchmark dataset named SmokeBench, which contains image pairs captured under diverse scene setups and smoke concentrations. The curated dataset provides precisely aligned degraded and clean images, enabling supervised learning and rigorous evaluation. We conduct comprehensive experiments by benchmarking a variety of desmoking methods on our dataset. Our dataset provides a valuable foundation for advancing robust and practical image desmoking in real-world fire scenes. This dataset has been released to the public and can be downloaded from https://github.com/ncfjd/SmokeBench.

Dual-Path Multi-Scale Transformer for High-Quality Image Deraining

May 28, 2024

Abstract:Despite the superiority of convolutional neural networks (CNNs) and Transformers in single-image rain removal, current multi-scale models still face significant challenges due to their reliance on single-scale feature pyramid patterns. In this paper, we propose an effective rain removal method, the dual-path multi-scale Transformer (DPMformer), which leverages rich multi-scale information for high-quality image reconstruction. The method consists of a backbone path and two branch paths, each built on a different multi-scale approach. Specifically, one branch adopts a coarse-to-fine strategy, progressively downsampling the image to 1/2 and 1/4 scales, which helps capture and fuse potential rain information across scales. In the other branch, we employ a multi-patch stacked model (non-overlapping blocks of size 2 and 4) to enrich the feature information of the deep network. To learn a richer blend of features, the backbone path fully utilizes this multi-scale information to achieve high-quality rain removal image reconstruction. Extensive experiments on benchmark datasets demonstrate that our method achieves promising performance compared to other state-of-the-art methods.
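As a rough illustration of the two multi-scale inputs the abstract describes, the following NumPy sketch builds a 1/2 and 1/4 scale pyramid and splits an image into non-overlapping patches. It is not the DPMformer implementation: the function names are hypothetical, average pooling is an assumed choice of downsampling, and reading "blocks of size 2 and 4" as a 2x2 and a 4x4 patch grid is an assumption, since the abstract does not specify the layout.

```python
import numpy as np


def downsample(img: np.ndarray, factor: int) -> np.ndarray:
    """Average-pool an (H, W, C) image by an integer factor (assumed operator)."""
    h, w, c = img.shape
    if h % factor or w % factor:
        raise ValueError("image size must be divisible by the factor")
    blocks = img.reshape(h // factor, factor, w // factor, factor, c)
    return blocks.mean(axis=(1, 3))


def coarse_to_fine_pyramid(img: np.ndarray) -> list:
    """Return the image at full, 1/2, and 1/4 scale."""
    return [img, downsample(img, 2), downsample(img, 4)]


def split_patches(img: np.ndarray, n: int) -> np.ndarray:
    """Split an (H, W, C) image into an n x n grid of non-overlapping patches.

    The result has shape (n * n, H // n, W // n, C), in row-major grid order.
    """
    h, w, c = img.shape
    if h % n or w % n:
        raise ValueError("image size must be divisible by the grid size")
    ph, pw = h // n, w // n
    grid = img.reshape(n, ph, n, pw, c).transpose(0, 2, 1, 3, 4)
    return grid.reshape(n * n, ph, pw, c)


if __name__ == "__main__":
    image = np.random.rand(64, 64, 3)  # stand-in for a rainy input image
    for level in coarse_to_fine_pyramid(image):
        print("pyramid level:", level.shape)
    for n in (2, 4):
        print(f"{n}x{n} grid:", split_patches(image, n).shape)
```

In a network, each pyramid level or patch set would feed its own branch before the features are fused in the backbone path; that fusion step is not sketched here.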

RSDehamba: Lightweight Vision Mamba for Remote Sensing Satellite Image Dehazing

May 16, 2024

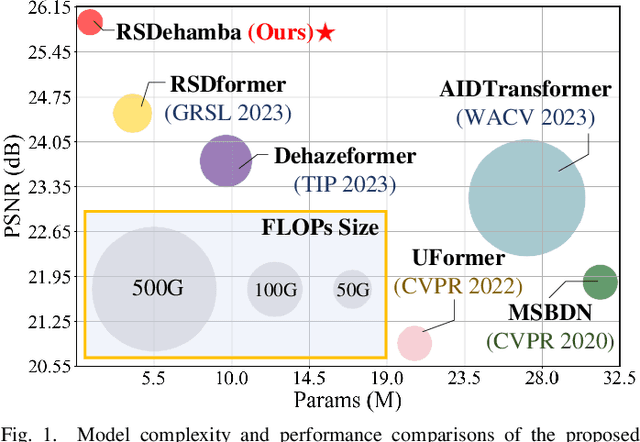

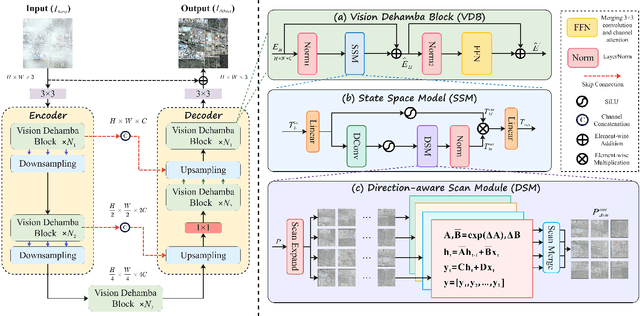

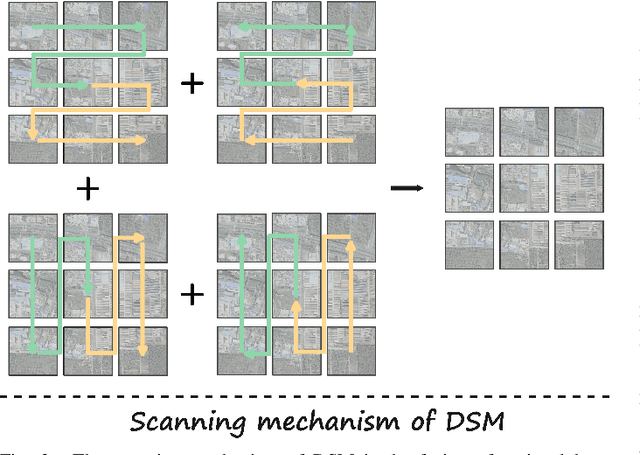

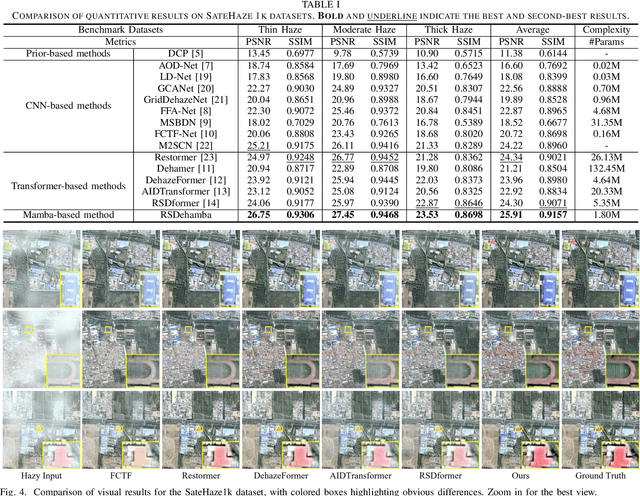

Abstract:Remote sensing image dehazing (RSID) aims to remove nonuniform and physically irregular haze factors for high-quality image restoration. CNNs and Transformers have driven extraordinary progress in the RSID arena. However, these methods often struggle to balance adequate long-range dependency modeling with computational efficiency. To this end, we propose RSDehamba, the first lightweight Mamba-based network in the field of RSID. Greatly inspired by the recent rise of the Selective State Space Model (SSM) and its superior performance in modeling long-range dependencies with linear complexity, our RSDehamba integrates the SSM framework into the U-Net architecture. Specifically, we propose the Vision Dehamba Block (VDB) as the core component of the overall network, which utilizes the linear complexity of SSM to achieve global context encoding. Simultaneously, the Direction-aware Scan Module (DSM) is designed to dynamically aggregate feature exchanges over different directional domains, enhancing the flexibility of sensing the spatially varying distribution of haze. In this way, our RSDehamba fully exploits long-range spatial dependency capture and channel information exchange for better extraction of haze features. Extensive experimental results on widely used benchmarks validate the superior performance of our RSDehamba against existing state-of-the-art methods.
