Xi Niu

Automated CVE Analysis for Threat Prioritization and Impact Prediction

Sep 06, 2023

Abstract:Common Vulnerabilities and Exposures (CVE) entries are pivotal information for proactive cybersecurity measures, including service patching, security hardening, and more. However, CVEs typically offer low-level, product-oriented descriptions of publicly disclosed cybersecurity vulnerabilities, often lacking the essential attack semantic information required for comprehensive weakness characterization and threat impact estimation. This information is essential for CVE prioritization and the identification of potential countermeasures, particularly when dealing with a large number of CVEs. Current industry practices involve manual evaluation of CVEs to assess their attack severities using the Common Vulnerability Scoring System (CVSS) and mapping them to the Common Weakness Enumeration (CWE) for potential mitigation identification. Unfortunately, this manual analysis presents a major bottleneck in the vulnerability analysis process, leading to slowdowns in proactive cybersecurity efforts and the potential for inaccuracies due to human error. In this research, we introduce our novel predictive model and tool (called CVEDrill), which revolutionizes CVE analysis and threat prioritization. CVEDrill accurately estimates the CVSS vector for precise threat mitigation and priority ranking and seamlessly automates the classification of CVEs into the appropriate CWE hierarchy classes. By harnessing CVEDrill, organizations can now implement cybersecurity countermeasures with unparalleled accuracy and timeliness, surpassing in this domain the capabilities of state-of-the-art tools like ChatGPT.

Language Model for Text Analytic in Cybersecurity

Apr 06, 2022

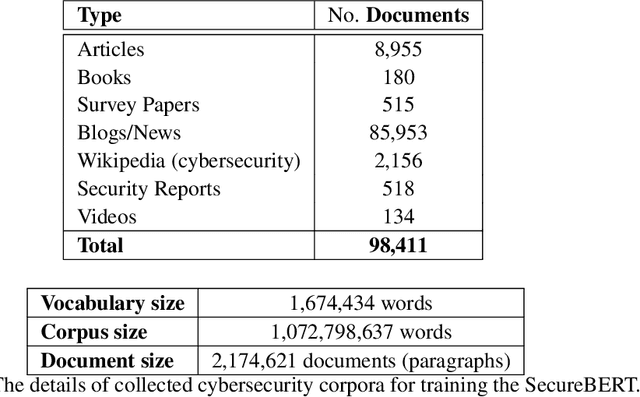

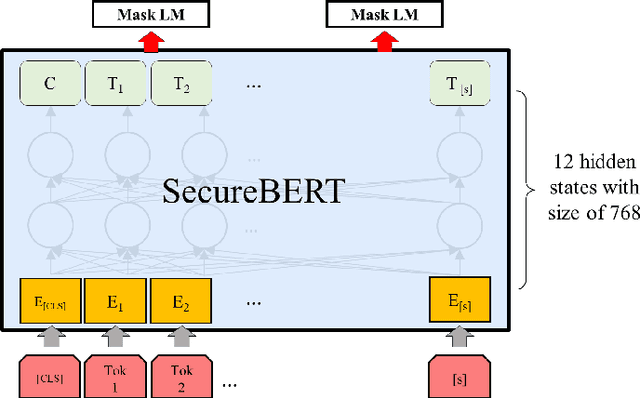

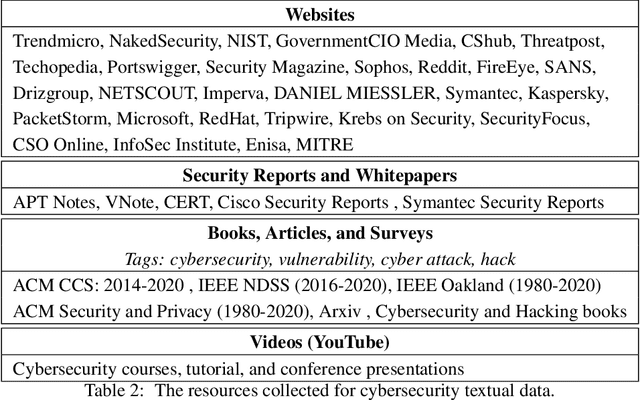

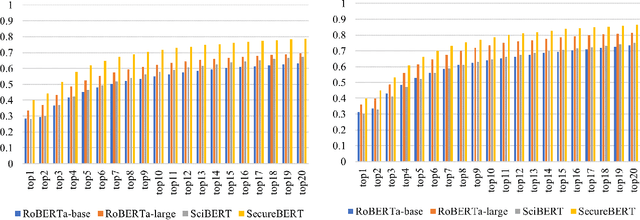

Abstract:NLP is a form of artificial intelligence and machine learning concerned with a computer or machine's ability to understand and interpret human language. Language models are crucial in text analytics and NLP since they allow computers to interpret qualitative input and convert it to quantitative data that they can use in other tasks. In the context of transfer learning, language models are typically trained on a large generic corpus, referred to as the pre-training stage, and then fine-tuned to a specific underlying task. As a result, pre-trained language models are mostly used as a baseline model that incorporates a broad grasp of the context and may be further customized for use in a new NLP task. The majority of pre-trained models are trained on corpora from general domains, such as Twitter, newswire, Wikipedia, and the Web. Such off-the-shelf NLP models trained on general text may be inefficient and inaccurate in specialized fields. In this paper, we propose a cybersecurity language model called SecureBERT, which is able to capture the text connotations in the cybersecurity domain, and therefore could further be used in automation for many important cybersecurity tasks that would otherwise rely on human expertise and tedious manual efforts. SecureBERT is trained on a large corpus of cybersecurity text collected and preprocessed by us from a variety of sources in cybersecurity and the general computing domain. Using our proposed methods for tokenization and model weights adjustment, SecureBERT is not only able to preserve the understanding of general English, as most pre-trained language models do, but is also effective when applied to text that has cybersecurity implications.

Modeling the Uncertainty in Electronic Health Records: a Bayesian Deep Learning Approach

Jul 14, 2019

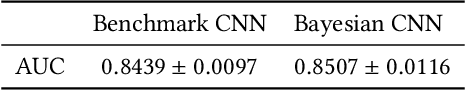

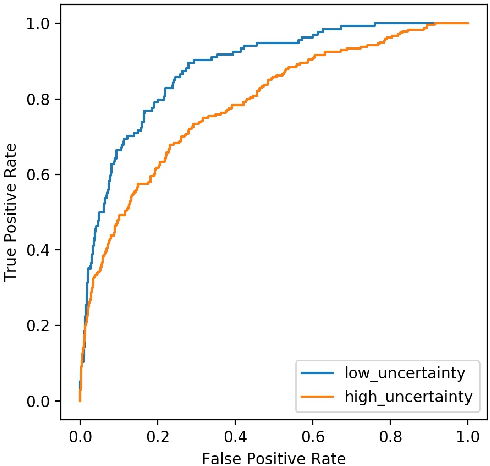

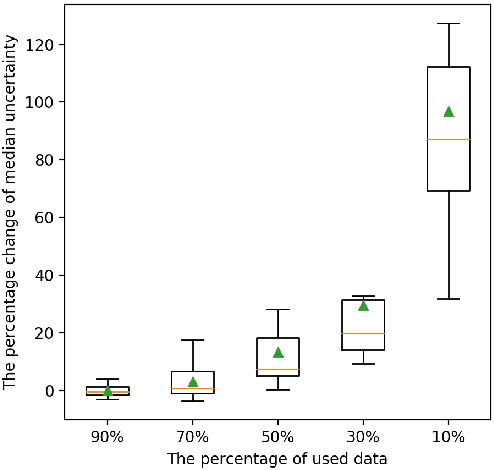

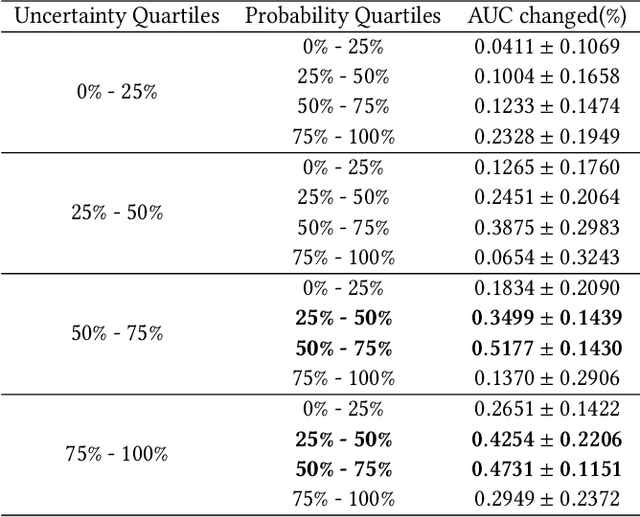

Abstract:Deep learning models have exhibited superior performance in predictive tasks on the rapidly growing volume of Electronic Health Records (EHR). However, due to the lack of transparency, the behaviors of deep learning models are difficult to interpret. Without trustworthiness, deep learning models will not be able to assist in the real-world decision-making process of healthcare issues. We propose a deep learning model based on Bayesian Neural Networks (BNN) to predict uncertainty induced by data noise. The uncertainty is introduced to provide model predictions with an extra level of confidence. Our experiments verify that instances with high uncertainty are harmful to model performance. Moreover, by investigating the distributions of model prediction and uncertainty, we show that it is possible to identify a group of patients for timely intervention, for whom decreasing data noise will yield a greater benefit in prediction accuracy.
