Weronika Hryniewska-Guzik

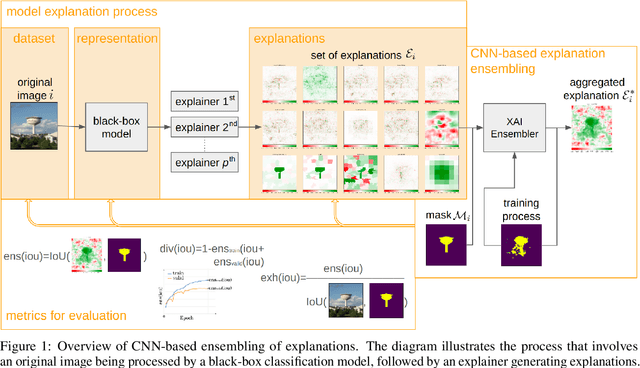

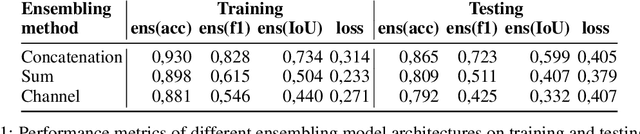

CNN-based explanation ensembling for dataset, representation and explanations evaluation

Apr 16, 2024

Abstract: Explainable Artificial Intelligence has gained significant attention due to the widespread use of complex deep learning models in high-stakes domains such as medicine, finance, and autonomous cars. However, different explanations often present different aspects of the model's behavior. In this research manuscript, we explore the potential of ensembling explanations generated by deep classification models using a convolutional model. Through experimentation and analysis, we aim to investigate the implications of combining explanations to uncover more coherent and reliable patterns of the model's behavior, which makes it possible to evaluate the representation learned by the model. With our method, we can uncover problems of under-representation of images in a certain class. Moreover, we discuss other side benefits, such as feature reduction: replacing the original image with its explanations removes some sensitive information. Through the use of carefully selected evaluation metrics from the Quantus library, we demonstrated the method's superior performance in terms of Localisation and Faithfulness, compared to individual explanations.

A comparative analysis of deep learning models for lung segmentation on X-ray images

Apr 09, 2024

Abstract: Robust and highly accurate lung segmentation in X-rays is crucial in medical imaging. This study evaluates deep learning solutions for this task, ranking existing methods and analyzing their performance under diverse image modifications. Out of 61 analyzed papers, only nine offered an implementation or pre-trained models, enabling assessment of three prominent methods: Lung VAE, TransResUNet, and CE-Net. The analysis revealed that CE-Net performs best, demonstrating the highest values of the Dice similarity coefficient and the intersection over union metric.
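For readers unfamiliar with the two metrics named in this abstract, the following is a minimal sketch of how the Dice similarity coefficient and intersection over union are commonly computed for binary masks. It is not taken from the paper: the function name `dice_and_iou`, the nested-list mask format, and the convention of returning 1.0 when both masks are empty are all assumptions made for illustration.

```python
def dice_and_iou(pred, target):
    """Return (Dice, IoU) for two binary masks given as nested lists of 0/1.

    Hypothetical helper for illustration; not the evaluation code of the study.
    """
    intersection = pred_area = target_area = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            pred_area += 1 if p else 0
            target_area += 1 if t else 0
            intersection += 1 if (p and t) else 0

    union = pred_area + target_area - intersection
    if union == 0:
        # Assumed convention: two empty masks count as a perfect match.
        return 1.0, 1.0

    dice = 2 * intersection / (pred_area + target_area)
    iou = intersection / union
    return dice, iou


# Toy 2x3 masks: 2 overlapping pixels, 3 predicted, 3 in the reference.
predicted = [[1, 1, 0],
             [0, 1, 0]]
reference = [[1, 0, 0],
             [0, 1, 1]]
dice, iou = dice_and_iou(predicted, reference)
print(f"Dice = {dice:.3f}, IoU = {iou:.3f}")
```

Both metrics reward overlap between the predicted and reference lung regions; Dice weights the intersection twice, so for the same pair of masks it is never lower than IoU.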

NormEnsembleXAI: Unveiling the Strengths and Weaknesses of XAI Ensemble Techniques

Jan 30, 2024

Abstract: This paper presents a comprehensive comparative analysis of explainable artificial intelligence (XAI) ensembling methods. Our research brings three significant contributions. Firstly, we introduce a novel ensembling method, NormEnsembleXAI, that leverages minimum, maximum, and average functions in conjunction with normalization techniques to enhance interpretability. Secondly, we offer insights into the strengths and weaknesses of XAI ensemble methods. Lastly, we provide a library, facilitating the practical implementation of XAI ensembling, thus promoting the adoption of transparent and interpretable deep learning models.
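The general idea of combining normalized attribution maps with a minimum, maximum, or average function can be sketched as follows. This is only an illustration under stated assumptions and not the API of the authors' library: the names `min_max_normalize` and `ensemble_explanations` are hypothetical, and min-max scaling is just one possible normalization, chosen here because the abstract does not specify which ones are used.

```python
def min_max_normalize(attribution):
    """Scale a 2D attribution map (nested lists) to the range [0, 1].

    Assumed normalization; the abstract only mentions "normalization techniques".
    """
    values = [v for row in attribution for v in row]
    low, high = min(values), max(values)
    if high == low:
        # A constant map carries no ranking information; map it to zeros.
        return [[0.0 for _ in row] for row in attribution]
    return [[(v - low) / (high - low) for v in row] for row in attribution]


# Pixel-wise aggregation functions named in the abstract.
AGGREGATORS = {
    "min": min,
    "max": max,
    "avg": lambda values: sum(values) / len(values),
}


def ensemble_explanations(attribution_maps, aggregate="avg"):
    """Normalize each map, then aggregate the maps pixel by pixel."""
    aggregator = AGGREGATORS[aggregate]
    normalized = [min_max_normalize(m) for m in attribution_maps]
    rows, cols = len(normalized[0]), len(normalized[0][0])
    return [
        [aggregator([m[r][c] for m in normalized]) for c in range(cols)]
        for r in range(rows)
    ]


# Two toy 2x2 attribution maps on different scales.
map_a = [[0.0, 2.0], [4.0, 8.0]]
map_b = [[1.0, 1.0], [3.0, 5.0]]
print(ensemble_explanations([map_a, map_b], aggregate="avg"))
```

Normalizing before aggregating keeps a method with a large numeric range from dominating the result; taking the minimum keeps only regions every method agrees on, while the maximum keeps any region highlighted by at least one method.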

Multi-task learning for classification, segmentation, reconstruction, and detection on chest CT scans

Aug 02, 2023

Abstract: Lung cancer and COVID-19 have some of the highest morbidity and mortality rates in the world. For physicians, identifying lesions in the early stages of the disease is difficult and time-consuming. Multi-task learning is therefore a suitable approach to extracting important features, such as lesions, from small amounts of medical data, because it learns to generalize better. We propose a novel multi-task framework for classification, segmentation, reconstruction, and detection. To the best of our knowledge, we are the first to add detection to the multi-task solution. Additionally, we checked the possibility of using two different backbones and different loss functions in the segmentation task.

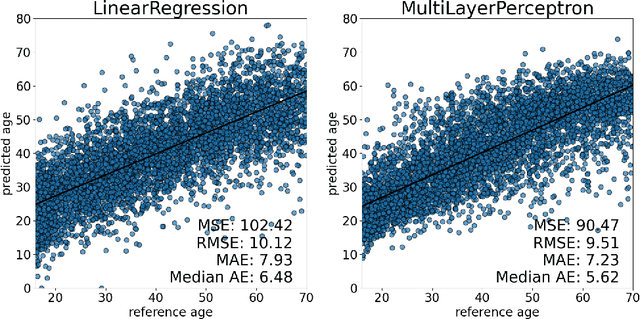

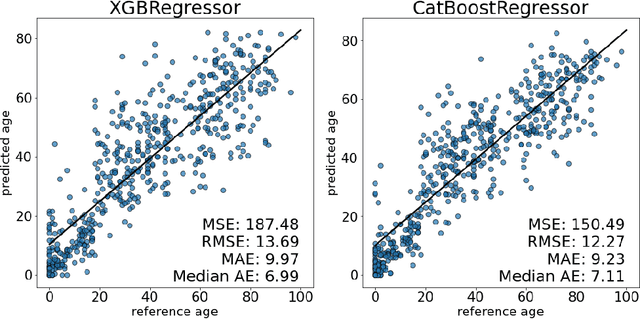

Challenges facing the explainability of age prediction models: case study for two modalities

Mar 12, 2023

Abstract: The prediction of age is a challenging task with various practical applications in high-impact fields such as healthcare and criminology. Despite the growing number of models and their increasing performance, we still know little about how these models work. Numerous examples of failures of AI systems show that performance alone is insufficient; thus, new methods are needed to explore and explain the reasons for the model's predictions. In this paper, we investigate the use of Explainable Artificial Intelligence (XAI) for age prediction, focusing on two specific modalities: EEG signal and lung X-rays. We share predictive models for age to facilitate further research on new techniques to explain models for these modalities.
