Wenqi Ge

Commonsense Scene Graph-based Target Localization for Object Search

Mar 30, 2024

Abstract: Object search is a fundamental skill for household robots, yet the core problem lies in the robot's ability to locate the target object accurately. The dynamic nature of household environments, characterized by the arbitrary placement of daily objects by users, makes target localization challenging. To locate the target object efficiently, the robot needs knowledge at both the object level and the room level. However, existing approaches rely on only one type of knowledge, leading to unsatisfactory object localization performance and, consequently, inefficient object search. To address this problem, we propose a commonsense scene graph-based target localization method, CSG-TL, to enhance target object search in household environments. Given a pre-built map with stationary items, the robot combines room-level knowledge with object-level commonsense knowledge generated by a large language model (LLM) into a commonsense scene graph (CSG), which supplies both types of knowledge to CSG-TL. To demonstrate the superiority of CSG-TL on target localization, extensive experiments are performed on the real-world ScanNet dataset and the AI2THOR simulator. Moreover, we have extended CSG-TL to an object search framework, CSG-OS, validated in both simulated and real-world environments. Code and videos are available at https://sites.google.com/view/csg-os.

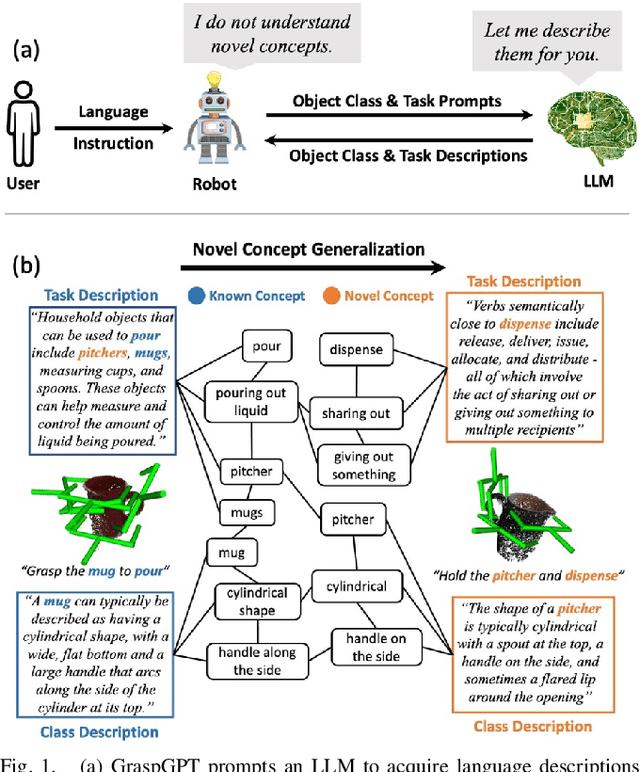

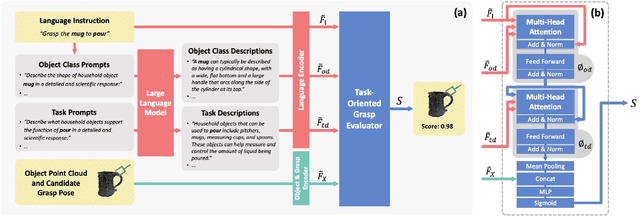

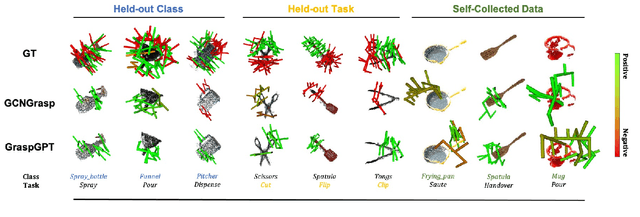

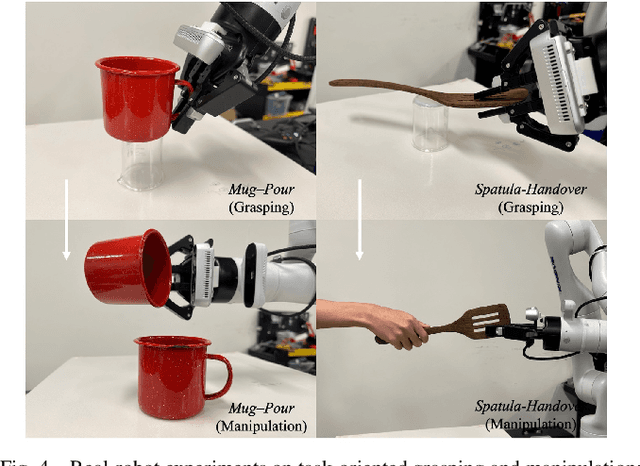

GraspGPT: Leveraging Semantic Knowledge from a Large Language Model for Task-Oriented Grasping

Jul 30, 2023

Abstract: Task-oriented grasping (TOG) refers to the problem of predicting grasps on an object that enable subsequent manipulation tasks. To model the complex relationships between objects, tasks, and grasps, existing methods incorporate semantic knowledge as priors into TOG pipelines. However, this semantic knowledge is typically constructed from closed-world concept sets, which restricts generalization to novel concepts outside the pre-defined sets. To address this issue, we propose GraspGPT, a large language model (LLM) based TOG framework that leverages the open-ended semantic knowledge of an LLM to achieve zero-shot generalization to novel concepts. We conduct experiments on the Language Augmented TaskGrasp (LA-TaskGrasp) dataset and demonstrate that GraspGPT outperforms existing TOG methods on different held-out settings when generalizing to novel concepts outside the training set. The effectiveness of GraspGPT is further validated in real-robot experiments. Our code, data, appendix, and video are publicly available at https://sites.google.com/view/graspgpt/.
