Wenjing Chu

On the Out-Of-Distribution Generalization of Multimodal Large Language Models

Feb 09, 2024

Abstract: We investigate the generalization boundaries of current Multimodal Large Language Models (MLLMs) via comprehensive evaluation under out-of-distribution scenarios and domain-specific tasks. We evaluate their zero-shot generalization across synthetic images, real-world distributional shifts, and specialized datasets like medical and molecular imagery. Empirical results indicate that MLLMs struggle with generalization beyond common training domains, limiting their direct application without adaptation. To understand the cause of unreliable performance, we analyze three hypotheses: semantic misinterpretation, visual feature extraction insufficiency, and mapping deficiency. Results identify mapping deficiency as the primary hurdle. To address this problem, we show that in-context learning (ICL) can significantly enhance MLLMs' generalization, opening new avenues for overcoming generalization barriers. We further explore the robustness of ICL under distribution shifts and show its vulnerability to domain shifts, label shifts, and spurious correlation shifts between in-context examples and test data.

A Decentralized Approach Towards Responsible AI in Social Ecosystems

Feb 12, 2021

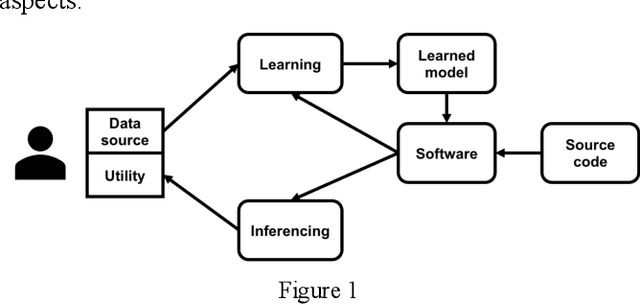

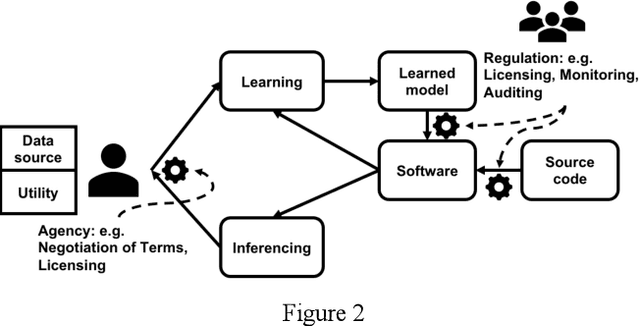

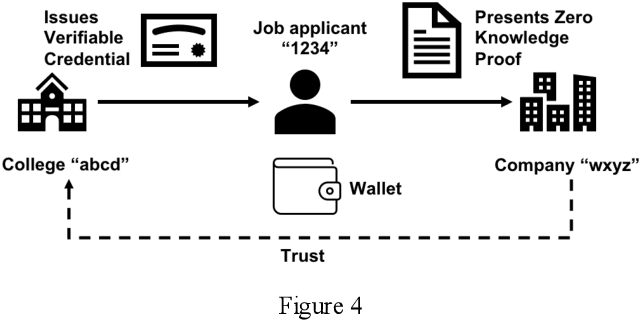

Abstract: For AI technology to fulfill its promise, we must design effective mechanisms into AI systems to support responsible AI behavior and curtail potential irresponsible use, e.g., in areas of privacy protection, human autonomy, robustness, and prevention of biases and discrimination in automated decision making. In this paper, we present a framework that provides computational facilities for parties in a social ecosystem to produce the desired responsible AI behaviors. To achieve this goal, we analyze AI systems at the architecture level and propose two decentralized cryptographic mechanisms for an AI system architecture: (1) using Autonomous Identity to empower human users, and (2) automating rules and adopting conventions within social institutions. We then propose a decentralized approach and outline the key concepts and mechanisms based on Decentralized Identifier (DID) and Verifiable Credentials (VC) for a general-purpose computational infrastructure to realize these mechanisms. We argue that a decentralized approach is the most promising path towards Responsible AI from both the computer science and social science perspectives.
