Wenbo Lan

Vision-Based UAV Localization System in Denial Environments

Jan 23, 2022

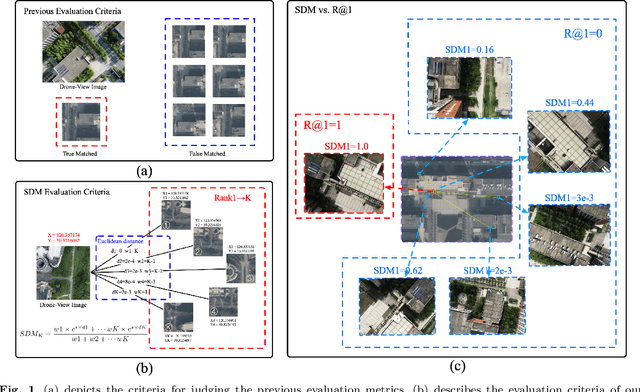

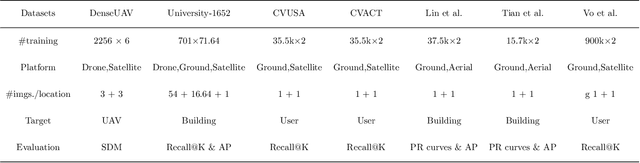

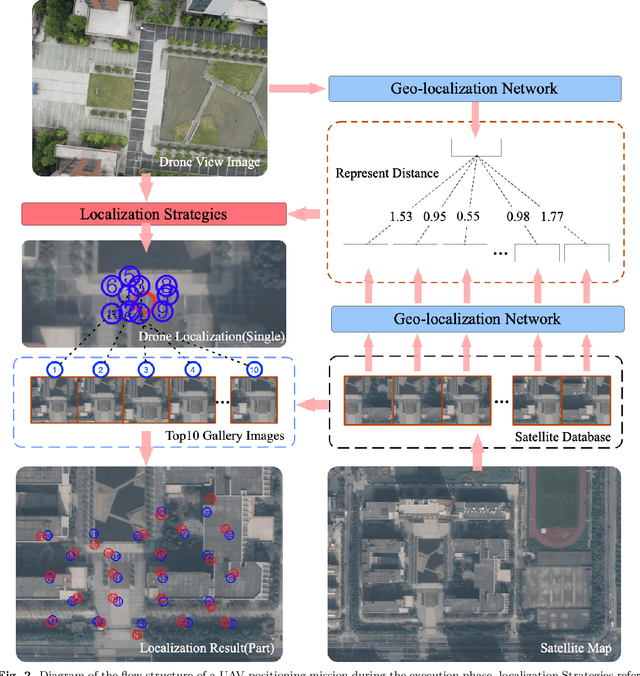

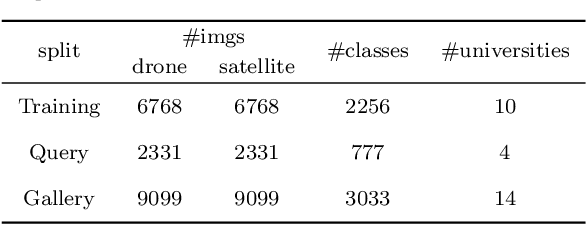

Abstract: Unmanned Aerial Vehicle (UAV) localization capability is critical in Global Navigation Satellite System (GNSS) denial environments. This paper investigates how a UAV can locate itself through a purely visual approach. The task is to match images acquired by the UAV's camera against the corresponding geo-tagged satellite images when the UAV cannot acquire GNSS signals, so that the satellite images serve as the bridge between the UAV images and the location information. However, the sampling points of previous UAV-based cross-view datasets are spatially discrete, and inter-class relationships are not established. In the actual process of UAV localization, the inter-class feature similarity of the proximity position distribution should be small due to the continuity of UAV movement in space. In view of this, the paper constructs a densely sampled dataset for UAV positioning tasks, named DenseUAV, which aims to address the problems caused by spatial distance and scale transformation in practical application scenarios, so as to achieve high-precision UAV localization in GNSS denial environments. In addition, a new continuum-type evaluation metric named SDM is designed to evaluate model matching accuracy by exploiting the continuity of UAV movement in space. Specifically, drawing on the ideas of Siamese networks and metric learning, a transformer-based baseline is constructed to enhance the capture of spatially subtle features. Finally, a neighbor-search post-processing strategy is proposed to solve the problem of large-distance localization bias.
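The matching step described in the abstract can be pictured as nearest-neighbor retrieval over image embeddings. The following is a minimal sketch under stated assumptions, not the paper's implementation: it assumes some feature extractor has already mapped the UAV image and each satellite tile to a non-zero vector, and it uses cosine similarity as the matching score. The names `cosine_similarity`, `locate`, and `gallery`, along with the toy vectors and coordinates, are hypothetical and chosen only for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length, non-zero feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def locate(query_feature, gallery):
    """Return the geo-tag of the satellite tile most similar to the query.

    gallery: list of (feature_vector, (latitude, longitude)) pairs.
    """
    _, best_tag = max(
        gallery, key=lambda item: cosine_similarity(query_feature, item[0])
    )
    return best_tag


# Toy, made-up embeddings and coordinates (assumptions for illustration only).
gallery = [
    ([1.0, 0.0, 0.0], (30.0, 120.0)),
    ([0.0, 1.0, 0.0], (30.001, 120.0)),
    ([0.0, 0.0, 1.0], (30.002, 120.0)),
]
uav_feature = [0.1, 0.9, 0.2]
estimated_position = locate(uav_feature, gallery)
```

In this sketch the UAV's position estimate is simply the geo-tag attached to the best-matching satellite tile, which is the sense in which the satellite images act as a bridge between UAV imagery and location. A learned embedding, such as one produced by a Siamese network trained with a metric-learning loss, would take the place of the hand-written vectors.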
