Weixun Zhou

Region Convolutional Features for Multi-Label Remote Sensing Image Retrieval

Jul 23, 2018

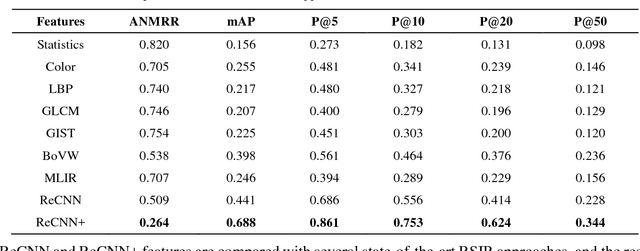

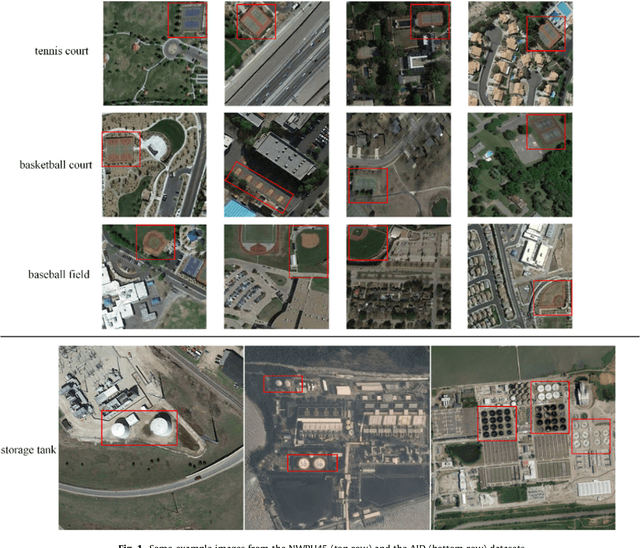

Abstract: Conventional remote sensing image retrieval (RSIR) systems usually perform single-label retrieval, where each image is annotated by a single label representing the most significant semantic content of the image. This assumption, however, ignores the complexity of remote sensing images, where an image might have multiple classes (i.e., multiple labels), thus resulting in worse retrieval performance. We therefore propose a novel multi-label RSIR approach with fully convolutional networks (FCN). In our approach, we first train an FCN model using a pixel-wise labeled dataset, and the trained FCN is then used to predict the segmentation map of each image in the considered archive. We finally extract region convolutional features of each image based on its segmentation map. The region features can either be used to perform region-based retrieval or be further post-processed to obtain a feature vector for similarity measurement. The experimental results show that our approach achieves state-of-the-art performance compared with conventional single-label and recent multi-label RSIR approaches.
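
The idea of extracting region features from a segmentation map can be illustrated with a minimal sketch. The abstract does not say how the features inside a region are aggregated, so the mean pooling used here is an assumption, and the function name `region_features` and the nested-list data layout are hypothetical, chosen only for illustration.

```python
from collections import defaultdict


def region_features(feature_map, seg_map):
    """Average the feature vectors of all pixels that share a segmentation label.

    feature_map: H x W grid of feature vectors (lists of floats), e.g. a
                 convolutional activation resized to the segmentation map.
    seg_map:     H x W grid of integer class labels.
    Returns a dict mapping each label to its mean feature vector.

    Note: mean pooling is an assumption, not the method stated in the abstract.
    """
    sums = {}
    counts = defaultdict(int)
    for feat_row, label_row in zip(feature_map, seg_map):
        for feat, label in zip(feat_row, label_row):
            if label not in sums:
                sums[label] = [0.0] * len(feat)
            # Accumulate this pixel's features into its region's running sum.
            for k, value in enumerate(feat):
                sums[label][k] += value
            counts[label] += 1
    return {
        label: [s / counts[label] for s in total]
        for label, total in sums.items()
    }


# Toy 2 x 2 image with 2-dimensional features and two regions (labels 0 and 1).
toy_features = [
    [[1.0, 0.0], [3.0, 2.0]],
    [[0.0, 4.0], [0.0, 8.0]],
]
toy_labels = [
    [0, 0],
    [1, 1],
]
regions = region_features(toy_features, toy_labels)
```

Under these assumptions, each image yields one vector per predicted class, which could be compared region by region or combined into a single image-level vector.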

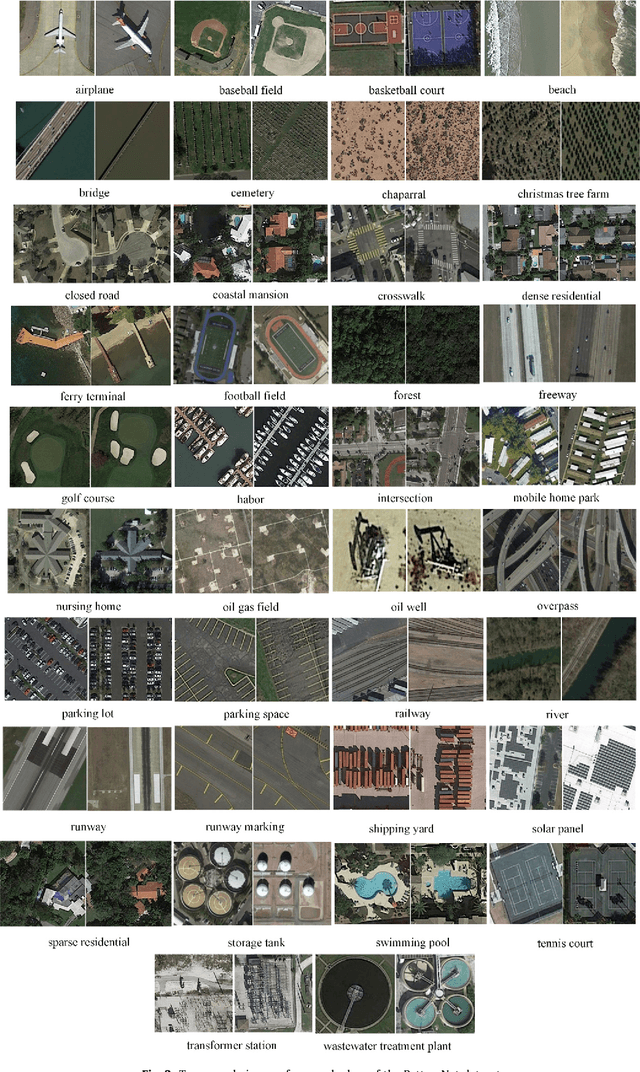

PatternNet: A Benchmark Dataset for Performance Evaluation of Remote Sensing Image Retrieval

Jul 10, 2017

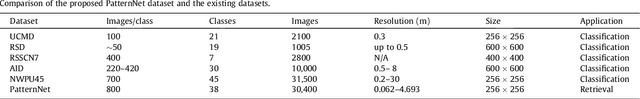

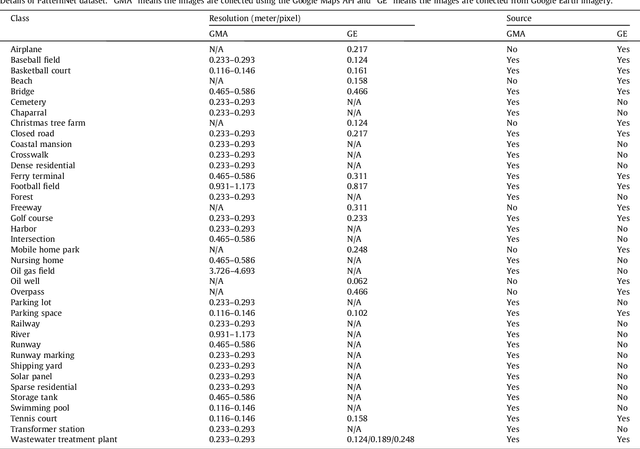

Abstract: Remote sensing image retrieval (RSIR), which aims to efficiently retrieve data of interest from large collections of remote sensing data, is a fundamental task in remote sensing. Over the past several decades, there has been significant effort to extract powerful feature representations for this task, since the retrieval performance depends on the representative strength of the features. Benchmark datasets are also critical for developing, evaluating, and comparing RSIR approaches. Current benchmark datasets are deficient in that 1) they were originally collected for land use/land cover classification and not image retrieval, 2) they are relatively small in terms of the number of classes as well as the number of sample images per class, and 3) the retrieval performance has saturated. These limitations have severely restricted the development of novel feature representations for RSIR, particularly the recent deep-learning-based features, which require large amounts of training data. We therefore present in this paper a new large-scale remote sensing dataset termed "PatternNet" that was collected specifically for RSIR. PatternNet was collected from high-resolution imagery and contains 38 classes with 800 images per class. We also provide a thorough review of RSIR approaches, ranging from traditional handcrafted-feature-based methods to recent deep-learning-based ones. We evaluate over 35 methods to establish extensive baseline results for future RSIR research using the PatternNet benchmark.

Learning Low Dimensional Convolutional Neural Networks for High-Resolution Remote Sensing Image Retrieval

Dec 30, 2016

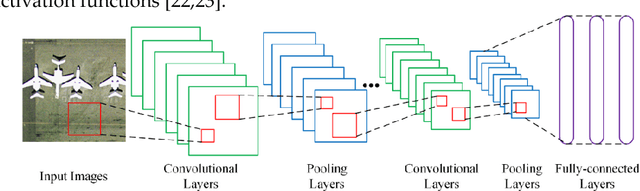

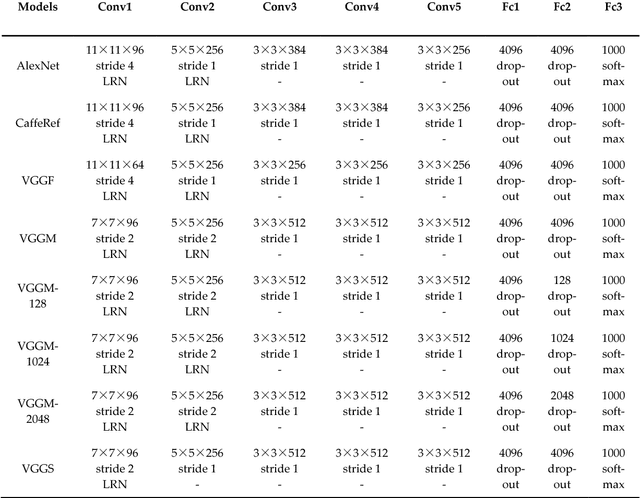

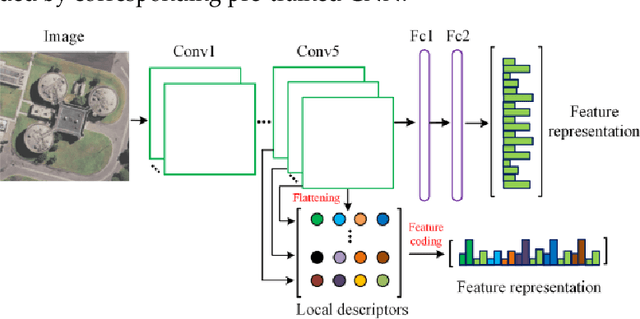

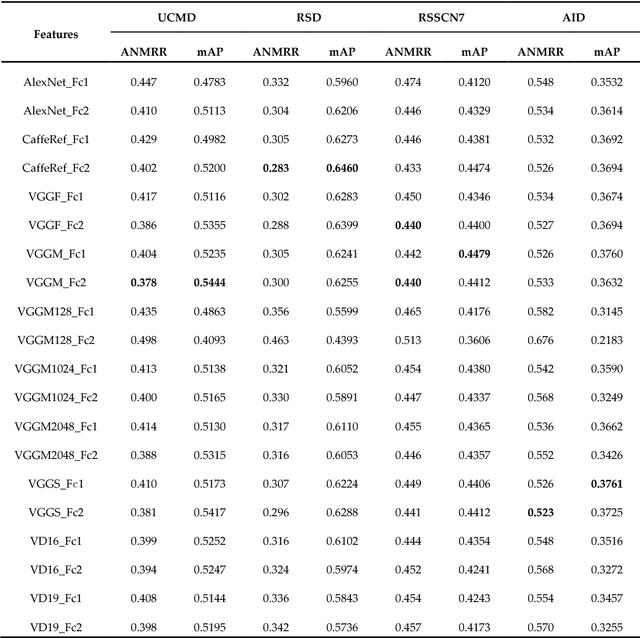

Abstract: Learning powerful feature representations for image retrieval has always been a challenging task in the field of remote sensing. Traditional methods focus on extracting low-level hand-crafted features, which are not only time-consuming but also tend to achieve unsatisfactory performance due to the content complexity of remote sensing images. In this paper, we investigate how to extract deep feature representations based on convolutional neural networks (CNN) for high-resolution remote sensing image retrieval (HRRSIR). To this end, two effective schemes are proposed to generate powerful feature representations for HRRSIR. In the first scheme, the deep features are extracted from the fully connected and convolutional layers of the pre-trained CNN models, respectively; in the second scheme, we propose a novel CNN architecture based on conventional convolution layers and a three-layer perceptron. The novel CNN model is then trained on a large remote sensing dataset to learn low-dimensional features. The two schemes are evaluated on several challenging public datasets, and the results indicate that the proposed schemes, and in particular the novel CNN, are able to achieve state-of-the-art performance.