Weihu Song

Patch Network for medical image Segmentation

Feb 23, 2023

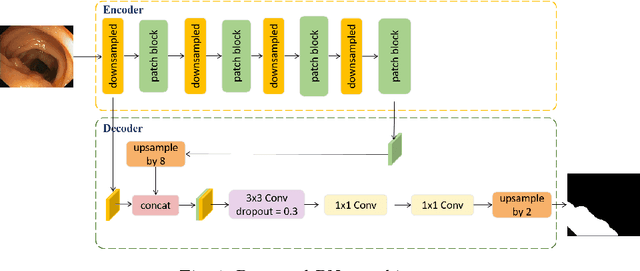

Abstract: Accurate and fast segmentation of medical images is clinically essential. Current methods, however, fall into two camps: convolutional neural networks, which offer fast inference but struggle to learn contextual image features, and transformers, which perform well but have high hardware requirements. In this paper, we present a Patch Network (PNet) that incorporates the Swin Transformer notion into a convolutional neural network, allowing it to gather richer contextual information while balancing speed and accuracy. We test PNet on polyp (CVC-ClinicDB and ETIS-LaribPolypDB) and skin (ISIC-2018 skin lesion segmentation challenge) segmentation datasets. PNet achieves SOTA performance in both speed and accuracy.

PLU-Net: Extraction of multi-scale feature fusion

Feb 23, 2023

Abstract: Deep learning algorithms have achieved remarkable results in medical image segmentation in recent years. However, despite their enormous parameter counts, these networks handle image boundaries and details poorly, which degrades segmentation quality. To address this issue, we combine atrous spatial pyramid pooling (ASPP) with the Squeeze-and-Excitation block (SE block) to form the PS module, which employs a broader, multi-scale receptive field at the network's bottom to obtain more detailed semantic information. We also propose the Local Guided block (LG block) and combine it with the SE block to form the LS block, which extracts richer local features from the feature map so that more edge information is retained in each downsampling step, thereby improving boundary segmentation. We integrate the PS module and LS block into U-Net to obtain PLU-Net. We test PLU-Net on three benchmark datasets, and the results show that it achieves superior performance on medical semantic segmentation tasks with fewer parameters and FLOPs.
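To make the "broader and multi-scale receptive field" in the PLU-Net abstract concrete, the sketch below computes how many input pixels a single dilated (atrous) kernel spans, using the standard relation k_eff = k + (k - 1)(d - 1). The dilation rates are an assumption borrowed from common ASPP configurations; the abstract does not state which rates the PS module uses, and the function names are illustrative only.

```python
def effective_kernel_size(kernel_size: int, dilation: int) -> int:
    """Width (in input pixels) spanned by one dilated convolution kernel."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


# Hypothetical ASPP branch rates; NOT taken from the PLU-Net abstract.
ASSUMED_RATES = (1, 6, 12, 18)


def aspp_branch_spans(kernel_size: int = 3, rates=ASSUMED_RATES) -> dict:
    """Map each assumed dilation rate to the span of its kernel."""
    return {rate: effective_kernel_size(kernel_size, rate) for rate in rates}


if __name__ == "__main__":
    for rate, span in aspp_branch_spans().items():
        print(f"dilation {rate:>2}: 3x3 kernel spans {span}x{span} input pixels")
```

Each branch keeps the same nine weights per channel pair while covering a progressively wider area, which is why parallel dilated branches can widen the receptive field without a matching growth in parameters.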

Non-pooling Network for medical image segmentation

Feb 21, 2023

Abstract: Existing studies tend to focus on model modifications and integration for higher accuracy, which improve performance but also carry huge computational costs, resulting in longer detection times. In medical imaging, time is extremely critical. At present, most semantic segmentation models have an encoder-decoder structure or a double-branch structure. Their repeated use of pooling to extract high-level semantic information causes information loss, even though unpooling or similar operations are applied to restore it. In addition, we notice that visual attention mechanisms have superior performance on a variety of tasks. Given this, this paper proposes a non-pooling network (NPNet): the non-pooling design reduces the loss of information, and the attention enhancement module (AM) effectively increases the weight of useful information. The method greatly reduces the number of parameters and the computation cost through its shallow neural network structure. We evaluate NPNet on three benchmark datasets, comparing it with multiple current state-of-the-art (SOTA) models, and the results show that NPNet achieves SOTA performance, with an excellent balance between accuracy and speed.

GPU-Net: Lightweight U-Net with more diverse features

Jan 07, 2022

Abstract: Image segmentation is an important task in the medical imaging field, and many methods based on convolutional neural networks (CNNs) have been proposed, among which U-Net and its variants show promising performance. In this paper, we propose the GP-module and GPU-Net, both based on U-Net, which learn more diverse features by introducing the Ghost module and atrous spatial pyramid pooling (ASPP). Our method achieves better performance with more than 4 times fewer parameters and 2 times fewer FLOPs, which provides a new potential direction for future research. Our plug-and-play module can also be applied to existing segmentation methods to further improve their performance.
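As a rough illustration of why a Ghost module can shrink a layer, the sketch below compares the weight count of a standard convolution with that of a Ghost-style replacement: a smaller primary convolution followed by cheap depthwise operations that generate the remaining feature maps. The layer sizes, the `ratio` of 2, and the 3x3 cheap kernel are assumptions chosen for illustration; the sketch covers a single layer only and does not reproduce the network-level savings reported in the abstract.

```python
import math


def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a standard k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def ghost_params(c_in: int, c_out: int, k: int,
                 ratio: int = 2, cheap_k: int = 3) -> int:
    """Weights in a Ghost-style layer producing c_out channels (bias ignored).

    `ratio` and `cheap_k` are assumed values, not taken from the abstract.
    """
    intrinsic = math.ceil(c_out / ratio)       # maps from the primary conv
    primary = c_in * intrinsic * k * k         # ordinary convolution
    cheap = intrinsic * (ratio - 1) * cheap_k * cheap_k  # depthwise "ghost" maps
    return primary + cheap


if __name__ == "__main__":
    standard = conv_params(64, 128, 3)
    ghost = ghost_params(64, 128, 3)
    print(f"standard: {standard}, ghost: {ghost}, "
          f"reduction: {standard / ghost:.2f}x")
```

With these assumed sizes the per-layer reduction approaches the chosen `ratio`, since the depthwise operations contribute comparatively few weights.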
