Wei Zhang

Improving Neural Relation Extraction with Positive and Unlabeled Learning

Nov 28, 2019

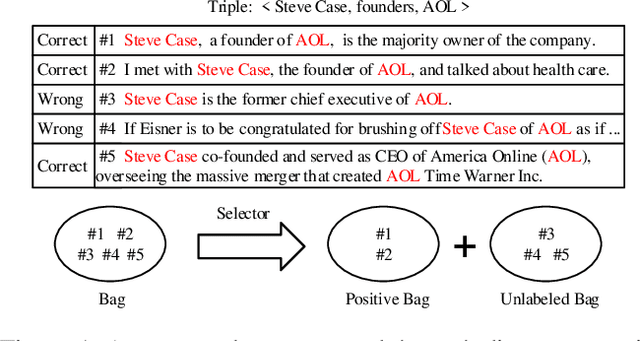

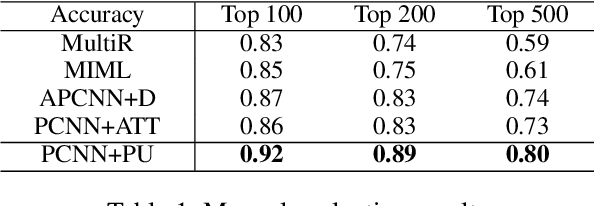

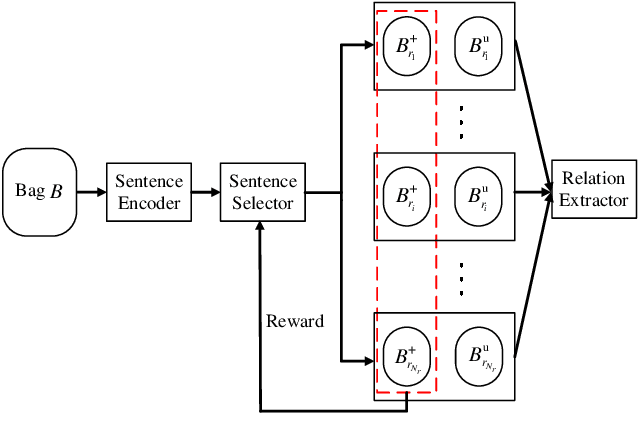

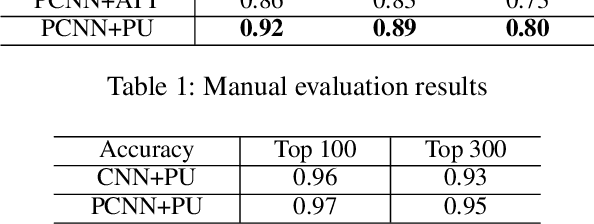

Abstract: We present a novel approach that improves distantly supervised relation extraction with Positive and Unlabeled (PU) Learning. The approach first applies reinforcement learning to decide whether a sentence is a positive instance of a given relation, and then constructs positive and unlabeled bags from the result. In contrast to most previous studies, which use only the selected positive instances, we make full use of the unlabeled instances and propose two new representations, one for positive bags and one for unlabeled bags. The two representations are then combined to make a bag-level prediction. Experimental results on a widely used real-world dataset show that the approach achieves significant and consistent improvements over several competitive baselines.
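The abstract does not specify how the bags are built or how the two representations are combined, so the following is only a minimal sketch of the general idea under stated assumptions. The per-sentence `keep_probs` stand in for the decisions of the reinforcement-learning selector, mean pooling stands in for the two proposed representations, and the mixing weight `alpha` and all function names are hypothetical rather than taken from the paper.

```python
from typing import List, Sequence, Tuple

Vector = List[float]


def split_bag(
    sentence_vecs: Sequence[Sequence[float]],
    keep_probs: Sequence[float],
    threshold: float = 0.5,
) -> Tuple[List[Vector], List[Vector]]:
    """Partition one bag into positive and unlabeled sentence vectors.

    ASSUMPTION: a sentence counts as positive when its selector score is at
    least `threshold`; the paper's selector is an RL policy, not a threshold.
    """
    positive: List[Vector] = []
    unlabeled: List[Vector] = []
    for vec, prob in zip(sentence_vecs, keep_probs):
        (positive if prob >= threshold else unlabeled).append(list(vec))
    return positive, unlabeled


def mean_vector(vectors: Sequence[Sequence[float]], dim: int) -> Vector:
    """Mean-pool a list of vectors; an empty list yields the zero vector."""
    if not vectors:
        return [0.0] * dim
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def bag_representation(
    sentence_vecs: Sequence[Sequence[float]],
    keep_probs: Sequence[float],
    alpha: float = 0.7,
    threshold: float = 0.5,
) -> Vector:
    """Combine positive-bag and unlabeled-bag representations.

    ASSUMPTION: a convex combination weighted by `alpha`; the paper only
    says the two representations are combined "in an appropriate way".
    """
    dim = len(sentence_vecs[0])
    positive, unlabeled = split_bag(sentence_vecs, keep_probs, threshold)
    pos_repr = mean_vector(positive, dim)
    unl_repr = mean_vector(unlabeled, dim)
    return [alpha * p + (1.0 - alpha) * u for p, u in zip(pos_repr, unl_repr)]


if __name__ == "__main__":
    vecs = [[1.0, 0.0], [3.0, 2.0], [0.0, 4.0]]
    probs = [0.9, 0.8, 0.1]  # hypothetical selector scores
    print(bag_representation(vecs, probs, alpha=0.5))
```

The point of the sketch is that the unlabeled sentences still contribute to the bag-level vector instead of being discarded, which is the contrast the abstract draws with methods that keep only the selected positives. In a real model the combined vector would feed a relation classifier.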
