Waleed Ragheb

ADVANSE

Emotional Speech Recognition with Pre-trained Deep Visual Models

Apr 06, 2022

Abstract: In this paper, we propose a new methodology for emotional speech recognition using visual deep neural network models. We exploit the transfer learning capabilities of pre-trained computer vision models and apply them to the task of emotion recognition in speech. To achieve this, we propose a composite set of acoustic features and a procedure to convert them into images. We also present a training paradigm for these models that takes into account the differences between acoustic-based images and regular ones. In our experiments, we use the pre-trained VGG-16 model and test the overall methodology on the Berlin EMO-DB dataset for speaker-independent emotion recognition. We evaluate the proposed model on the full set of seven emotions, and the results set a new state of the art.
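The abstract does not spell out how acoustic features become images, so the following is only a minimal sketch of one plausible conversion: min-max scaling a 2-D feature matrix (for example, frames by coefficients) to 8-bit intensities and replicating it across three channels, since pre-trained vision models such as VGG-16 expect RGB input. The function name `features_to_image` and the sample values are hypothetical and are not taken from the paper.

```python
def features_to_image(features):
    """Min-max scale a 2-D feature matrix to 0..255 and copy it to 3 channels.

    Illustrative assumption only: the paper's actual conversion procedure
    is not described in the abstract.
    """
    flat = [value for row in features for value in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid division by zero for constant input
    gray = [[round(255 * (value - lo) / span) for value in row] for row in features]
    # Replicate the single channel three times to mimic an RGB image.
    return [[[pixel, pixel, pixel] for pixel in row] for row in gray]


# Hypothetical 2x2 feature matrix, used only to show the shape of the output.
image = features_to_image([[0.0, 0.4], [1.0, 0.2]])
```

The result is a height x width x 3 nested list; in practice it would still need resizing to the input resolution the chosen vision model expects.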

Prediction of Missing Semantic Relations in Lexical-Semantic Network using Random Forest Classifier

Nov 12, 2019

Abstract: This study focuses on predicting six types of missing semantic relations (such as is_a and has_part) between two given nodes in RezoJDM, a French lexical-semantic network. The output of this prediction is a set of pairs in which the first entry is a semantic relation and the second entry is the probability that the relation exists. Given the statement of the problem, we choose a random forest (RF) classifier to tackle it. We take for granted the existing semantic relations, gathered and validated by crowdsourcing, and use them as the training/test dataset. We describe how these ideas can be applied after using the node2vec approach in the feature extraction phase, and we show that this approach can lead to acceptable results.
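The output format described above, pairs of (relation, probability), can be sketched as follows. This is an illustration under stated assumptions rather than the authors' implementation: it estimates each probability as the fraction of trees voting for a relation, which is one common way for a random forest to produce class probabilities. The vote list is made up, and only the two relation names mentioned in the abstract are used.

```python
from collections import Counter

# Only these two relation names appear in the abstract; the other four are not listed.
RELATIONS = ["is_a", "has_part"]


def relation_probabilities(tree_votes):
    """Turn per-tree votes into (relation, probability) pairs, most likely first."""
    counts = Counter(tree_votes)
    total = len(tree_votes)
    pairs = [(relation, counts[relation] / total) for relation in RELATIONS]
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)


# Hypothetical votes from a four-tree forest for one pair of nodes.
pairs = relation_probabilities(["is_a", "is_a", "has_part", "is_a"])
```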

Attention-based Modeling for Emotion Detection and Classification in Textual Conversations

Jun 14, 2019

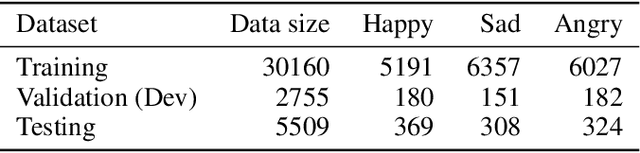

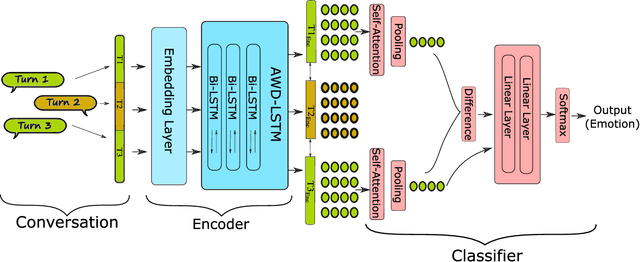

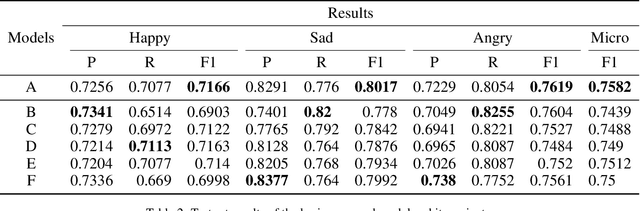

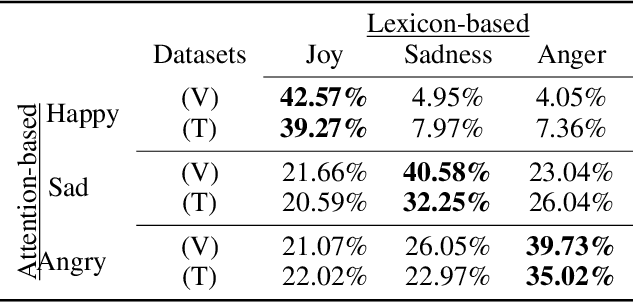

Abstract: This paper addresses the problem of modeling textual conversations and detecting emotions. Our proposed model makes use of 1) deep transfer learning rather than the classical shallow methods of word embedding, 2) self-attention mechanisms to focus on the most important parts of the texts, and 3) turn-based conversational modeling for classifying the emotions. The approach does not rely on any hand-crafted features or lexicons. Our model was evaluated on the data provided by the SemEval-2019 shared task on contextual emotion detection in text. The model shows very competitive results.
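To illustrate what "focusing on the most important parts of the texts" can mean, here is a minimal sketch of attention pooling: each token vector is scored against a query vector, the scores are normalized with a softmax, and the tokens are averaged with those weights. This is a simplified stand-in, not the architecture from the paper; the `query` vector (learned during training in a real model) and the toy inputs are assumptions.

```python
import math


def attention_pool(token_vectors, query):
    """Return softmax attention weights and the weighted sum of token vectors."""
    # Dot-product relevance score of each token against the query.
    scores = [sum(q * x for q, x in zip(query, vector)) for vector in token_vectors]
    # Numerically stable softmax over the scores.
    top = max(scores)
    exps = [math.exp(score - top) for score in scores]
    norm = sum(exps)
    weights = [e / norm for e in exps]
    # Weighted sum of the token vectors, one dimension at a time.
    dim = len(token_vectors[0])
    pooled = [
        sum(w * vector[i] for w, vector in zip(weights, token_vectors))
        for i in range(dim)
    ]
    return weights, pooled


# Two toy 2-D token vectors; the query favours the first dimension.
weights, pooled = attention_pool([[1.0, 0.0], [0.0, 1.0]], query=[2.0, 0.0])
```

Tokens that align with the query receive larger weights and so contribute more to the pooled representation passed on to a classifier.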
